.supabase.co/rest/v1/`.

The REST interface is automatically reflected from your database's schema and is:

* **Instant and auto-generated.**

As you update your database the changes are immediately accessible through your API.

* **Self documenting.**

Supabase generates documentation in the Dashboard which updates as you make database changes.

* **Secure.**

The API is configured to work with PostgreSQL's Row Level Security, provisioned behind an API gateway with key-auth enabled.

* **Fast.**

Our benchmarks for basic reads are more than 300% faster than Firebase. The API is a very thin layer on top of Postgres, which does most of the heavy lifting.

* **Scalable.**

The API can serve thousands of simultaneous requests, and works well for Serverless workloads.

The reflected API is designed to retain as much of Postgres' capability as possible including:

* Basic CRUD operations (Create/Read/Update/Delete)

* Arbitrarily deep relationships among tables/views, functions that return table types can also nest related tables/views.

* Works with Postgres Views, Materialized Views and Foreign Tables

* Works with Postgres Functions

* User defined computed columns and computed relationships

* The Postgres security model - including Row Level Security, Roles, and Grants.

The REST API resolves all requests to a single SQL statement leading to fast response times and high throughput.

Reference:

* [Docs](https://postgrest.org/)

* [Source Code](https://github.com/PostgREST/postgrest)

## API URL and keys

You can find the API URL and Keys in the [Dashboard](/dashboard/project/_/settings/api-keys).

# Auth

Use Supabase to authenticate and authorize your users.

Supabase Auth makes it easy to implement authentication and authorization in your app. We provide client SDKs and API endpoints to help you create and manage users.

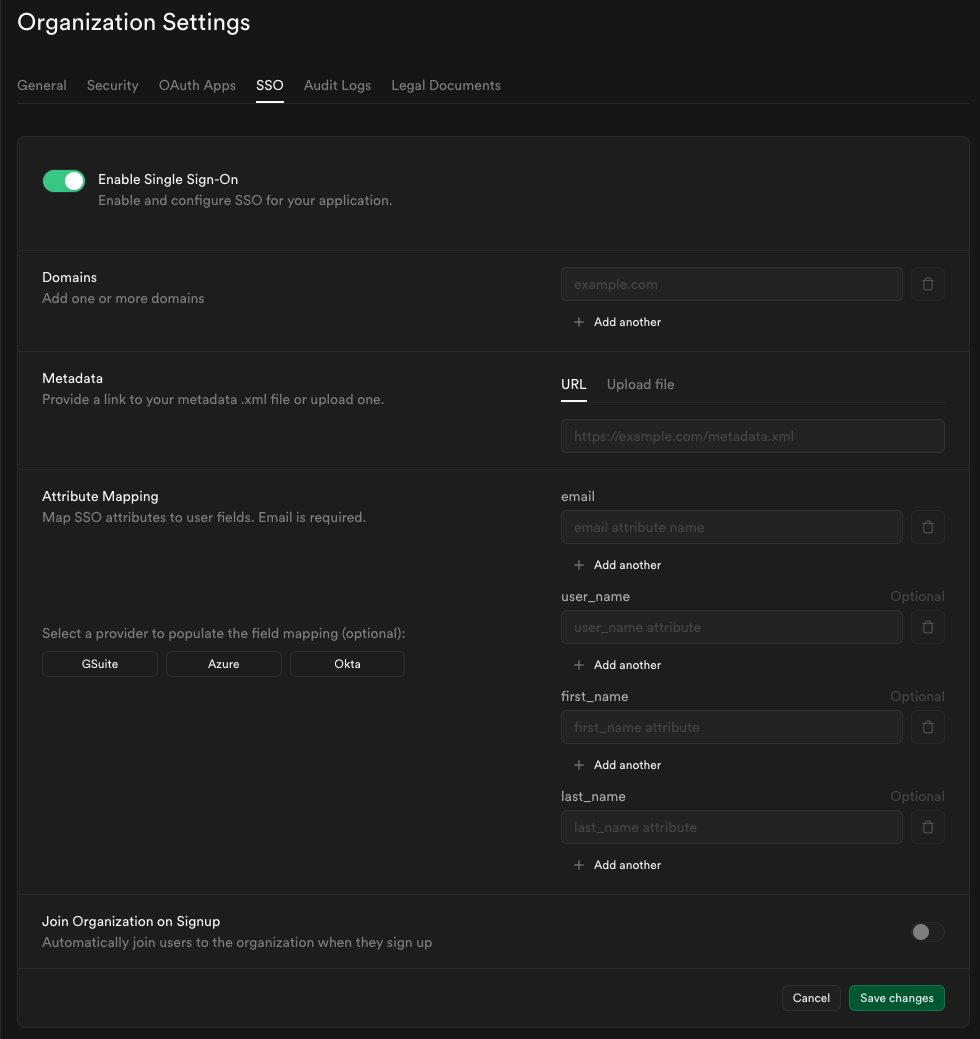

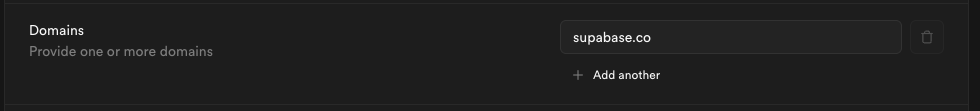

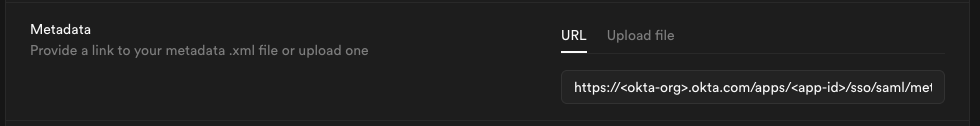

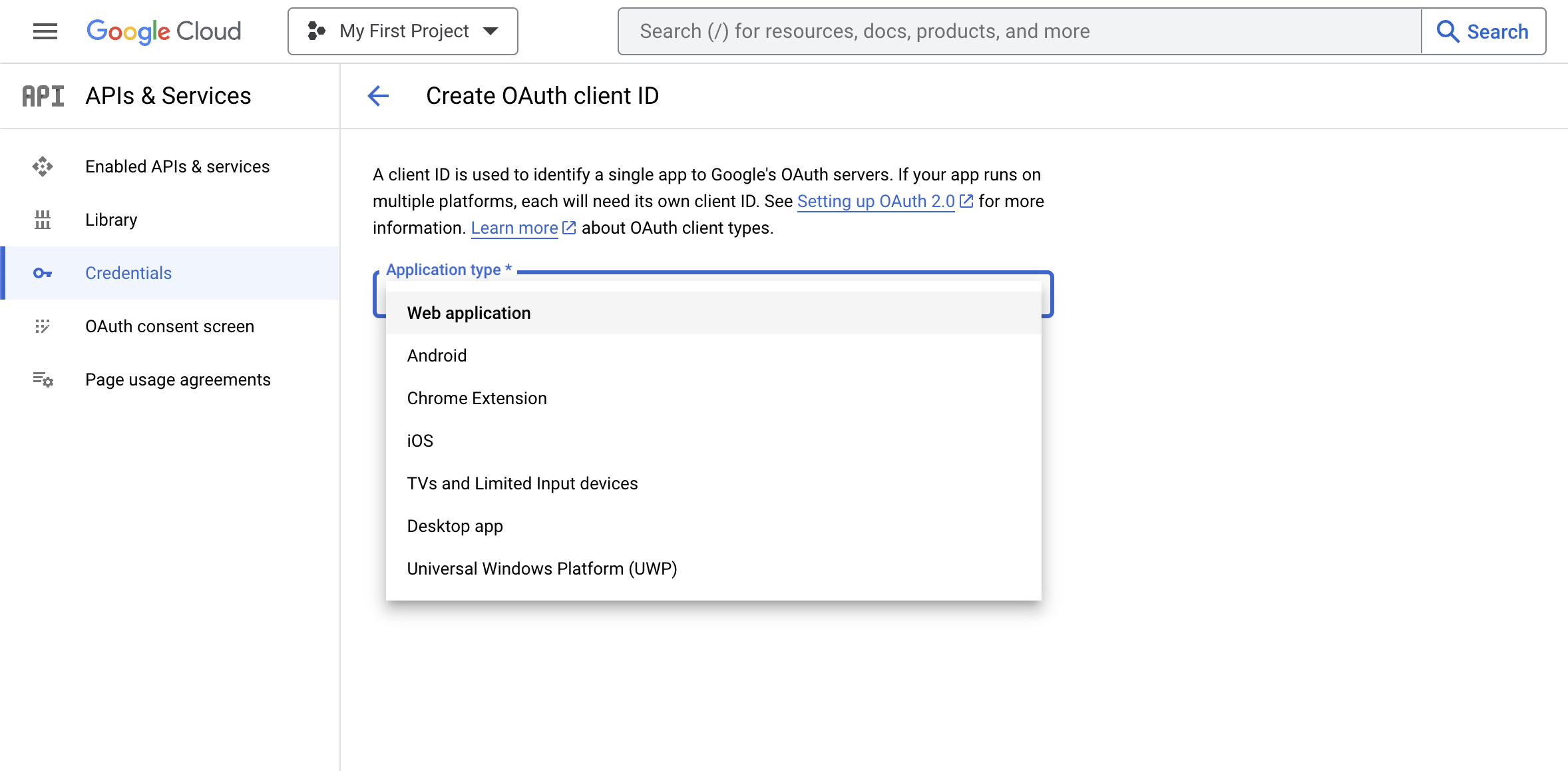

Your users can use many popular Auth methods, including password, magic link, one-time password (OTP), social login, and single sign-on (SSO).

## About authentication and authorization

Authentication and authorization are the core responsibilities of any Auth system.

* **Authentication** means checking that a user is who they say they are.

* **Authorization** means checking what resources a user is allowed to access.

Supabase Auth uses [JSON Web Tokens (JWTs)](/docs/guides/auth/jwts) for authentication. For a complete reference of all JWT fields, see the [JWT Fields Reference](/docs/guides/auth/jwt-fields). Auth integrates with Supabase's database features, making it easy to use [Row Level Security (RLS)](/docs/guides/database/postgres/row-level-security) for authorization.

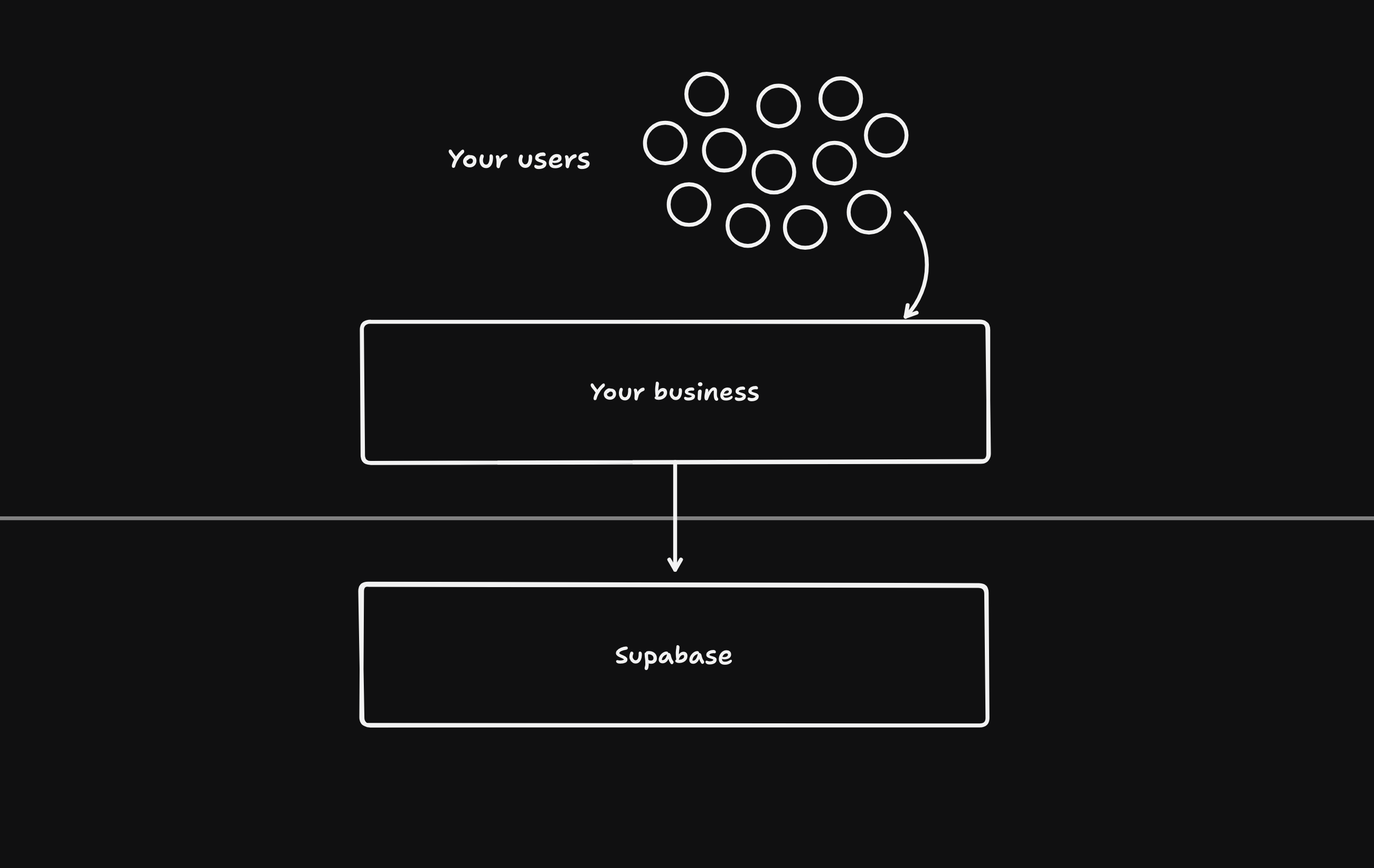

## The Supabase ecosystem

You can use Supabase Auth as a standalone product, but it's also built to integrate with the Supabase ecosystem.

Auth uses your project's Postgres database under the hood, storing user data and other Auth information in a special schema. You can connect this data to your own tables using triggers and foreign key references.

Auth also enables access control to your database's automatically generated [REST API](/docs/guides/api). When using Supabase SDKs, your data requests are automatically sent with the user's Auth Token. The Auth Token scopes database access on a row-by-row level when used along with [RLS policies](/docs/guides/database/postgres/row-level-security).

## Providers

Supabase Auth works with many popular Auth methods, including Social and Phone Auth using third-party providers. See the following sections for a list of supported third-party providers.

### Social Auth

### Phone Auth

## Pricing

Charges apply to Monthly Active Users (MAU), Monthly Active Third-Party Users (Third-Party MAU), and Monthly Active SSO Users (SSO MAU) and Advanced MFA Add-ons. For a detailed breakdown of how these charges are calculated, refer to the following pages:

* [Pricing MAU](/docs/guides/platform/manage-your-usage/monthly-active-users)

* [Pricing Third-Party MAU](/docs/guides/platform/manage-your-usage/monthly-active-users-third-party)

* [Pricing SSO MAU](/docs/guides/platform/manage-your-usage/monthly-active-users-sso)

* [Advanced MFA - Phone](/docs/guides/platform/manage-your-usage/advanced-mfa-phone)

# Local Dev with CLI

Developing locally using the Supabase CLI.

You can use the Supabase CLI to run the entire Supabase stack locally on your machine, by running `supabase init` and then `supabase start`. To install the CLI, see the [installation guide](/docs/guides/cli/getting-started#installing-the-supabase-cli).

The Supabase CLI provides tools to develop your project locally, deploy to the Supabase Platform, handle database migrations, and generate types directly from your database schema.

## Resources

{[

{

name: 'Supabase CLI',

description:

'The Supabase CLI provides tools to develop manage your Supabase projects from your local machine.',

href: 'https://github.com/supabase/cli',

},

{

name: 'GitHub Action',

description: ' A GitHub action for interacting with your Supabase projects using the CLI.',

href: 'https://github.com/supabase/setup-cli',

},

].map((x) => (

{x.description}

))}

{[

{

name: 'With supabase-js',

description: 'Use the Supabase client inside your Edge Function.',

href: '/guides/functions/auth',

},

{

name: 'Type-Safe SQL with Kysely',

description:

'Combining Kysely with Deno Postgres gives you a convenient developer experience for interacting directly with your Postgres database.',

href: '/guides/functions/kysely-postgres',

},

{

name: 'Monitoring with Sentry',

description: 'Monitor Edge Functions with the Sentry Deno SDK.',

href: '/guides/functions/examples/sentry-monitoring',

},

{

name: 'With CORS headers',

description: 'Send CORS headers for invoking from the browser.',

href: '/guides/functions/cors',

},

{

name: 'React Native with Stripe',

description: 'Full example for using Supabase and Stripe, with Expo.',

href: 'https://github.com/supabase-community/expo-stripe-payments-with-supabase-functions',

},

{

name: 'Flutter with Stripe',

description: 'Full example for using Supabase and Stripe, with Flutter.',

href: 'https://github.com/supabase-community/flutter-stripe-payments-with-supabase-functions',

},

{

name: 'Building a RESTful Service API',

description:

'Learn how to use HTTP methods and paths to build a RESTful service for managing tasks.',

href: 'https://github.com/supabase/supabase/blob/master/examples/edge-functions/supabase/functions/restful-tasks/index.ts',

},

{

name: 'Working with Supabase Storage',

description: 'An example on reading a file from Supabase Storage.',

href: 'https://github.com/supabase/supabase/blob/master/examples/edge-functions/supabase/functions/read-storage/index.ts',

},

{

name: 'Open Graph Image Generation',

description: 'Generate Open Graph images with Deno and Supabase Edge Functions.',

href: '/guides/functions/examples/og-image',

},

{

name: 'OG Image Generation & Storage CDN Caching',

description: 'Cache generated images with Supabase Storage CDN.',

href: 'https://github.com/supabase/supabase/tree/master/examples/edge-functions/supabase/functions/og-image-with-storage-cdn',

},

{

name: 'Get User Location',

description: `Get user location data from user's IP address.`,

href: 'https://github.com/supabase/supabase/tree/master/examples/edge-functions/supabase/functions/location',

},

{

name: 'Cloudflare Turnstile',

description: `Protecting Forms with Cloudflare Turnstile.`,

href: '/guides/functions/examples/cloudflare-turnstile',

},

{

name: 'Connect to Postgres',

description: `Connecting to Postgres from Edge Functions.`,

href: '/guides/functions/connect-to-postgres',

},

{

name: 'GitHub Actions',

description: `Deploying Edge Functions with GitHub Actions.`,

href: '/guides/functions/examples/github-actions',

},

{

name: 'Oak Server Middleware',

description: `Request Routing with Oak server middleware.`,

href: 'https://github.com/supabase/supabase/tree/master/examples/edge-functions/supabase/functions/oak-server',

},

{

name: 'Hugging Face',

description: `Access 100,000+ Machine Learning models.`,

href: '/guides/ai/examples/huggingface-image-captioning',

},

{

name: 'Amazon Bedrock',

description: `Amazon Bedrock Image Generator`,

href: '/guides/functions/examples/amazon-bedrock-image-generator',

},

{

name: 'OpenAI',

description: `Using OpenAI in Edge Functions.`,

href: '/guides/ai/examples/openai',

},

{

name: 'Stripe Webhooks',

description: `Handling signed Stripe Webhooks with Edge Functions.`,

href: '/guides/functions/examples/stripe-webhooks',

},

{

name: 'Send emails',

description: `Send emails in Edge Functions with Resend.`,

href: '/guides/functions/examples/send-emails',

},

{

name: 'Web Stream',

description: `Server-Sent Events in Edge Functions.`,

href: 'https://github.com/supabase/supabase/tree/master/examples/edge-functions/supabase/functions/streams',

},

{

name: 'Puppeteer',

description: `Generate screenshots with Puppeteer.`,

href: '/guides/functions/examples/screenshots',

},

{

name: 'Discord Bot',

description: `Building a Slash Command Discord Bot with Edge Functions.`,

href: '/guides/functions/examples/discord-bot',

},

{

name: 'Telegram Bot',

description: `Building a Telegram Bot with Edge Functions.`,

href: '/guides/functions/examples/telegram-bot',

},

{

name: 'Upload File',

description: `Process multipart/form-data.`,

href: 'https://github.com/supabase/supabase/tree/master/examples/edge-functions/supabase/functions/file-upload-storage',

},

{

name: 'Upstash Redis',

description: `Build an Edge Functions Counter with Upstash Redis.`,

href: '/guides/functions/examples/upstash-redis',

},

{

name: 'Rate Limiting',

description: `Rate Limiting Edge Functions with Upstash Redis.`,

href: '/guides/functions/examples/rate-limiting',

},

{

name: 'Slack Bot Mention Edge Function',

description: `Slack Bot handling Slack mentions in Edge Function`,

href: '/guides/functions/examples/slack-bot-mention',

},

].map((x) => (

{x.description}

))}

{[

{

title: 'Features',

hasLightIcon: true,

href: '/guides/getting-started/features',

description: 'A non-exhaustive list of features that Supabase provides for every project.'

},

{

title: 'Architecture',

hasLightIcon: true,

href: '/guides/getting-started/architecture',

description: "An overview of Supabase's architecture and product principles.",

},

{

title: 'Local Development',

hasLightIcon: true,

href: '/guides/cli/getting-started',

description: 'Use the Supabase CLI to develop locally and collaborate between teams.',

}

].map((resource) => {

return (

{resource.description}

)

})}

### Use cases

{[

{

title: 'AI, Vectors, and embeddings',

href: '/guides/ai#examples',

description: `Build AI-enabled applications using our Vector toolkit.`,

icon: '/docs/img/icons/openai_logo',

hasLightIcon: true,

},

{

title: 'Subscription Payments (SaaS)',

href: 'https://github.com/vercel/nextjs-subscription-payments#nextjs-subscription-payments-starter',

description: `Clone, deploy, and fully customize a SaaS subscription application with Next.js.`,

icon: '/docs/img/icons/nextjs-icon',

},

{

title: 'Partner Gallery',

href: 'https://github.com/supabase-community/partner-gallery-example#supabase-partner-gallery-example',

description: `Postgres full-text search, image storage, and more.`,

icon: '/docs/img/icons/nextjs-icon',

},

].map((item) => {

return (

{item.description}

)

})}

### Framework quickstarts

{[

{

title: 'React',

href: '/guides/getting-started/quickstarts/reactjs',

description:

'Learn how to create a Supabase project, add some sample data to your database, and query the data from a React app.',

icon: '/docs/img/icons/react-icon',

enabled: isFeatureEnabled('docs:framework_quickstarts'),

},

{

title: 'Next.js',

href: '/guides/getting-started/quickstarts/nextjs',

description:

'Learn how to create a Supabase project, add some sample data to your database, and query the data from a Next.js app.',

icon: '/docs/img/icons/nextjs-icon',

hasLightIcon: true,

enabled: isFeatureEnabled('docs:framework_quickstarts'),

},

{

title: 'Nuxt',

href: '/guides/getting-started/quickstarts/nuxtjs',

description:

'Learn how to create a Supabase project, add some sample data to your database, and query the data from a Nuxt app.',

icon: '/docs/img/icons/nuxt-icon',

enabled: isFeatureEnabled('docs:framework_quickstarts'),

},

{

title: 'Hono',

href: '/guides/getting-started/quickstarts/hono',

description:

'Learn how to create a Supabase project, add some sample data to your database, secure it with auth, and query the data from a Hono app.',

icon: '/docs/img/icons/hono-icon',

enabled: isFeatureEnabled('docs:framework_quickstarts'),

},

{

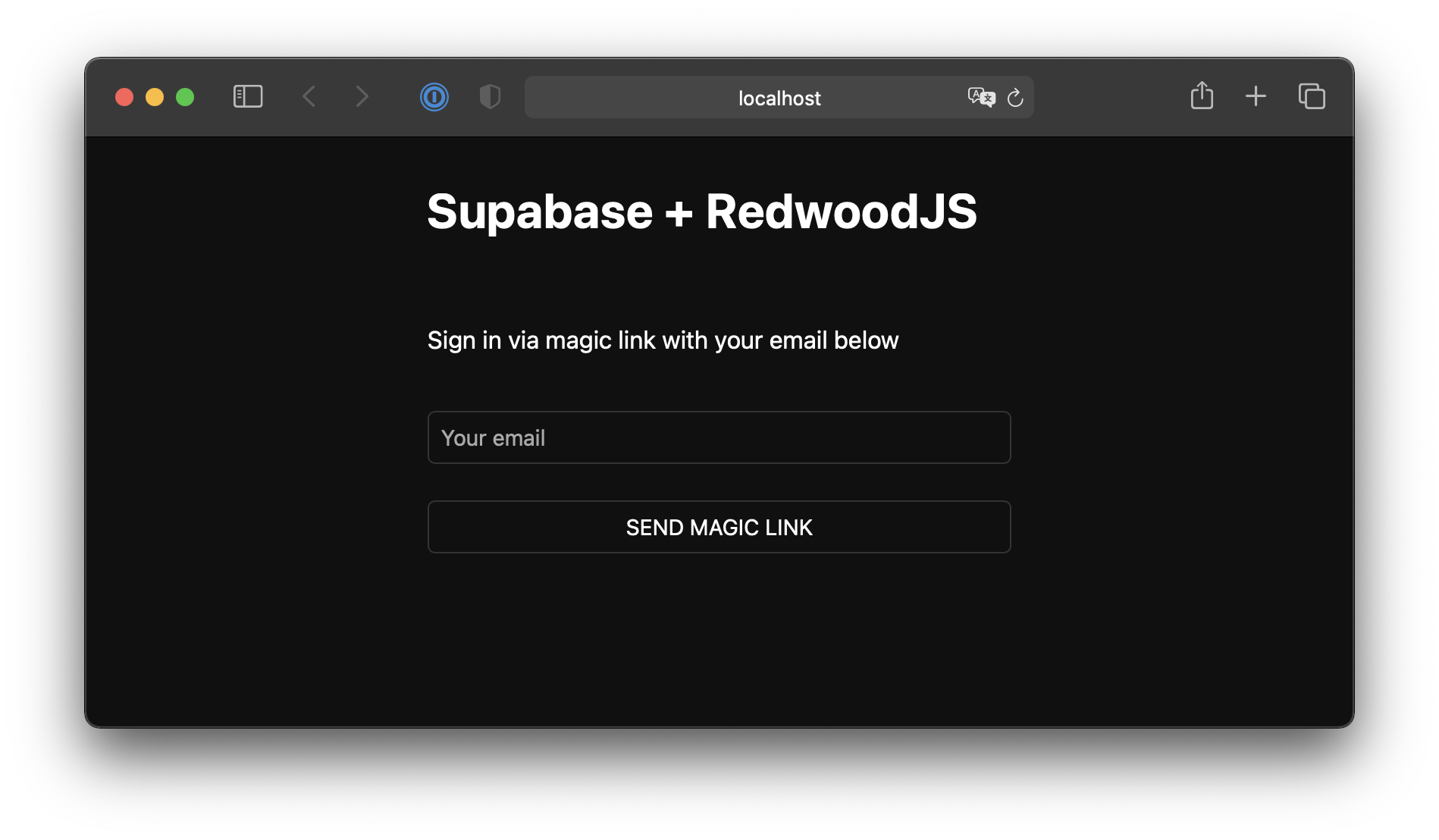

title: 'RedwoodJS',

href: '/guides/getting-started/quickstarts/redwoodjs',

description:

'Learn how to create a Supabase project, add some sample data to your database using Prisma migration and seeds, and query the data from a RedwoodJS app.',

icon: '/docs/img/icons/redwood-icon',

enabled: isFeatureEnabled('docs:framework_quickstarts'),

},

{

title: 'Flutter',

href: '/guides/getting-started/quickstarts/flutter',

description:

'Learn how to create a Supabase project, add some sample data to your database, and query the data from a Flutter app.',

icon: '/docs/img/icons/flutter-icon',

enabled: isFeatureEnabled('sdk:dart'),

},

{

title: 'iOS SwiftUI',

href: '/guides/getting-started/quickstarts/ios-swiftui',

description:

'Learn how to create a Supabase project, add some sample data to your database, and query the data from an iOS app.',

icon: '/docs/img/icons/swift-icon',

enabled: isFeatureEnabled('sdk:swift'),

},

{

title: 'Android Kotlin',

href: '/guides/getting-started/quickstarts/kotlin',

description:

'Learn how to create a Supabase project, add some sample data to your database, and query the data from an Android Kotlin app.',

icon: '/docs/img/icons/kotlin-icon',

enabled: isFeatureEnabled('sdk:kotlin'),

},

{

title: 'SvelteKit',

href: '/guides/getting-started/quickstarts/sveltekit',

description:

'Learn how to create a Supabase project, add some sample data to your database, and query the data from a SvelteKit app.',

icon: '/docs/img/icons/svelte-icon',

enabled: isFeatureEnabled('docs:framework_quickstarts'),

},

{

title: 'SolidJS',

href: '/guides/getting-started/quickstarts/solidjs',

description:

'Learn how to create a Supabase project, add some sample data to your database, and query the data from a SolidJS app.',

icon: '/docs/img/icons/solidjs-icon',

enabled: isFeatureEnabled('docs:framework_quickstarts'),

},

{

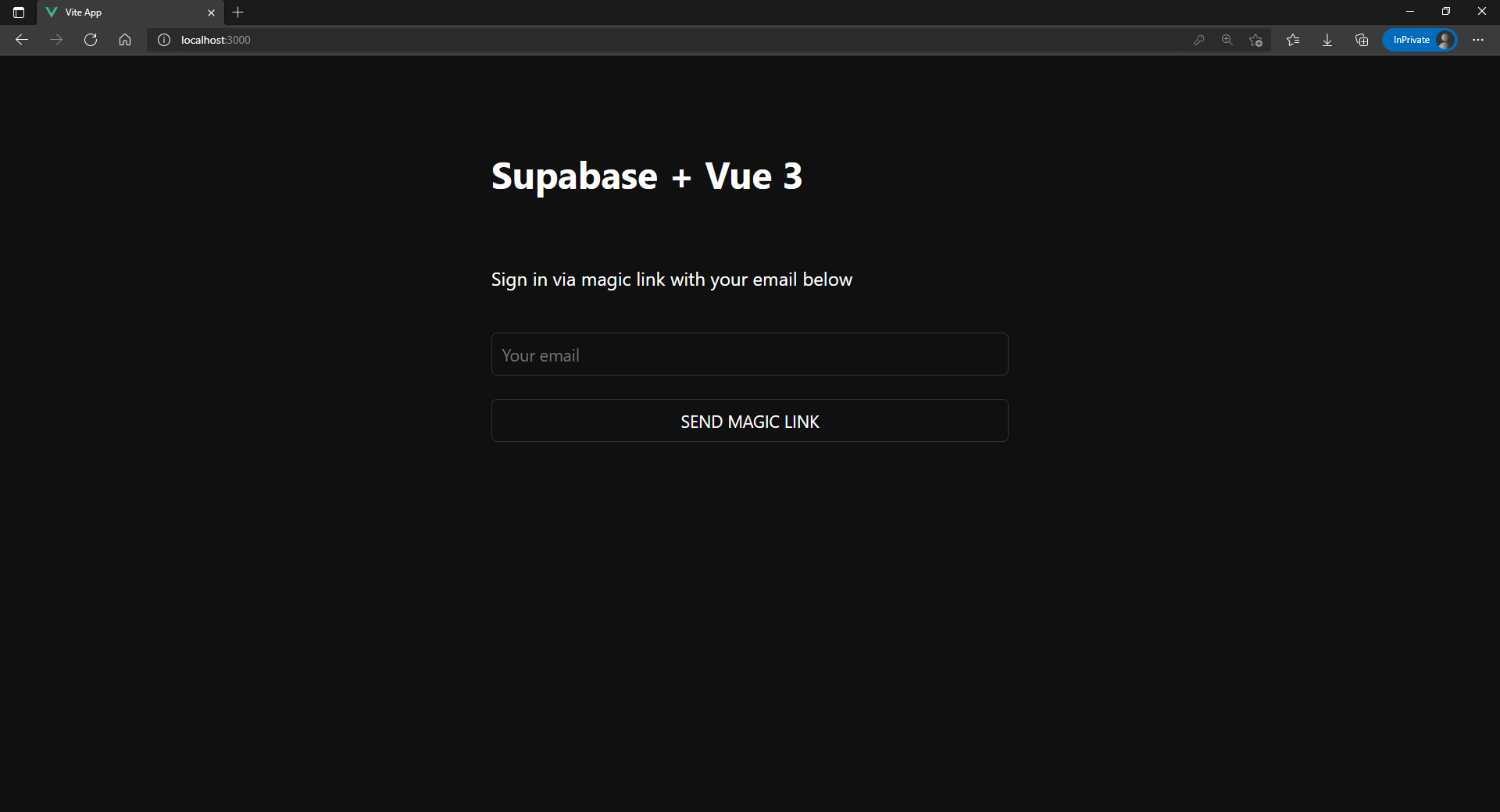

title: 'Vue',

href: '/guides/getting-started/quickstarts/vue',

description:

'Learn how to create a Supabase project, add some sample data to your database, and query the data from a Vue app.',

icon: '/docs/img/icons/vuejs-icon',

enabled: isFeatureEnabled('docs:framework_quickstarts'),

},

{

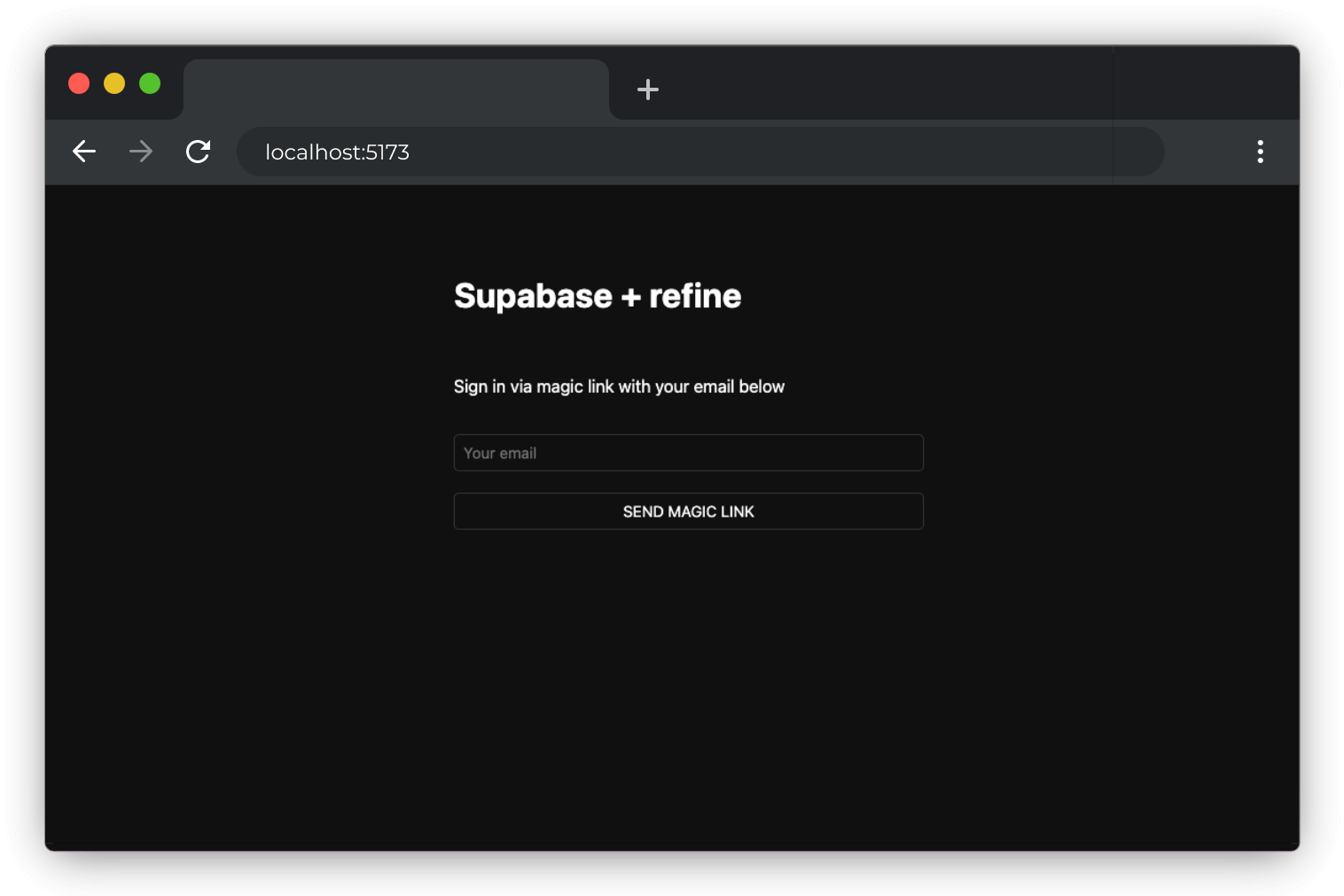

title: 'Refine',

href: '/guides/getting-started/quickstarts/refine',

description:

'Learn how to create a Supabase project, add some sample data to your database, and query the data from a Refine app.',

icon: '/docs/img/icons/refine-icon',

enabled: isFeatureEnabled('docs:framework_quickstarts'),

},

]

.filter((item) => item.enabled !== false)

.map((item) => {

return (

{item.description}

)

})}

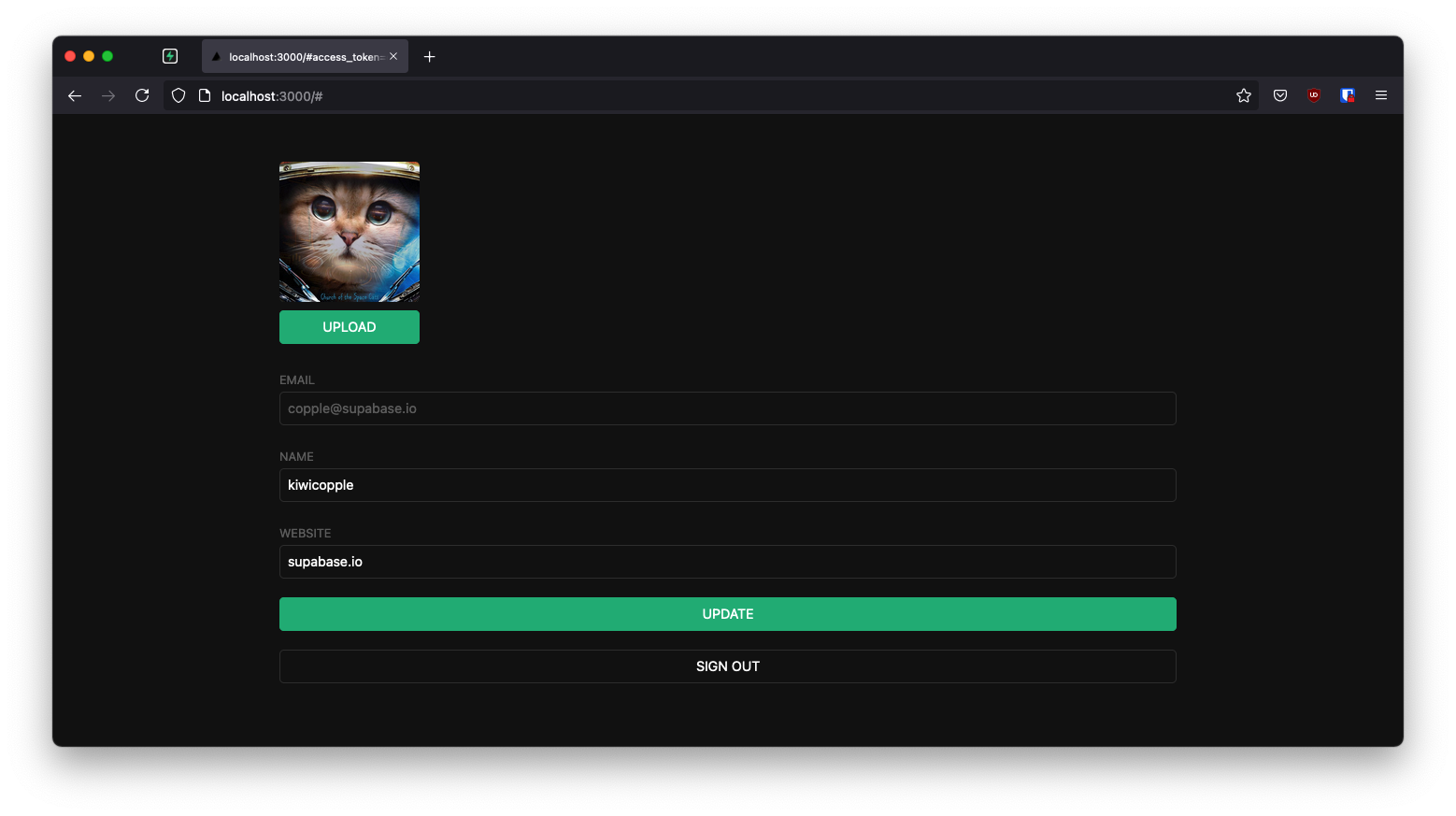

### Web app demos

{

[

{

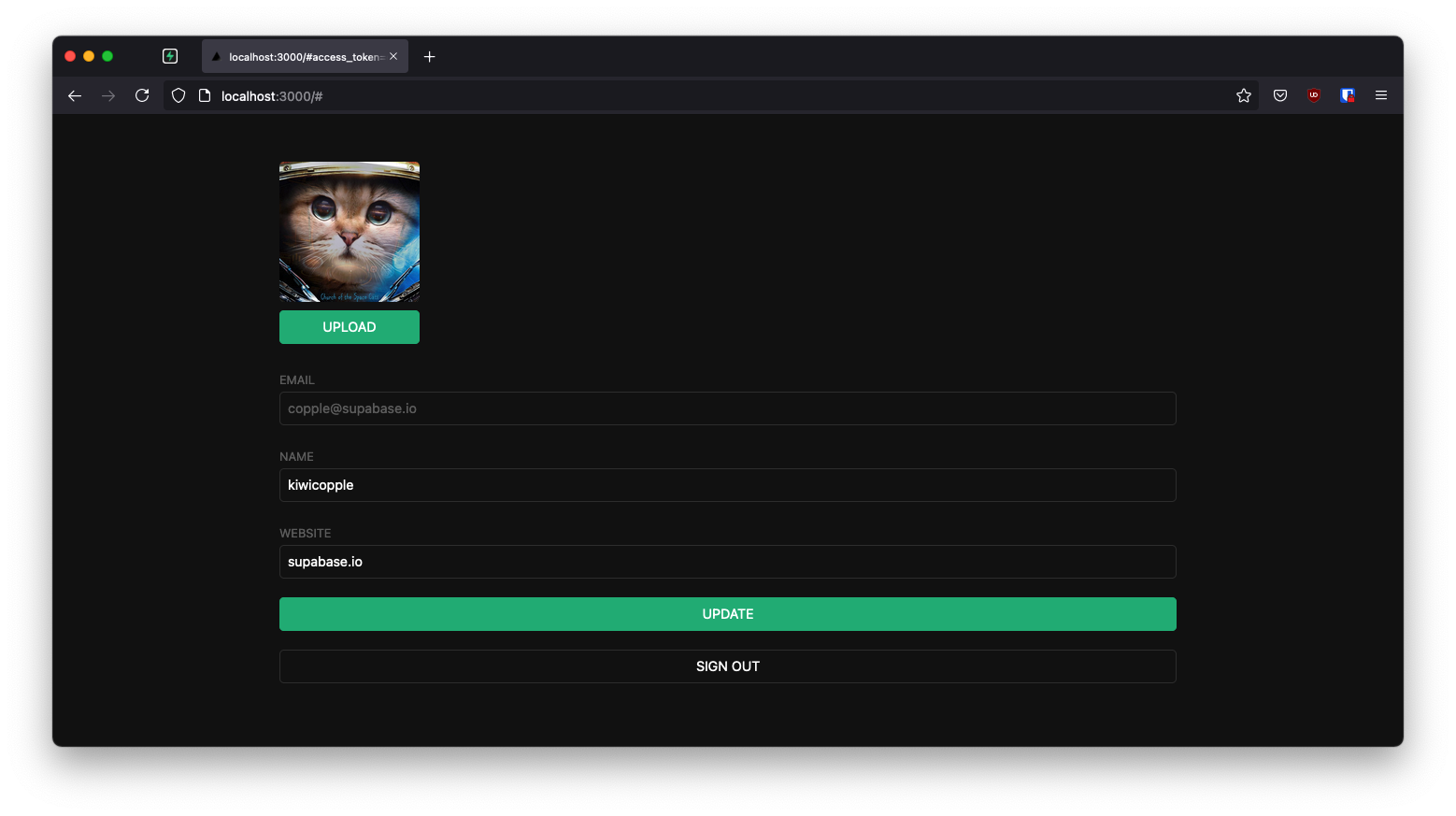

title: 'Next.js',

href: '/guides/getting-started/tutorials/with-nextjs',

description:

'Learn how to build a user management app with Next.js and Supabase Database, Auth, and Storage functionality.',

icon: '/docs/img/icons/nextjs-icon',

hasLightIcon: true,

},

{

title: 'React',

href: '/guides/getting-started/tutorials/with-react',

description:

'Learn how to build a user management app with React and Supabase Database, Auth, and Storage functionality.',

icon: '/docs/img/icons/react-icon',

},

{

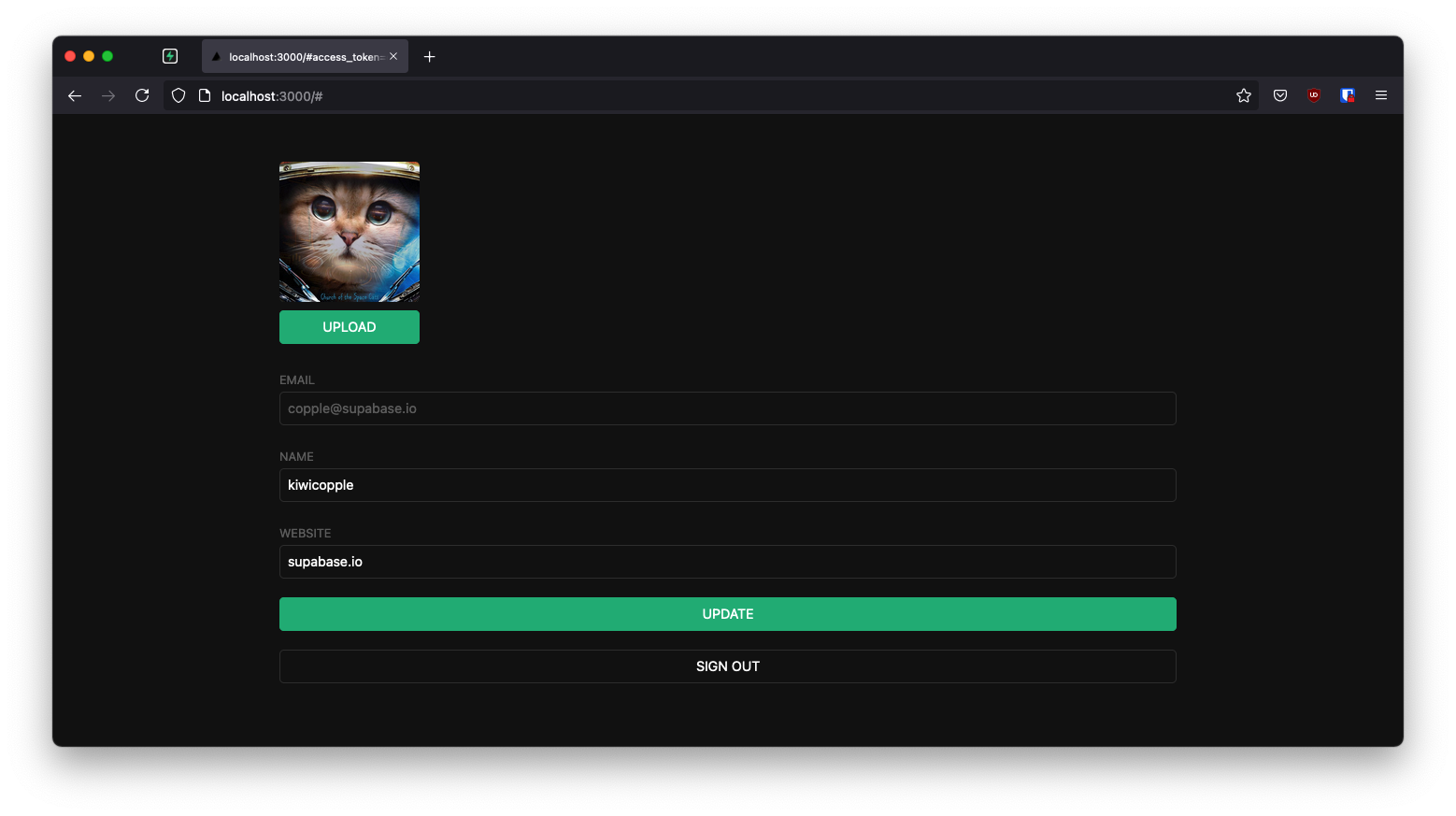

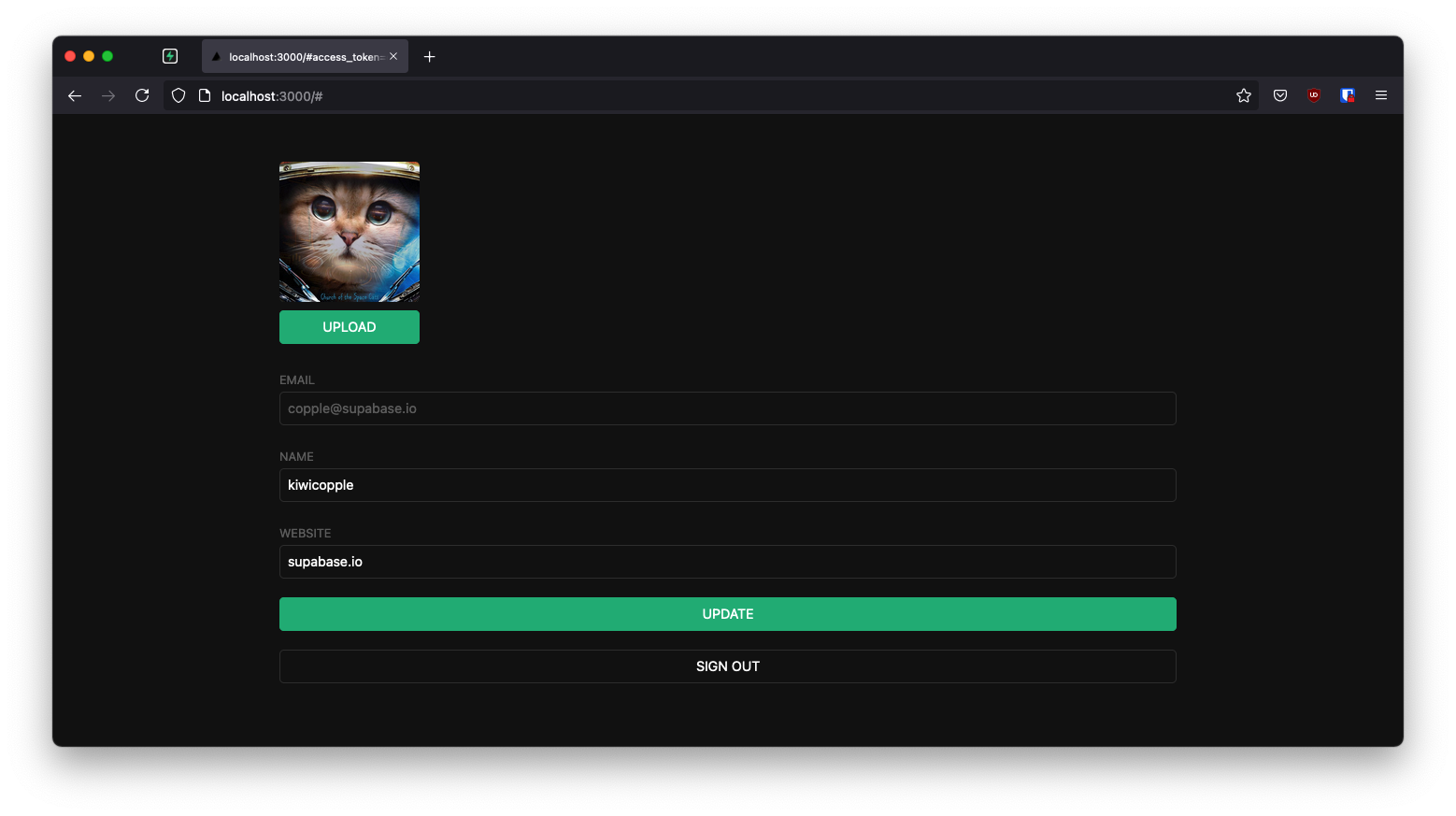

title: 'Vue 3',

href: '/guides/getting-started/tutorials/with-vue-3',

description:

'Learn how to build a user management app with Vue 3 and Supabase Database, Auth, and Storage functionality.',

icon: '/docs/img/icons/vuejs-icon',

},

{

title: 'Nuxt 3',

href: '/guides/getting-started/tutorials/with-nuxt-3',

description:

'Learn how to build a user management app with Nuxt 3 and Supabase Database, Auth, and Storage functionality.',

icon: '/docs/img/icons/nuxt-icon',

},

{

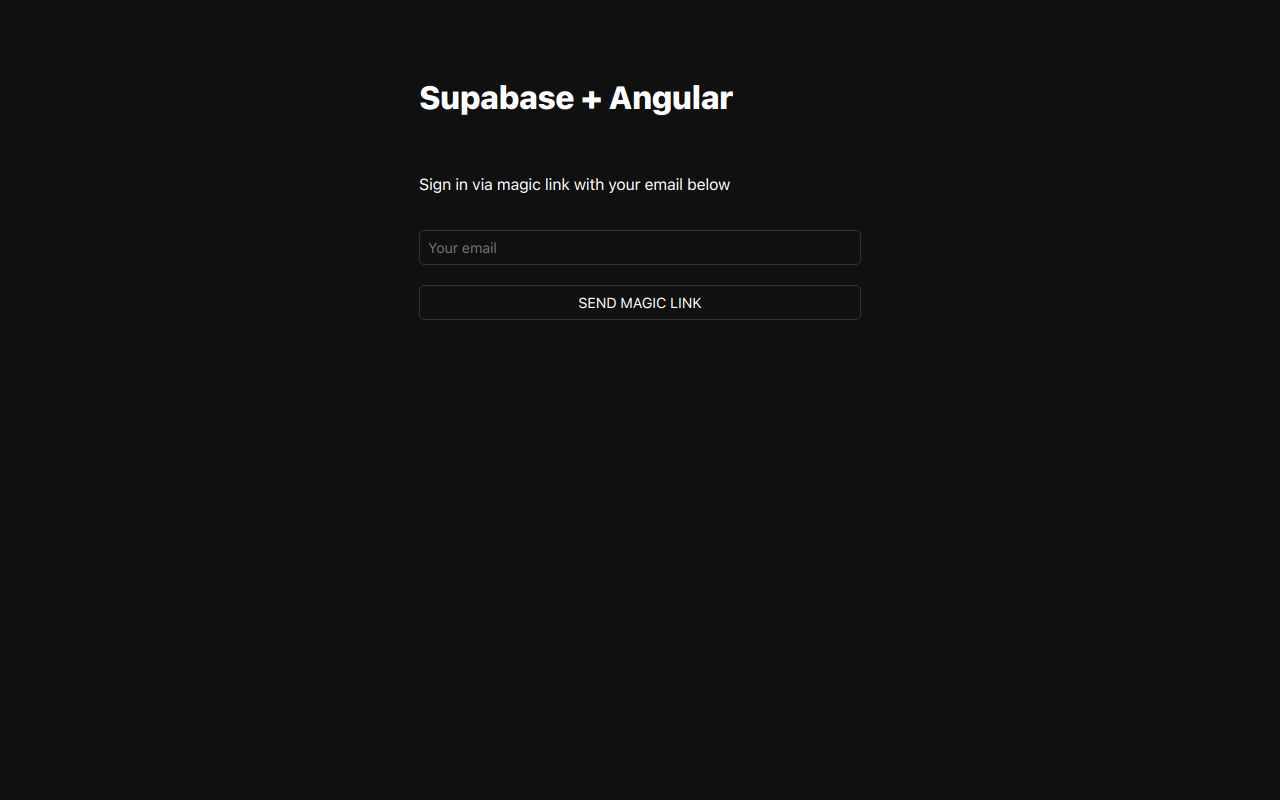

title: 'Angular',

href: '/guides/getting-started/tutorials/with-angular',

description:

'Learn how to build a user management app with Angular and Supabase Database, Auth, and Storage functionality.',

icon: '/docs/img/icons/angular-icon',

},

{

title: 'RedwoodJS',

href: '/guides/getting-started/tutorials/with-redwoodjs',

description:

'Learn how to build a user management app with RedwoodJS and Supabase Database, Auth, and Storage functionality.',

icon: '/docs/img/icons/redwood-icon',

},

{

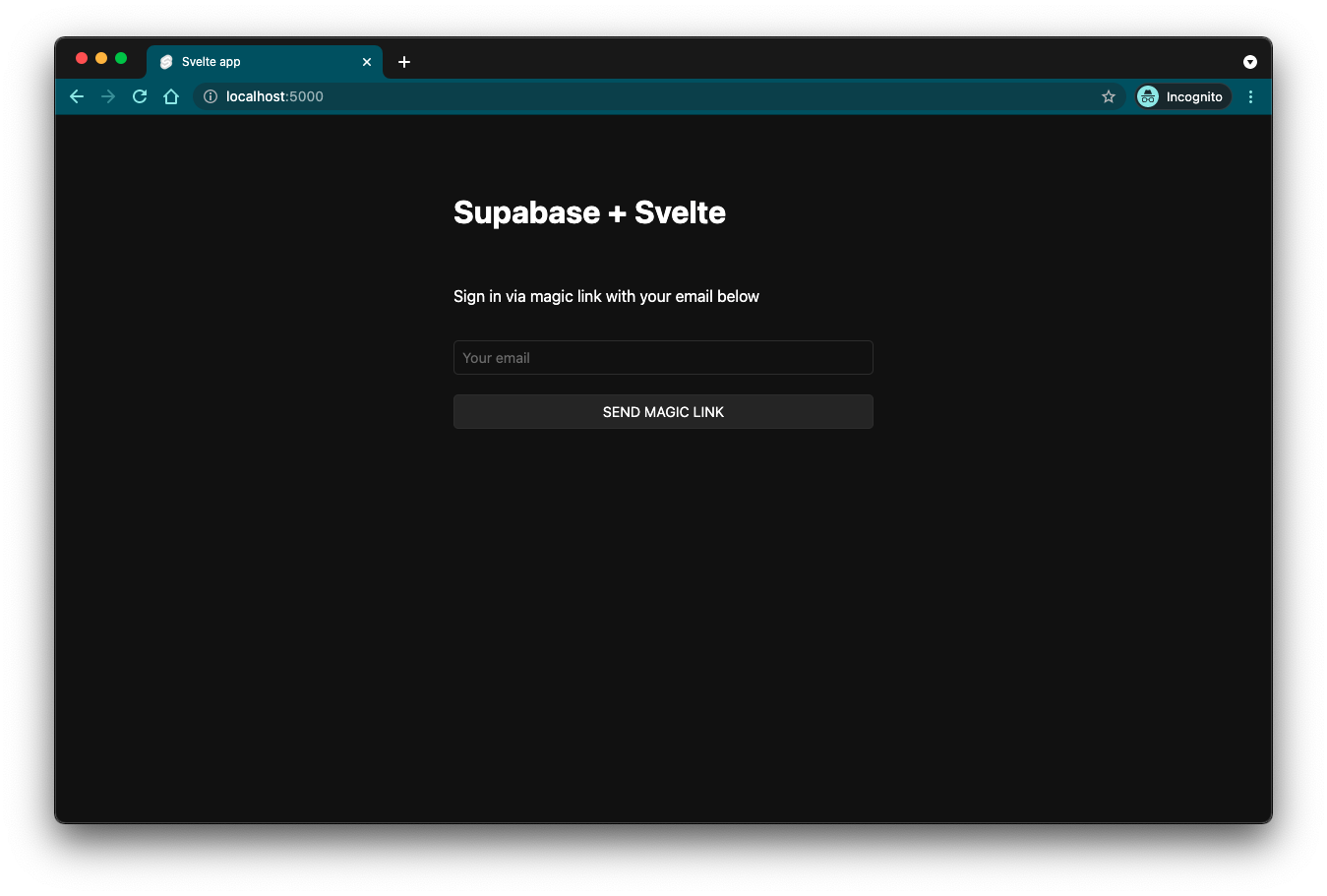

title: 'Svelte',

href: '/guides/getting-started/tutorials/with-svelte',

description:

'Learn how to build a user management app with Svelte and Supabase Database, Auth, and Storage functionality.',

icon: '/docs/img/icons/svelte-icon',

},

{

title: 'SvelteKit',

href: '/guides/getting-started/tutorials/with-sveltekit',

description:

'Learn how to build a user management app with SvelteKit and Supabase Database, Auth, and Storage functionality.',

icon: '/docs/img/icons/svelte-icon',

},

{

title: 'Refine',

href: '/guides/getting-started/tutorials/with-refine',

description:

'Learn how to build a user management app with Refine and Supabase Database, Auth, and Storage functionality.',

icon: '/docs/img/icons/refine-icon',

}

]

.map((item) => {

return (

{item.description}

)

})}

### Mobile tutorials

{[

{

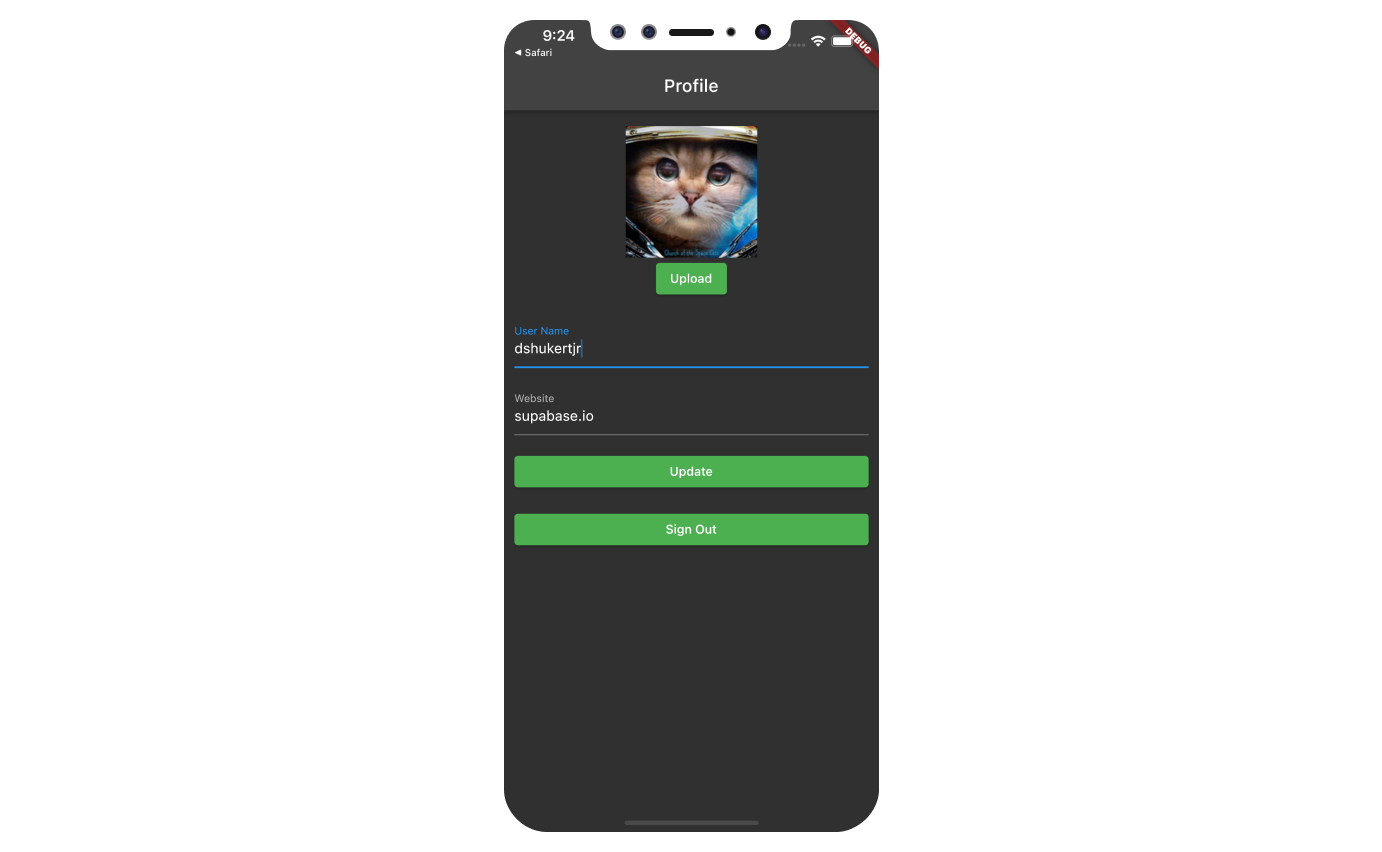

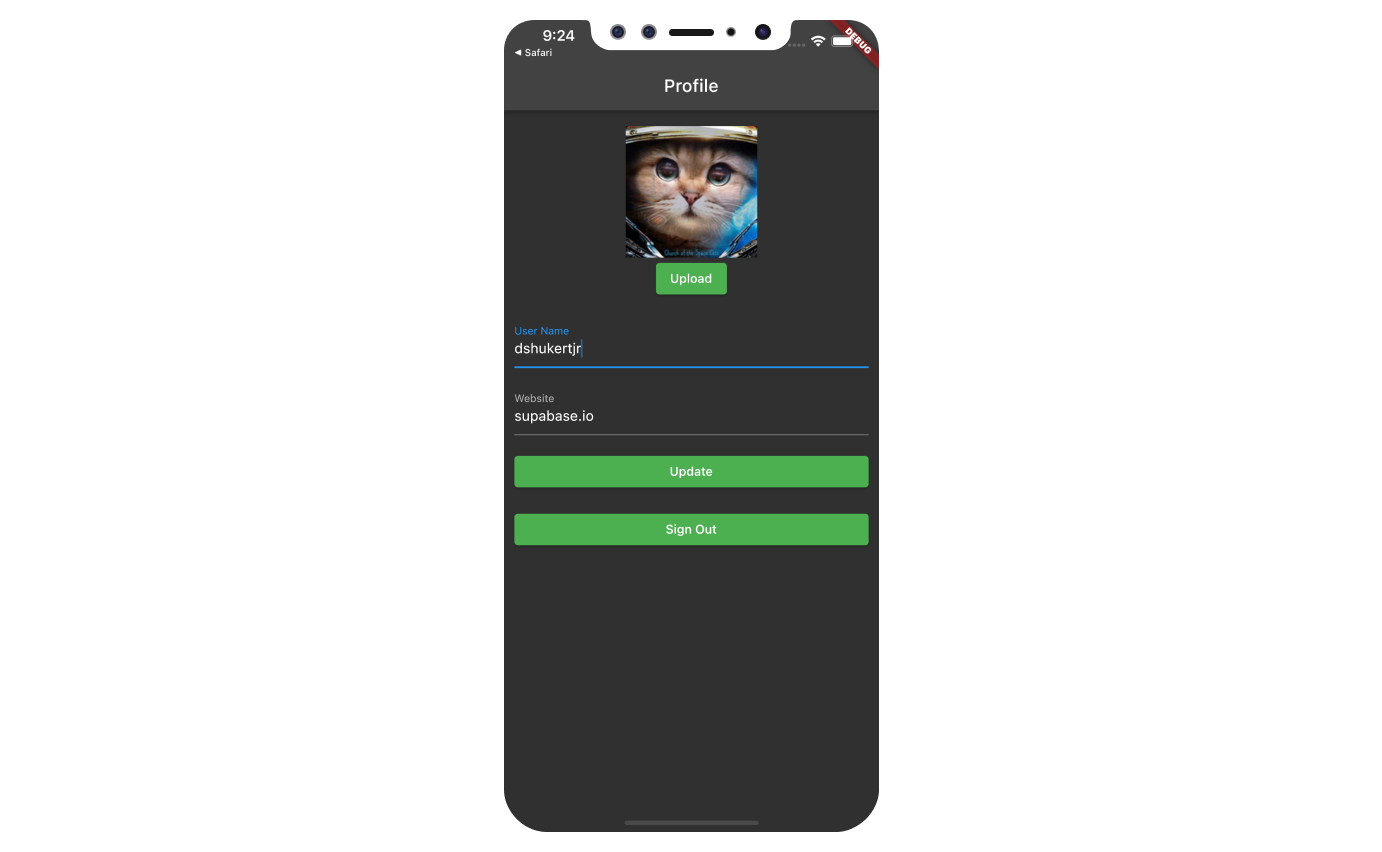

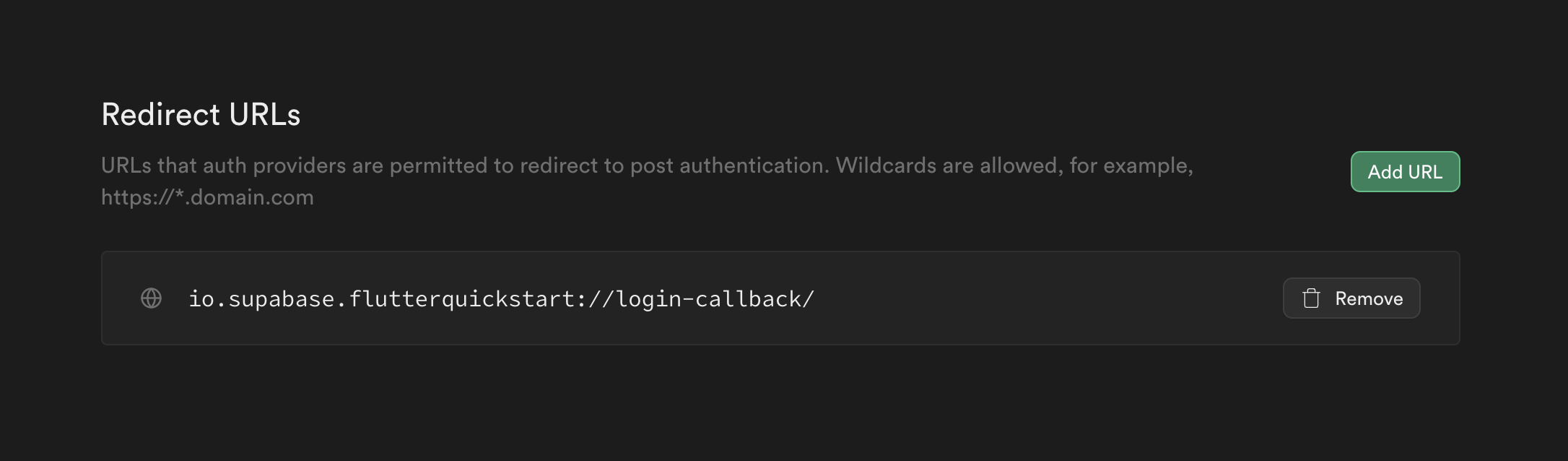

title: 'Flutter',

href: '/guides/getting-started/tutorials/with-flutter',

description:

'Learn how to build a user management app with Flutter and Supabase Database, Auth, and Storage functionality.',

icon: '/docs/img/icons/flutter-icon',

enabled: isFeatureEnabled('sdk:dart')

},

{

title: 'Expo React Native',

href: '/guides/getting-started/tutorials/with-expo-react-native',

description:

'Learn how to build a user management app with Expo React Native and Supabase Database, Auth, and Storage functionality.',

icon: '/docs/img/icons/expo-icon',

hasLightIcon: true,

enabled: true

},

{

title: 'Expo React Native Social Auth',

href: '/guides/getting-started/tutorials/with-expo-react-native-social-auth',

description:

'Learn how to implement social authentication in an app with Expo React Native and Supabase Database and Auth functionality.',

icon: '/docs/img/icons/expo-icon',

hasLightIcon: true

},

{

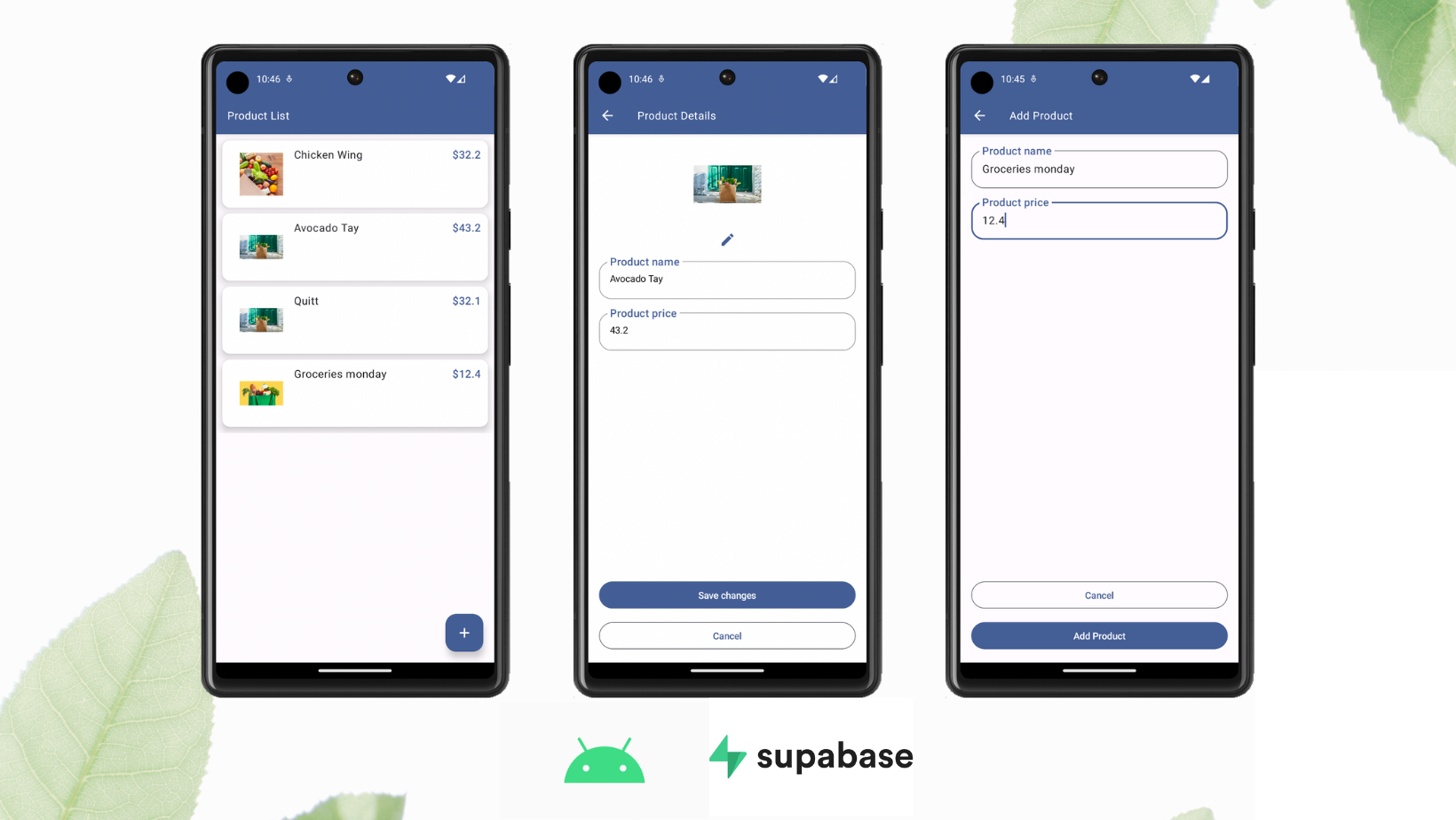

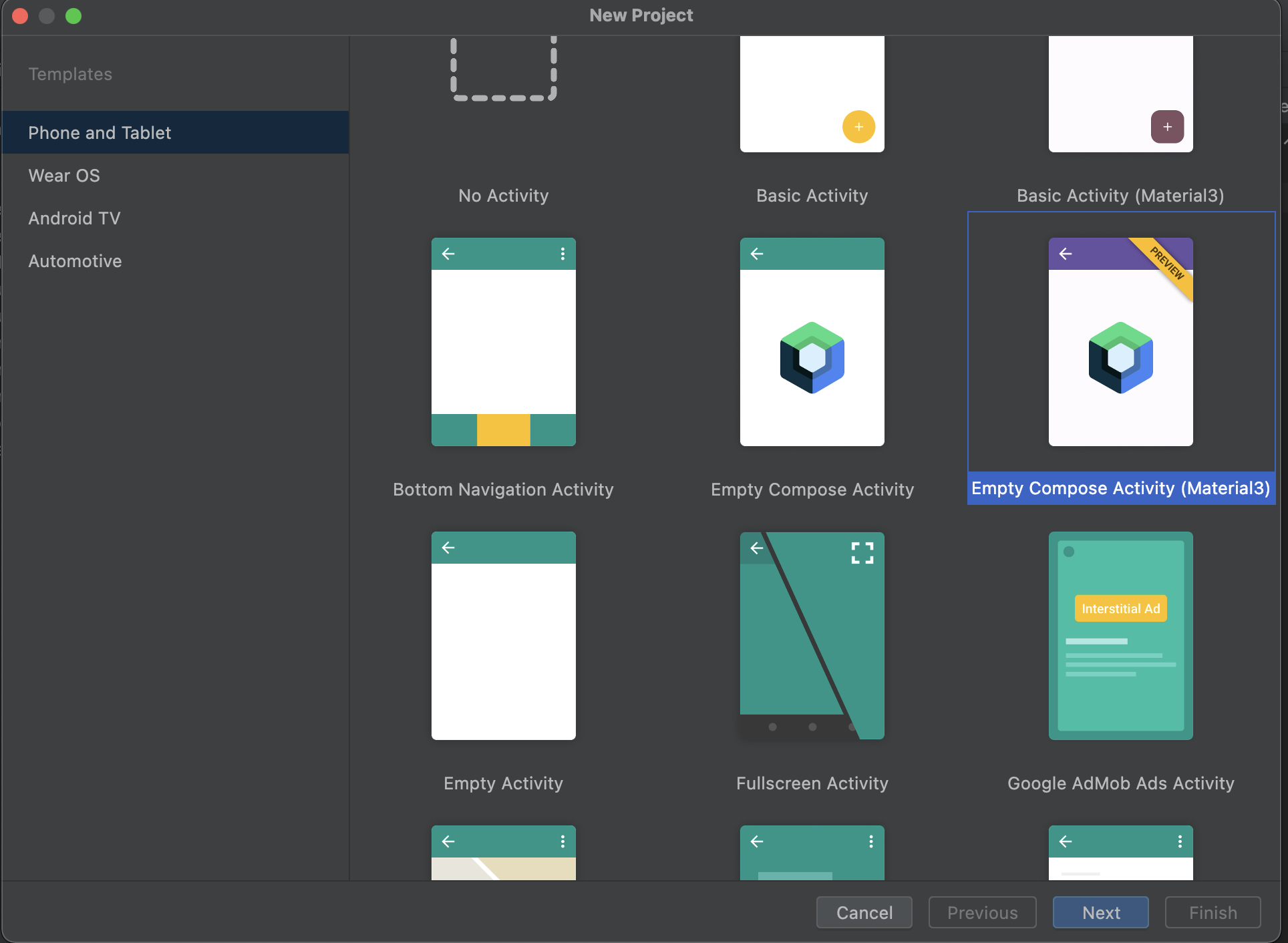

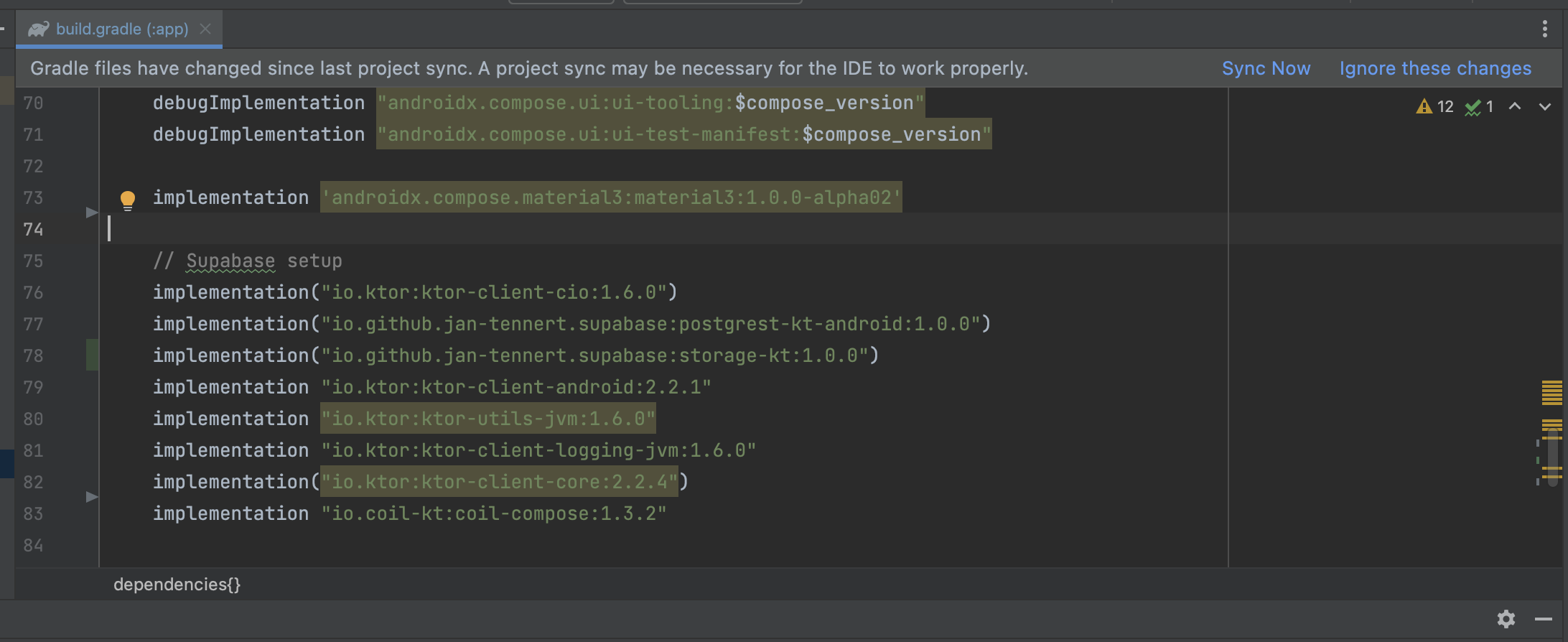

title: 'Android Kotlin',

href: '/guides/getting-started/tutorials/with-kotlin',

description:

'Learn how to build a product management app with Android and Supabase Database, Auth, and Storage functionality.',

icon: '/docs/img/icons/kotlin-icon',

enabled: isFeatureEnabled('sdk:kotlin')

},

{

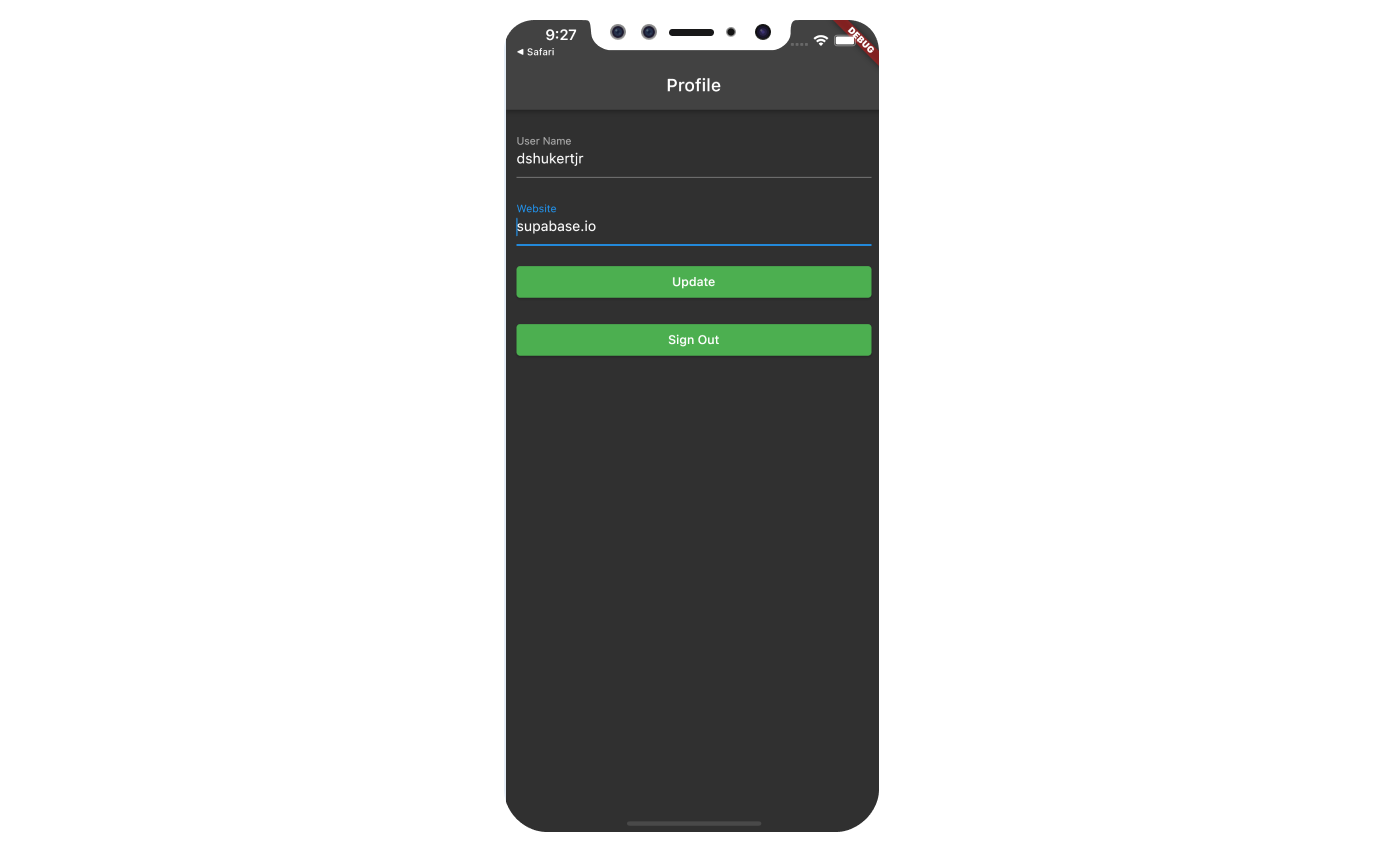

title: 'iOS Swift',

href: '/guides/getting-started/tutorials/with-swift',

description:

'Learn how to build a user management app with iOS and Supabase Database, Auth, and Storage functionality.',

icon: '/docs/img/icons/swift-icon',

enabled: isFeatureEnabled('sdk:swift')

},

{

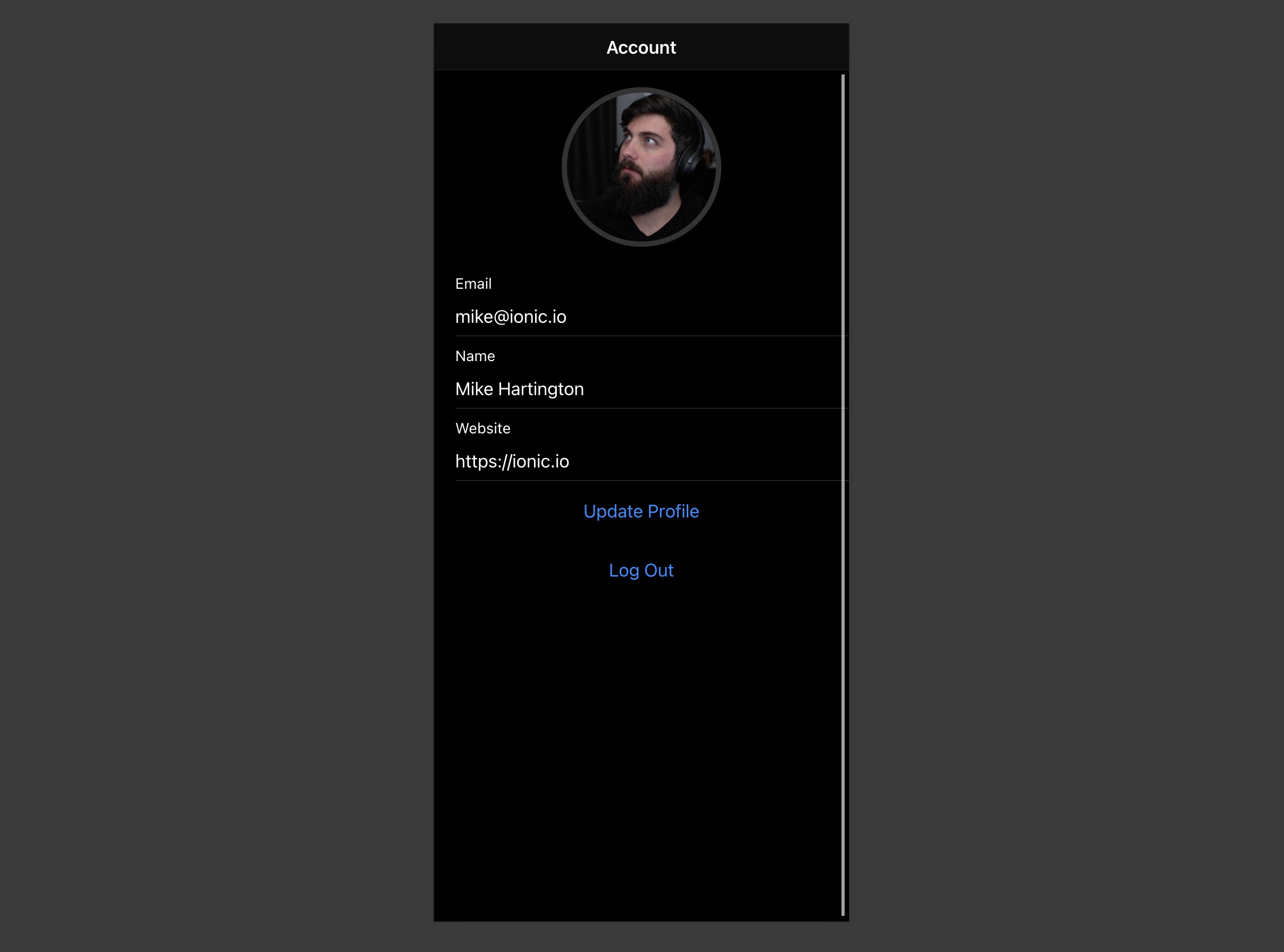

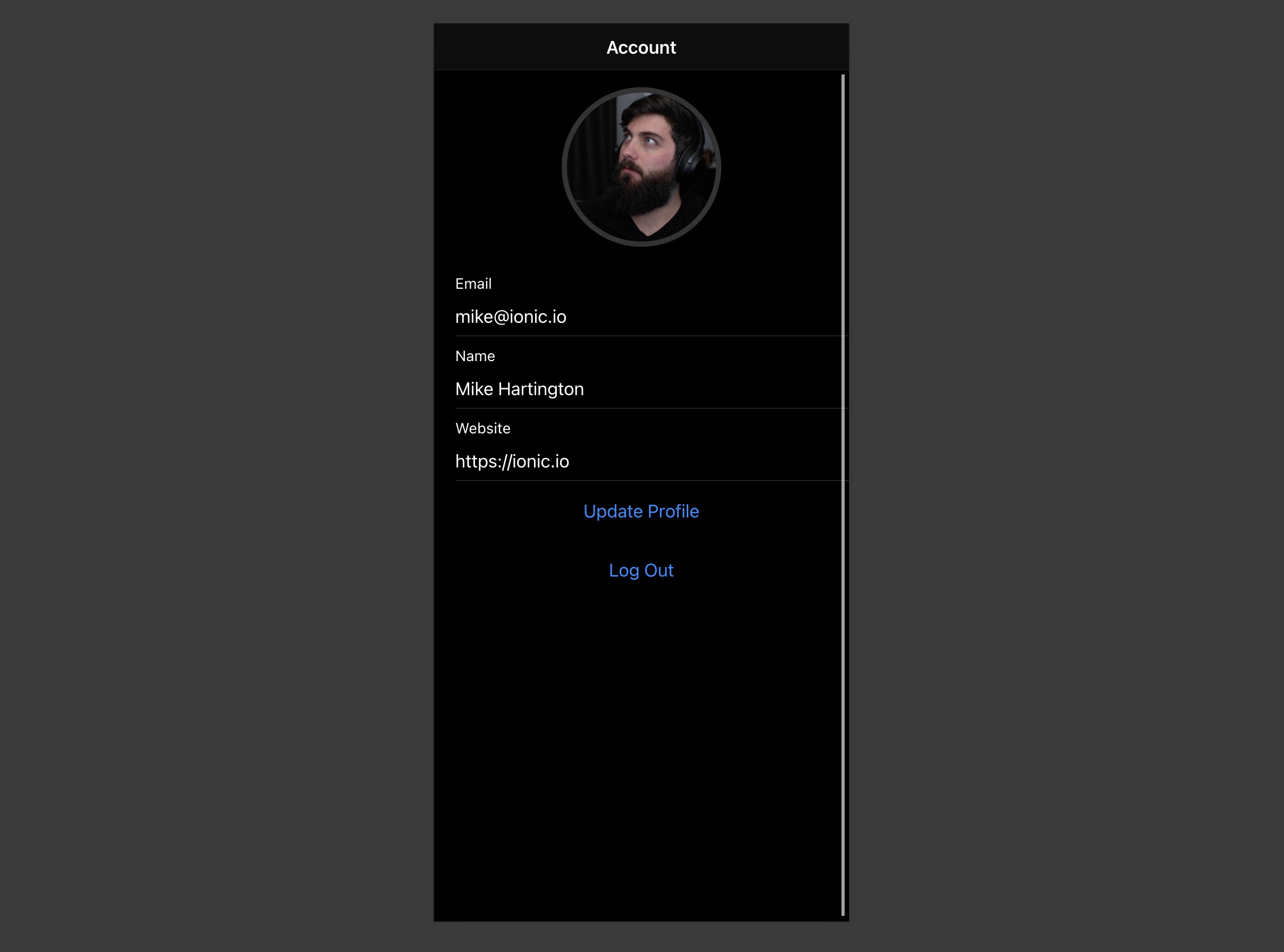

title: 'Ionic React',

href: '/guides/getting-started/tutorials/with-ionic-react',

description:

'Learn how to build a user management app with Ionic React and Supabase Database, Auth, and Storage functionality.',

icon: '/docs/img/icons/ionic-icon',

enabled: true

},

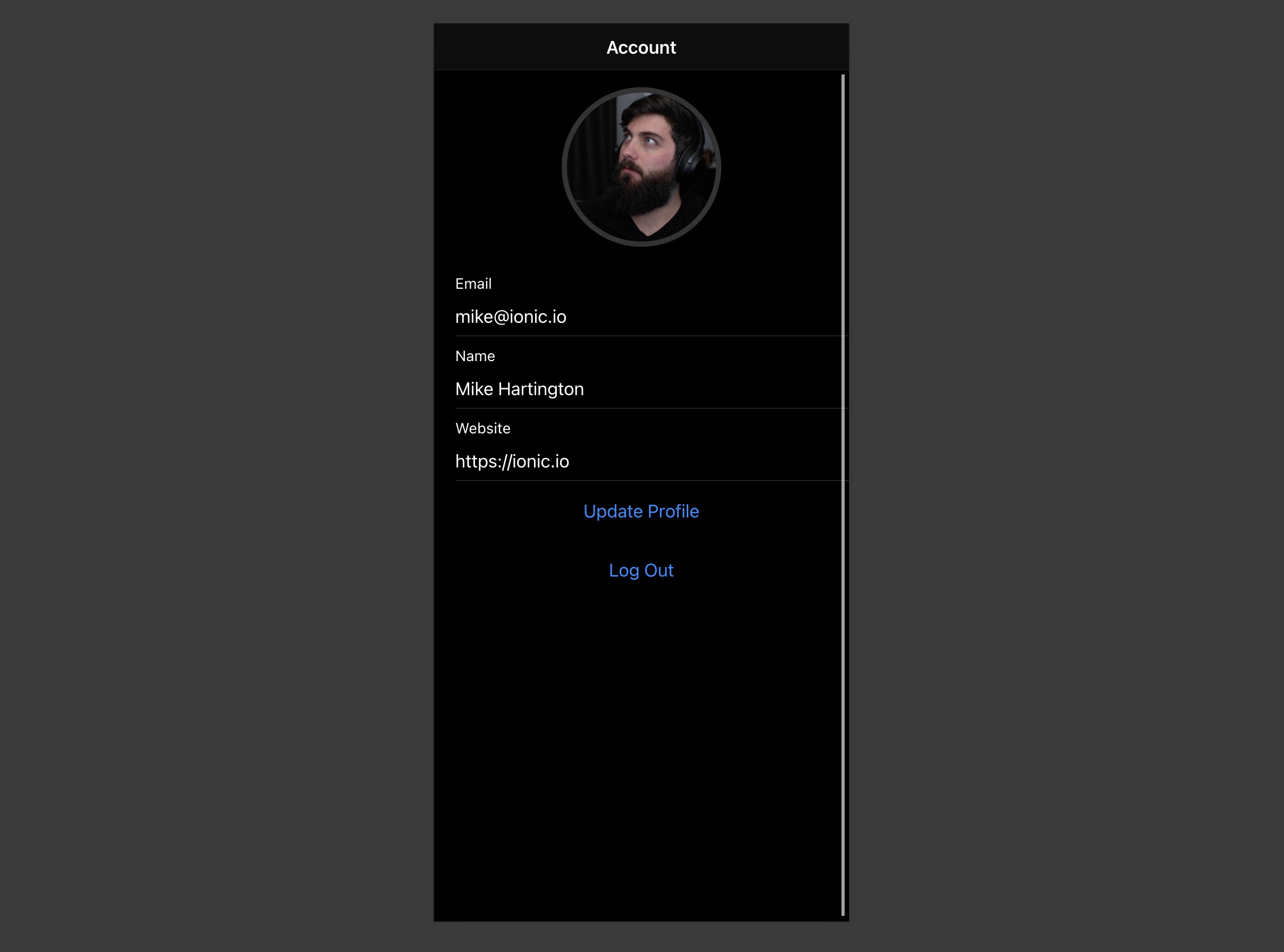

{

title: 'Ionic Vue',

href: '/guides/getting-started/tutorials/with-ionic-vue',

description:

'Learn how to build a user management app with Ionic Vue and Supabase Database, Auth, and Storage functionality.',

icon: '/docs/img/icons/ionic-icon',

enabled: true

},

{

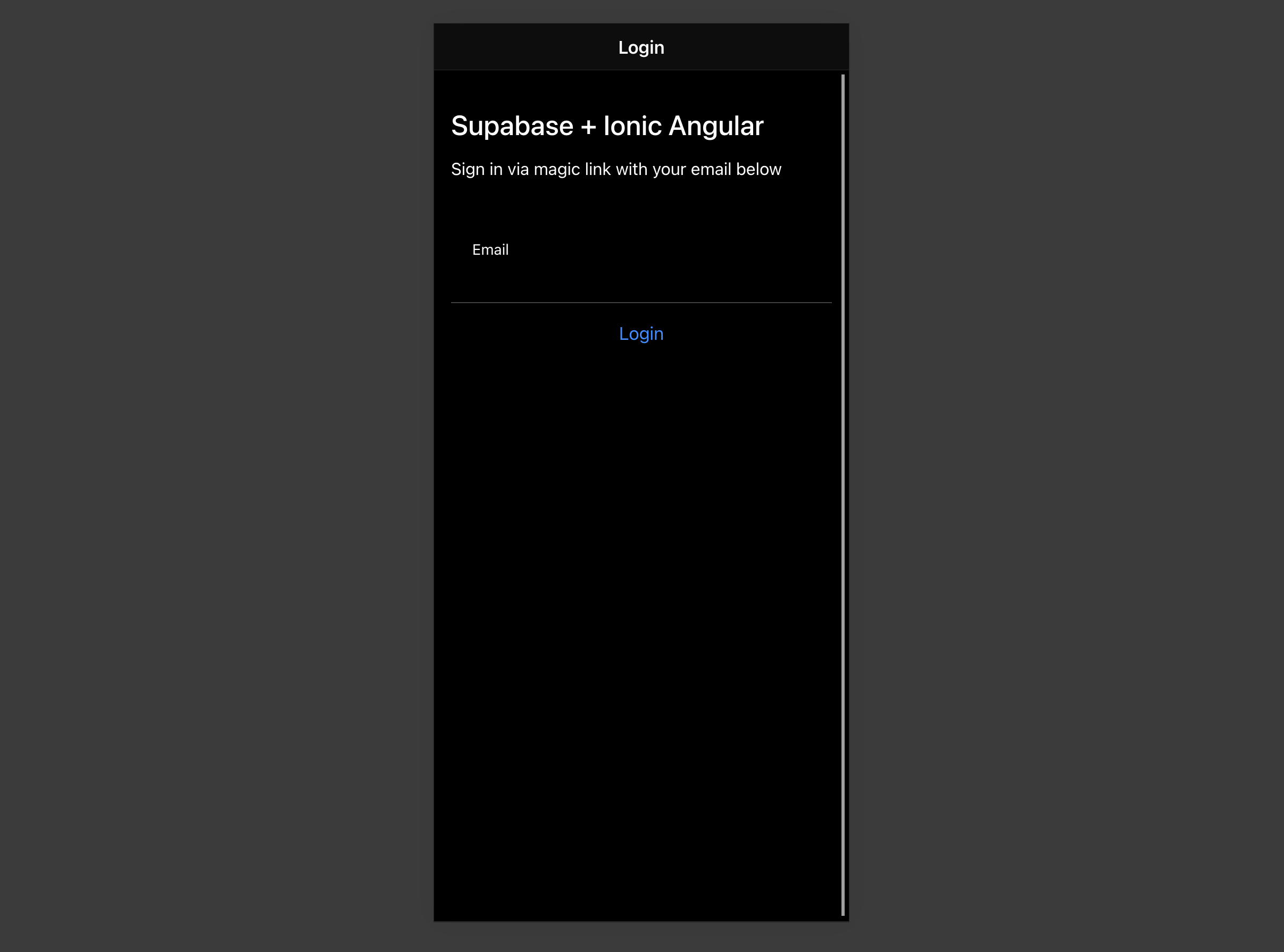

title: 'Ionic Angular',

href: '/guides/getting-started/tutorials/with-ionic-angular',

description:

'Learn how to build a user management app with Ionic Angular and Supabase Database, Auth, and Storage functionality.',

icon: '/docs/img/icons/ionic-icon',

enabled: true

}

]

.filter((item) => item.enabled !== false)

.map((item) => {

return (

{item.description}

)

})}

# Integrations

Supabase integrates with many of your favorite third-party services.

## Vercel Marketplace

Create and manage your Supabase projects directly through Vercel. [Get started with Vercel](/docs/guides/integrations/vercel-marketplace).

## Supabase Marketplace

Browse tools for extending your Supabase project. [Browse the Supabase Marketplace](/partners/integrations).

# Local Development & CLI

Learn how to develop locally and use the Supabase CLI

Develop locally while running the Supabase stack on your machine.

As a prerequisite, you must install a container runtime compatible with Docker APIs.

* [Docker Desktop](https://docs.docker.com/desktop/) (macOS, Windows, Linux)

* [Rancher Desktop](https://rancherdesktop.io/) (macOS, Windows, Linux)

* [Podman](https://podman.io/) (macOS, Windows, Linux)

* [OrbStack](https://orbstack.dev/) (macOS)

## Quickstart

1. Install the Supabase CLI:

```sh

npm install supabase --save-dev

```

```sh

NODE_OPTIONS=--no-experimental-fetch yarn add supabase --dev

```

```sh

pnpm add supabase --save-dev --allow-build=supabase

```

The `--allow-build=supabase` flag is required on pnpm version 10 or higher. If you're using an older version of pnpm, omit this flag.

```sh

brew install supabase/tap/supabase

```

2. In your repo, initialize the Supabase project:

```sh

npx supabase init

```

```sh

yarn supabase init

```

```sh

pnpx supabase init

```

```sh

supabase init

```

3. Start the Supabase stack:

```sh

npx supabase start

```

```sh

yarn supabase start

```

```sh

pnpx supabase start

```

```sh

supabase start

```

4. View your local Supabase instance at [http://localhost:54323](http://localhost:54323).

If your local development machine is connected to an untrusted public network, you should create a separate docker network and bind to 127.0.0.1 before starting the local development stack. This restricts network access to only your localhost machine.

```sh

docker network create -o 'com.docker.network.bridge.host_binding_ipv4=127.0.0.1' local-network

npx supabase start --network-id local-network

```

You should never expose your local development stack publicly.

## Local development

Local development with Supabase allows you to work on your projects in a self-contained environment on your local machine. Working locally has several advantages:

1. Faster development: You can make changes and see results instantly without waiting for remote deployments.

2. Offline work: You can continue development even without an internet connection.

3. Cost-effective: Local development is free and doesn't consume your project's quota.

4. Enhanced privacy: Sensitive data remains on your local machine during development.

5. Easy testing: You can experiment with different configurations and features without affecting your production environment.

To get started with local development, you'll need to install the [Supabase CLI](#cli) and Docker. The Supabase CLI allows you to start and manage your local Supabase stack, while Docker is used to run the necessary services.

Once set up, you can initialize a new Supabase project, start the local stack, and begin developing your application using local Supabase services. This includes access to a local Postgres database, Auth, Storage, and other Supabase features.

## CLI

The Supabase CLI is a powerful tool that enables developers to manage their Supabase projects directly from the terminal. It provides a suite of commands for various tasks, including:

* Setting up and managing local development environments

* Generating TypeScript types for your database schema

* Handling database migrations

* Managing environment variables and secrets

* Deploying your project to the Supabase platform

With the CLI, you can streamline your development workflow, automate repetitive tasks, and maintain consistency across different environments. It's an essential tool for both local development and CI/CD pipelines.

See the [CLI Getting Started guide](/docs/guides/local-development/cli/getting-started) for more information.

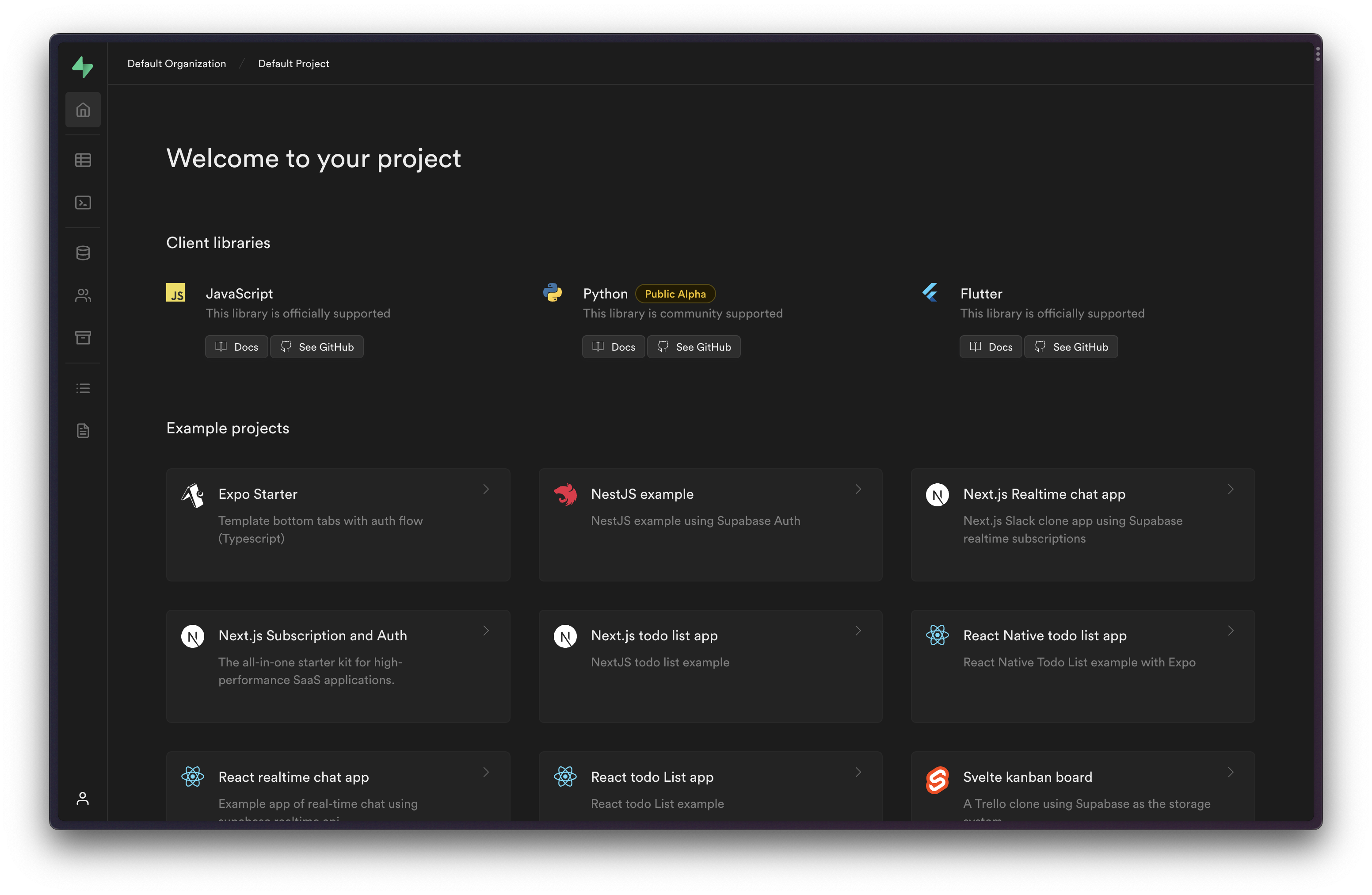

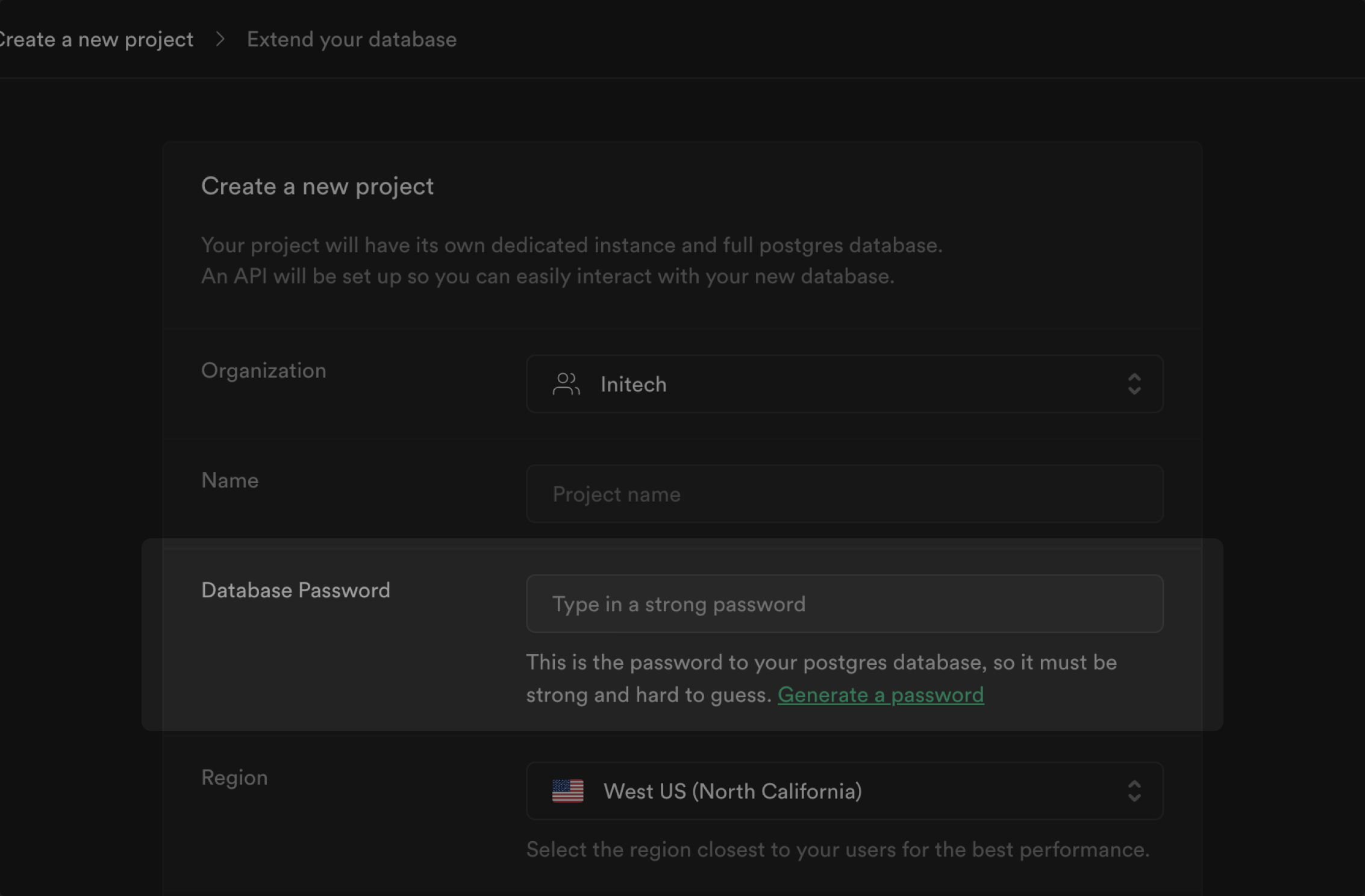

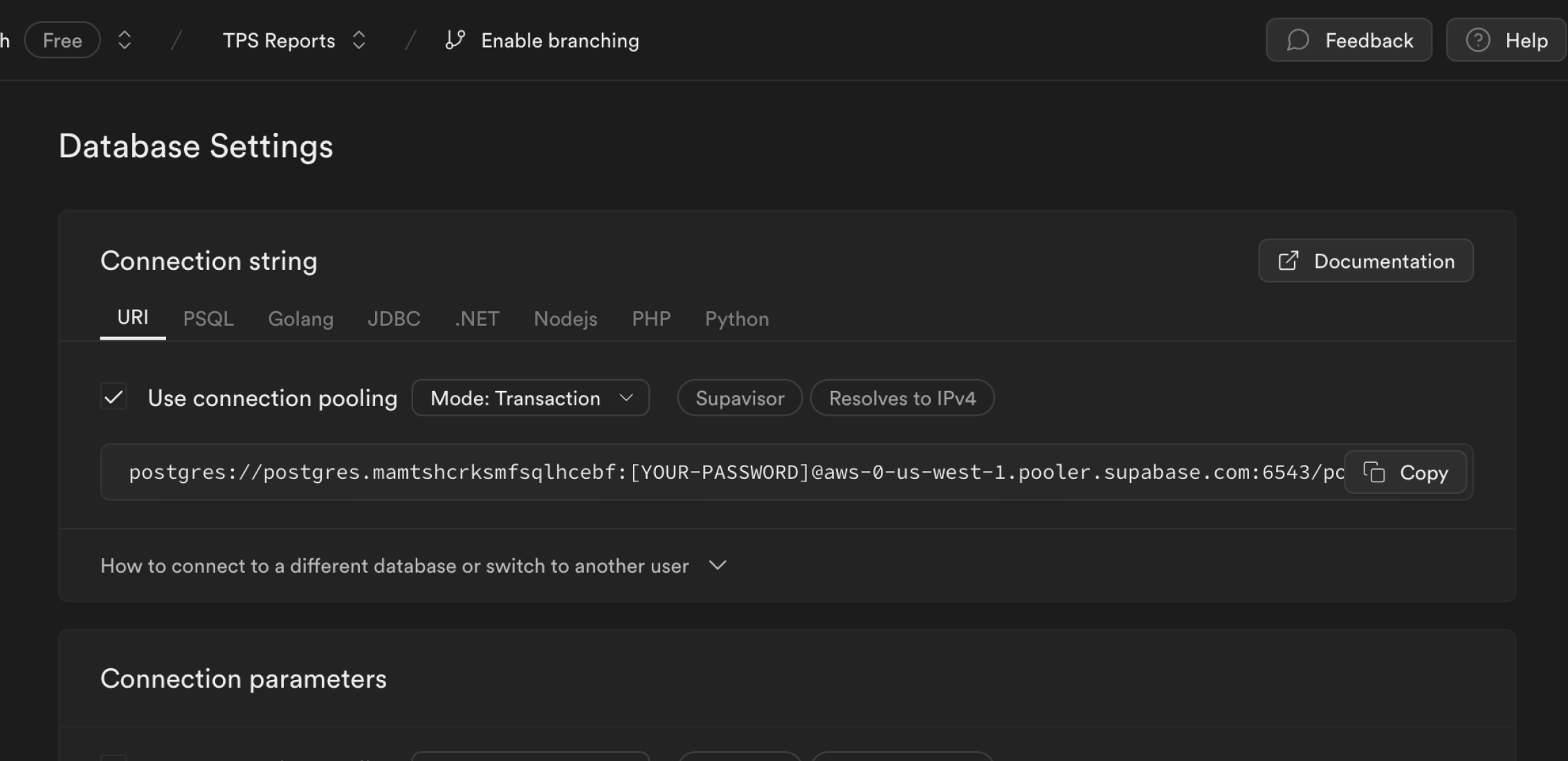

# Supabase Platform

Supabase is a hosted platform which makes it very simple to get started without needing to manage any infrastructure.

Visit [supabase.com/dashboard](/dashboard) and sign in to start creating projects.

## Projects

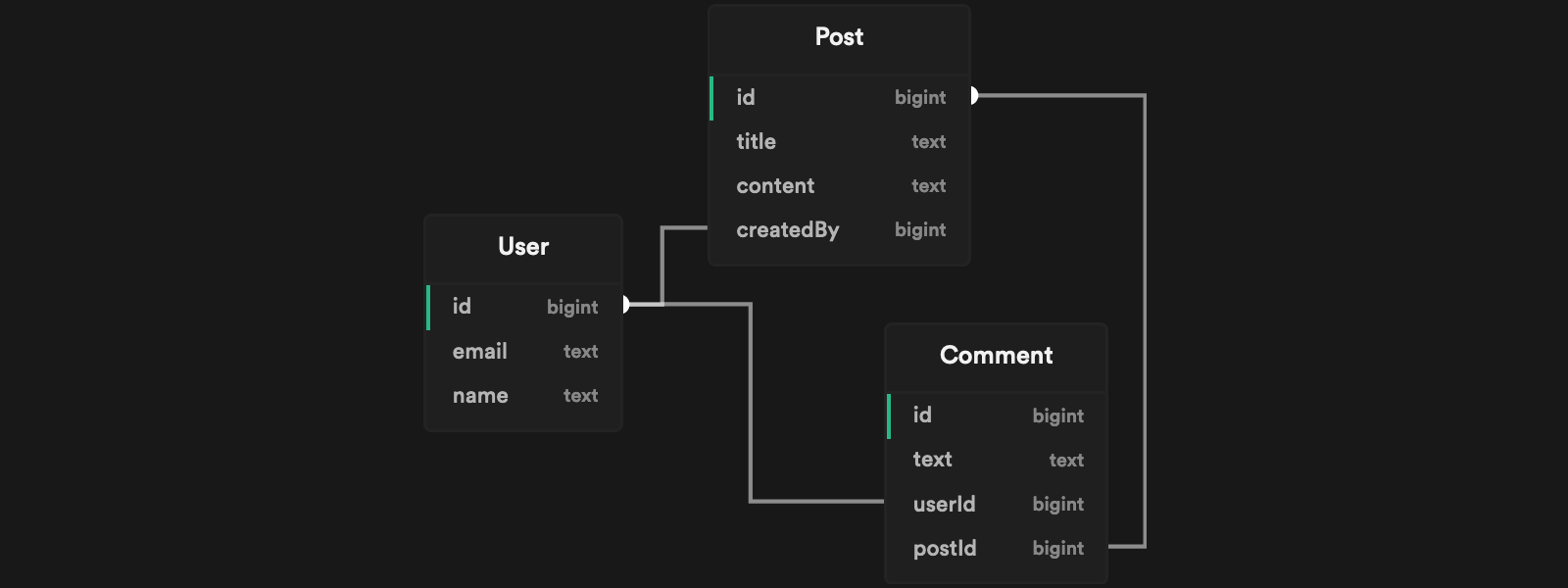

Each project on Supabase comes with:

* A dedicated [Postgres database](/docs/guides/database)

* [Auto-generated APIs](/docs/guides/database/api)

* [Auth and user management](/docs/guides/auth)

* [Edge Functions](/docs/guides/functions)

* [Realtime API](/docs/guides/realtime)

* [Storage](/docs/guides/storage)

## Organizations

Organizations are a way to group your projects. Each organization can be configured with different team members and billing settings.

Refer to [access control](/docs/guides/platform/access-control) for more information on how to manage team members within an organization.

## Platform status

If Supabase experiences outages, we keep you as informed as possible, as early as possible. We provide the following feedback channels:

* Status page: [status.supabase.com](https://status.supabase.com/)

* RSS Feed: [status.supabase.com/history.rss](https://status.supabase.com/history.rss)

* Atom Feed: [status.supabase.com/history.atom](https://status.supabase.com/history.atom)

* Slack Alerts: You can receive updates via the RSS feed, using Slack's [built-in RSS functionality](https://slack.com/help/articles/218688467-Add-RSS-feeds-to-Slack)

`/feed subscribe https://status.supabase.com/history.atom`

Make sure to review our [SLA](/docs/company/sla) for details on our commitment to Platform Stability.

# Supabase Queues

Durable Message Queues with Guaranteed Delivery in Postgres

Supabase Queues is a Postgres-native durable Message Queue system with guaranteed delivery built on the [pgmq database extension](https://github.com/tembo-io/pgmq). It offers developers a seamless way to persist and process Messages in the background while improving the resiliency and scalability of their applications and services.

Queues couples the reliability of Postgres with the simplicity Supabase's platform and developer experience, enabling developers to manage Background Tasks with zero configuration.

## Features

* **Postgres Native**

Built on top of the `pgmq` database extension, create and manage Queues with any Postgres tooling.

* **Guaranteed Message Delivery**

Messages added to Queues are guaranteed to be delivered to your consumers.

* **Exactly Once Message Delivery**

A Message is delivered exactly once to a consumer within a customizable visibility window.

* **Message Durability and Archival**

Messages are stored in Postgres and you can choose to archive them for analytical or auditing purposes.

* **Granular Authorization**

Control client-side consumer access to Queues with API permissions and Row Level Security (RLS) policies.

* **Queue Management and Monitoring**

Create, manage, and monitor Queues and Messages in the Supabase Dashboard.

## Resources

* [Quickstart](/docs/guides/queues/quickstart)

* [API Reference](/docs/guides/queues/api)

* [`pgmq` GitHub Repository](https://github.com/tembo-io/pgmq)

# Realtime

Send and receive messages to connected clients.

Supabase provides a globally distributed [Realtime](https://github.com/supabase/realtime) service with the following features:

* [Broadcast](/docs/guides/realtime/broadcast): Send low-latency messages between clients. Perfect for real-time messaging, database changes, cursor tracking, game events, and custom notifications.

* [Presence](/docs/guides/realtime/presence): Track and synchronize user state across clients. Ideal for showing who's online, or active participants.

* [Postgres Changes](/docs/guides/realtime/postgres-changes): Listen to database changes in real-time.

## What can you build?

* **Chat applications** - Real-time messaging with typing indicators and online presence

* **Collaborative tools** - Document editing, whiteboards, and shared workspaces

* **Live dashboards** - Real-time data visualization and monitoring

* **Multiplayer games** - Synchronized game state and player interactions

* **Social features** - Live notifications, reactions, and user activity feeds

Check the [Getting Started](/docs/guides/realtime/getting_started) guide to get started.

## Examples

{[

{

name: 'Multiplayer.dev',

description: 'Showcase application displaying cursor movements and chat messages using Broadcast.',

href: 'https://multiplayer.dev',

},

{

name: 'Chat',

description: 'Supabase UI chat component using Broadcast to send message between users.',

href: 'https://supabase.com/ui/docs/nextjs/realtime-chat'

},

{

name: 'Avatar Stack',

description: 'Supabase UI avatar stack component using Presence to track connected users.',

href: 'https://supabase.com/ui/docs/nextjs/realtime-avatar-stack'

},

{

name: 'Realtime Cursor',

description: "Supabase UI realtime cursor component using Broadcast to share users' cursors to build collaborative applications.",

href: 'https://supabase.com/ui/docs/nextjs/realtime-cursor'

}

].map((x) => (

{x.description}

))}

{[

{

name: 'Supabase Realtime',

description: 'View the source code.',

href: 'https://github.com/supabase/realtime',

},

{

name: 'Realtime: Multiplayer Edition',

description: 'Read more about Supabase Realtime.',

href: 'https://supabase.com/blog/supabase-realtime-multiplayer-general-availability',

},

].map((x) => (

{x.description}

))}

{

[

{

title: 'Examples',

hasLightIcon: true,

href: '/guides/resources/examples',

description: 'Official GitHub examples, curated content from the community, and more.',

},

{

title: 'Glossary',

hasLightIcon: true,

href: '/guides/resources/glossary',

description: 'Definitions for terminology and acronyms used in the Supabase documentation.',

}

]

.map((resource) => {

return (

{resource.description}

)

})}

### Migrate to Supabase

{

[

{

title: 'Auth0',

icon: '/docs/img/icons/auth0-icon',

href: '/guides/resources/migrating-to-supabase/auth0',

description: 'Move your auth users from Auth0 to a Supabase project.',

hasLightIcon: true,

},

{

title: 'Firebase Auth',

icon: '/docs/img/icons/firebase-icon',

href: '/guides/resources/migrating-to-supabase/firebase-auth',

description: 'Move your auth users from a Firebase project to a Supabase project.',

},

{

title: 'Firestore Data',

icon: '/docs/img/icons/firebase-icon',

href: '/guides/resources/migrating-to-supabase/firestore-data',

description: 'Migrate the contents of a Firestore collection to a single PostgreSQL table.',

},

{

title: 'Firebase Storage',

icon: '/docs/img/icons/firebase-icon',

href: '/guides/resources/migrating-to-supabase/firebase-storage',

description: 'Convert your Firebase Storage files to Supabase Storage.'

},

{

title: 'Heroku',

icon: '/docs/img/icons/heroku-icon',

href: '/guides/resources/migrating-to-supabase/heroku',

description: 'Migrate your Heroku Postgres database to Supabase.'

},

{

title: 'Render',

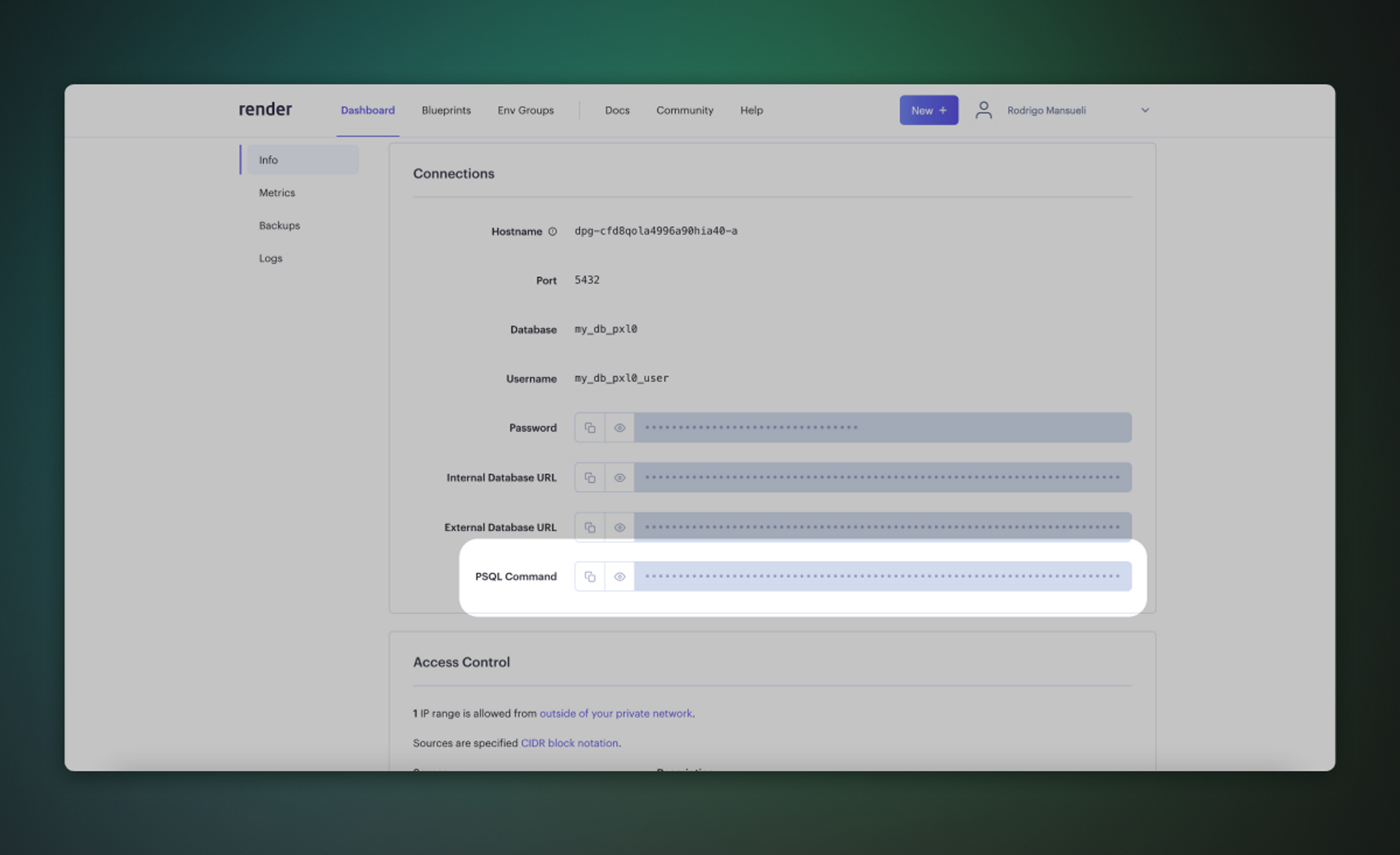

icon: '/docs/img/icons/render-icon',

href: '/guides/resources/migrating-to-supabase/render',

description: 'Migrate your Render Postgres database to Supabase.'

},

{

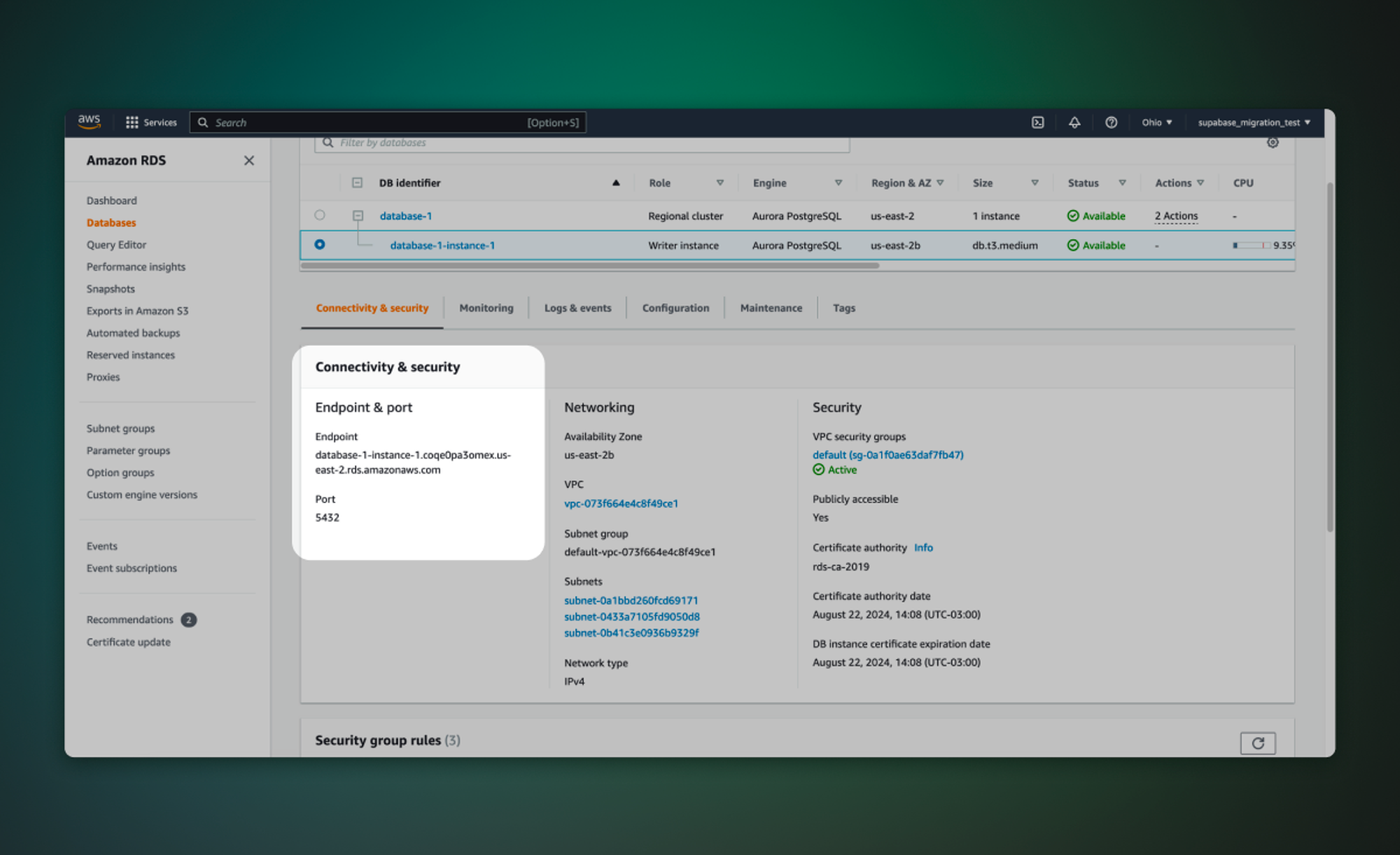

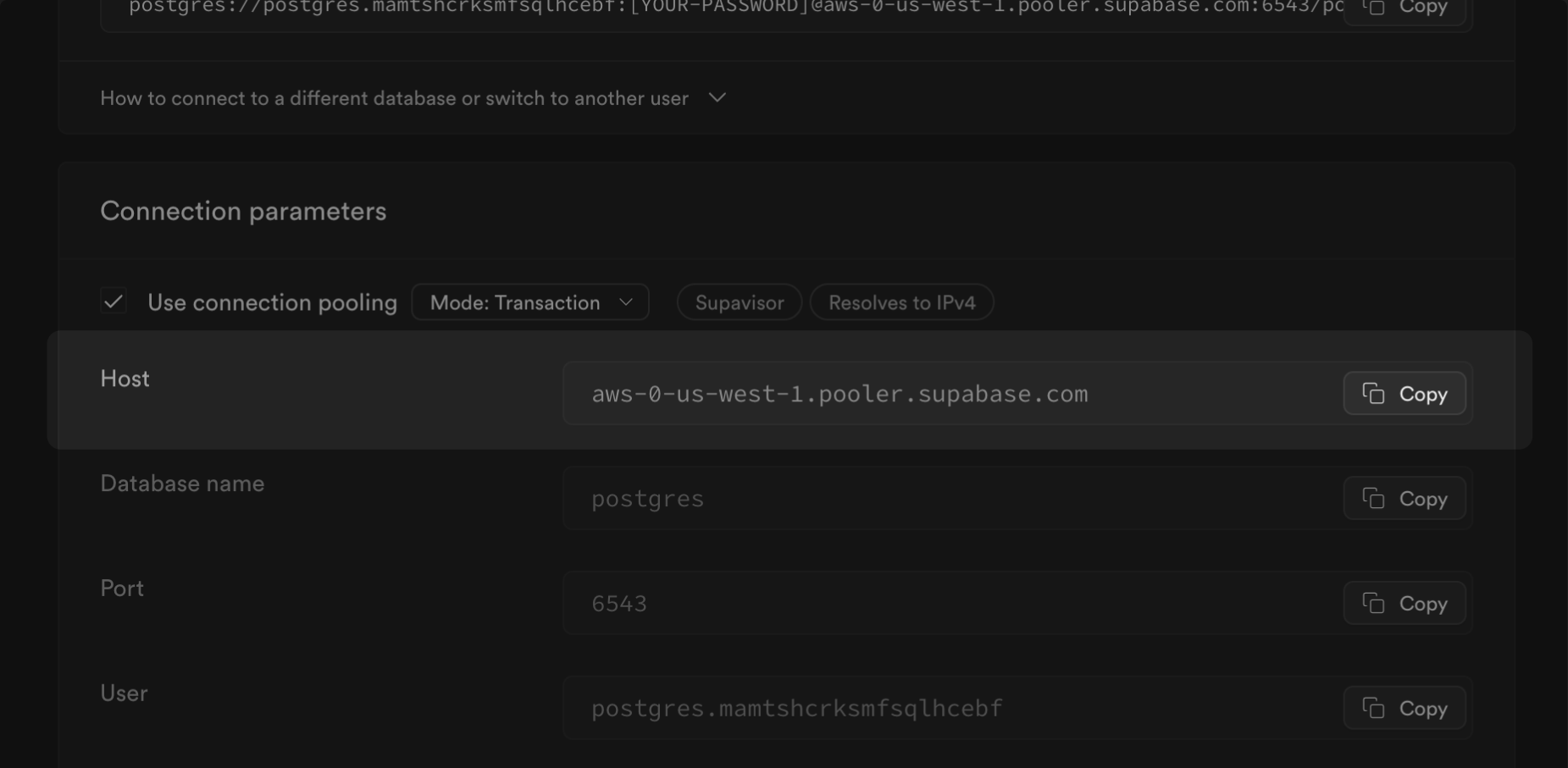

title: 'Amazon RDS',

icon: '/docs/img/icons/aws-rds-icon',

href: '/guides/resources/migrating-to-supabase/amazon-rds',

description: 'Migrate your Amazon RDS database to Supabase.'

},

{

title: 'Postgres',

icon: '/docs/img/icons/postgres-icon',

href: '/guides/resources/migrating-to-supabase/postgres',

description: 'Migrate your Postgres database to Supabase.'

},

{

title: 'MySQL',

icon: '/docs/img/icons/mysql-icon',

href: '/guides/resources/migrating-to-supabase/mysql',

description: 'Migrate your MySQL database to Supabase.'

},

{

title: 'Microsoft SQL Server',

icon: '/docs/img/icons/mssql-icon',

href: '/guides/resources/migrating-to-supabase/mssql',

description: 'Migrate your Microsoft SQL Server database to Supabase.'

}

]

.map((product) => {

return (

{product.description}

)

})}

### Postgres resources

{

[

{

title: 'Managing Indexes',

hasLightIcon: true,

href: '/guides/database/postgres/indexes',

description: 'Improve query performance using various index types in Postgres.'

},

{

title: 'Cascade Deletes',

hasLightIcon: true,

href: '/guides/database/postgres/cascade-deletes',

description: 'Understand the types of foreign key constraint deletes.'

},

{

title: 'Drop all tables in schema',

hasLightIcon: true,

href: '/guides/database/postgres/dropping-all-tables-in-schema',

description: 'Delete all tables in a given schema.'

},

{

title: 'Select first row per group',

hasLightIcon: true,

href: '/guides/database/postgres/first-row-in-group',

description: 'Retrieve the first row in each distinct group.'

},

{

title: 'Print PostgreSQL version',

hasLightIcon: true,

href: '/guides/database/postgres/which-version-of-postgres',

description: 'Find out which version of Postgres you are running.'

}

]

.map((resource) => {

return (

{resource.description}

)

})}

{/* end of container */}

# Supabase Security

Supabase is a hosted platform which makes it very simple to get started without needing to manage any infrastructure. The hosted platform comes with many security and compliance controls managed by Supabase.

# Compliance

Supabase is SOC 2 Type 2 compliant and regularly audited. All projects at Supabase are governed by the same set of compliance controls.

The [SOC 2 Compliance Guide](/docs/guides/security/soc-2-compliance) explains Supabase's SOC 2 responsibilities and controls in more detail.

The [HIPAA Compliance Guide](/docs/guides/security/hipaa-compliance) explains Supabase's HIPAA responsibilities. Additional [security and compliance controls](/docs/guides/deployment/shared-responsibility-model#managing-healthcare-data) for projects that deal with electronic Protected Health Information (ePHI) and require HIPAA compliance are available through the HIPAA add-on.

# Platform configuration

As a hosted platform, Supabase provides additional security controls to further enhance the security posture depending on organizations' own requirements or obligations.

These can be found under the [dedicated security page](/dashboard/org/_/security) under organization settings. And are described in greater detail [here](/docs/guides/security/platform-security).

# Product configuration

Each product offered by Supabase comes with customizable security controls and these security controls help ensure that applications built on Supabase are secure, compliant, and resilient against various threats.

The [security configuration guides](/docs/guides/security/product-security) provide detailed information for configuring individual products.

# Self-Hosting

Install and run your own Supabase on your computer, server, or cloud infrastructure.

## Get started

The fastest and recommended way to self-host Supabase is using Docker.

Docker

Official

}

showIconBg={true}

>

Deploy Supabase within your own infrastructure using Docker Compose.

## Other deployment options

{/* supa-mdx-lint-disable-next-line Rule004ExcludeWords */}

There are several other ways to deploy Supabase with the help of community-driven projects. These projects may be outdated and are seeking active maintainers. If you're interested in maintaining one of these projects, [contact the Community team](/open-source/contributing/supasquad).

{selfHostingCommunity.map((x) => (

{x.name}

Maintainer needed

}

>

{x.description}

))}

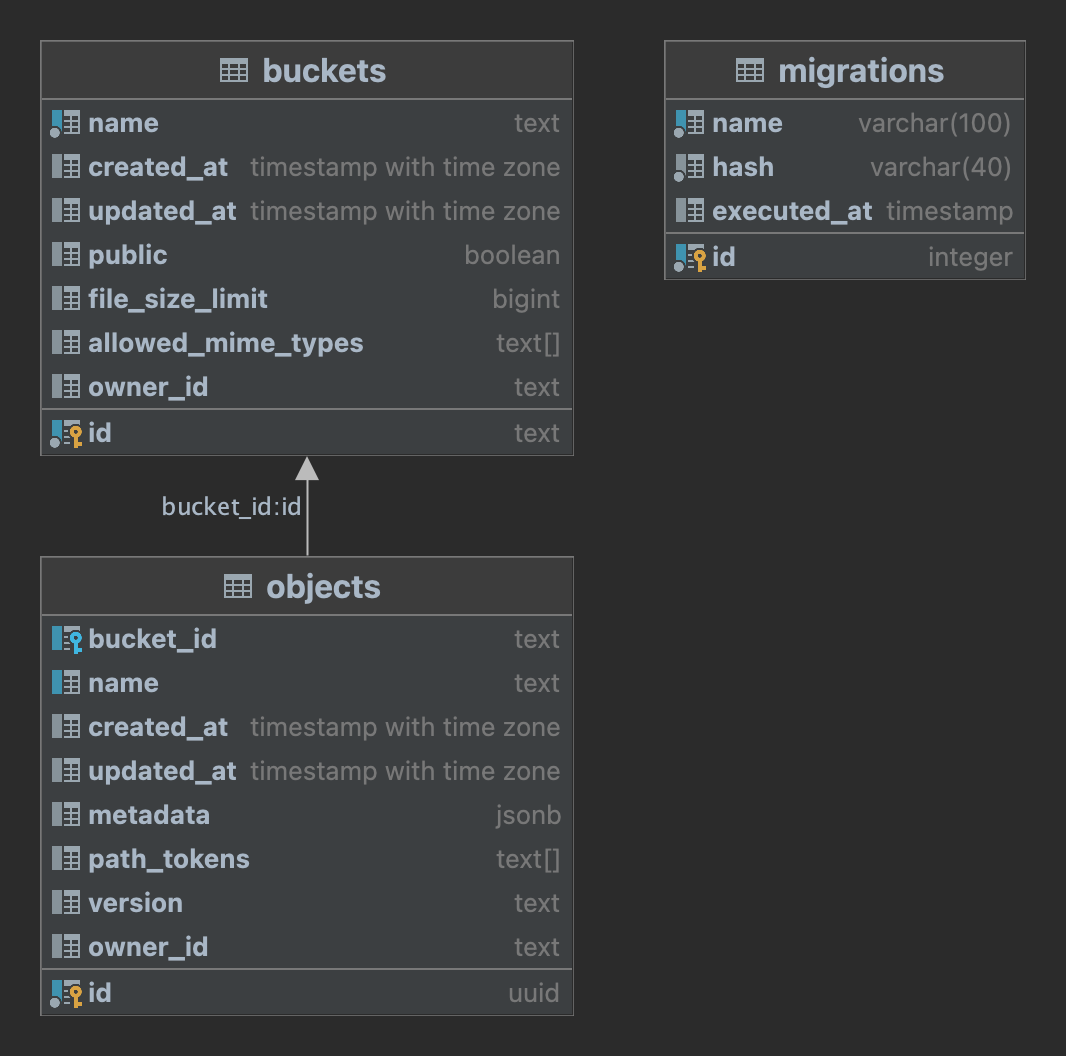

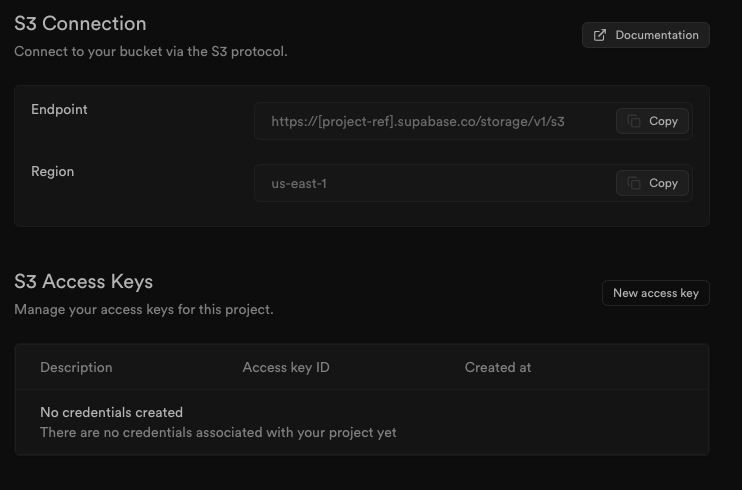

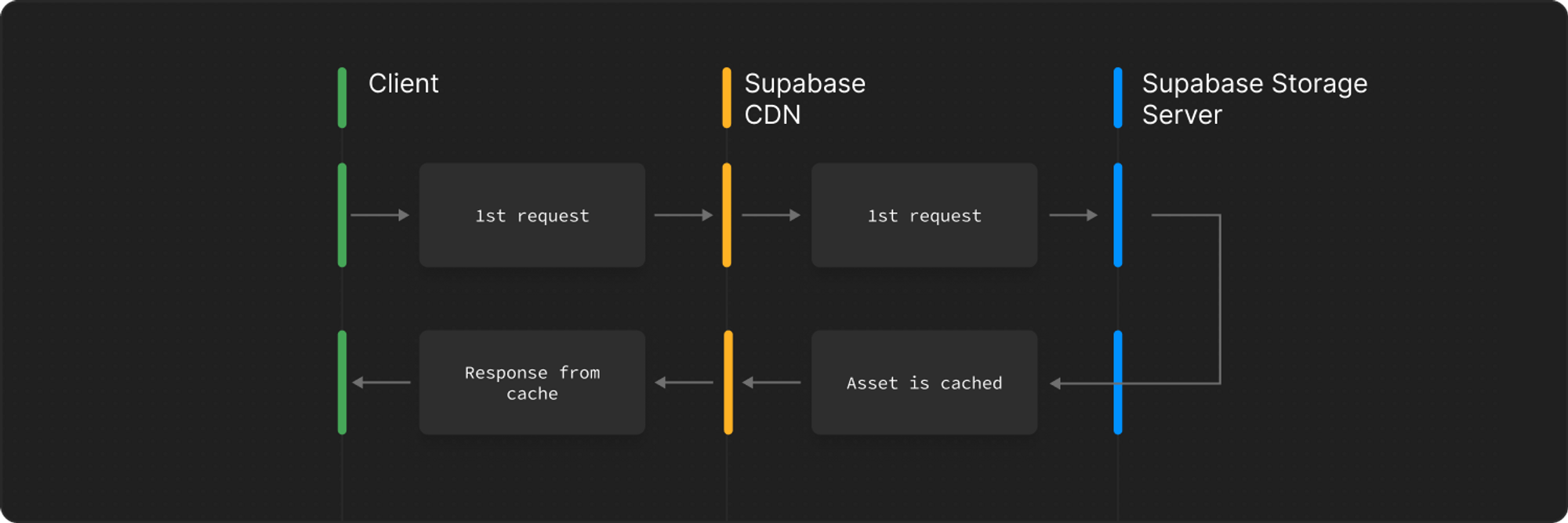

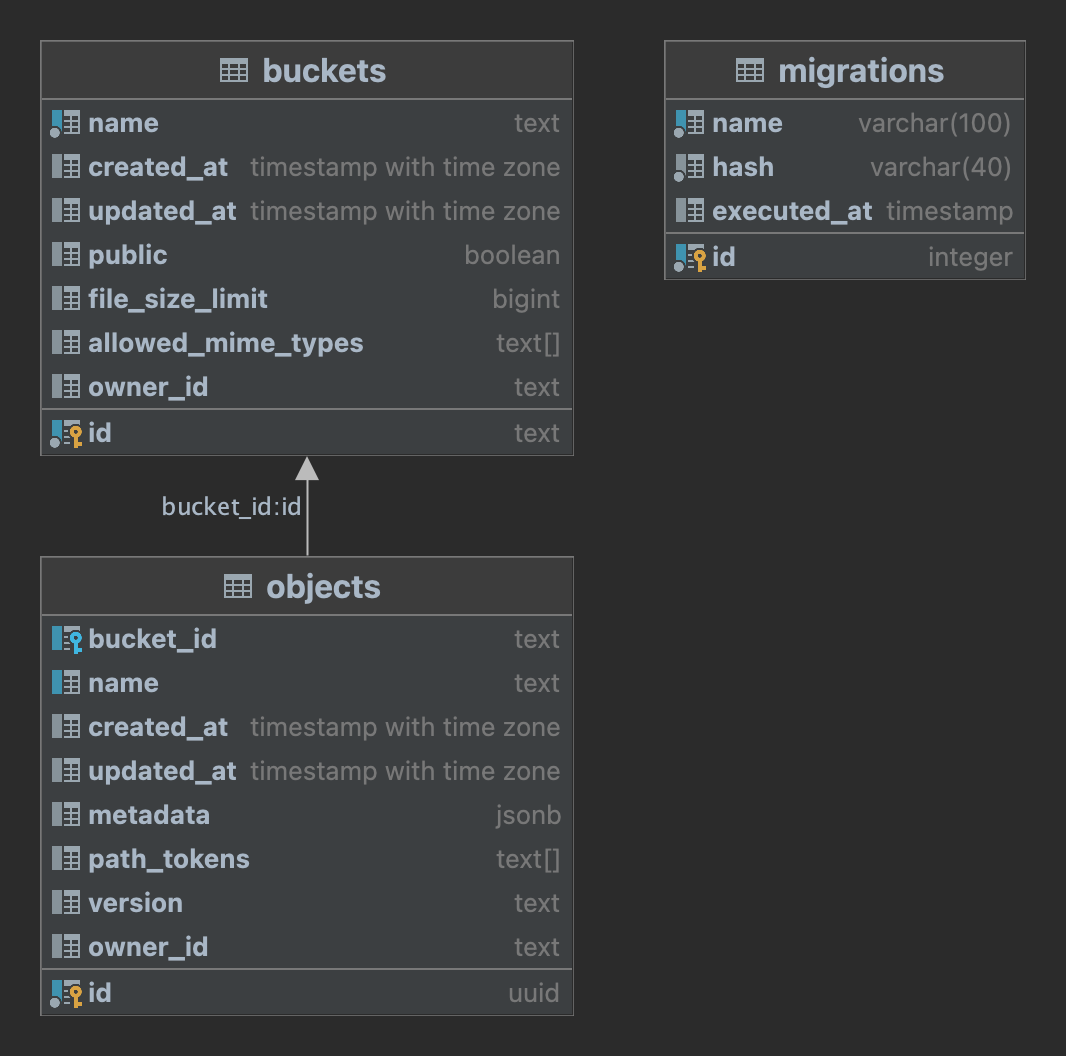

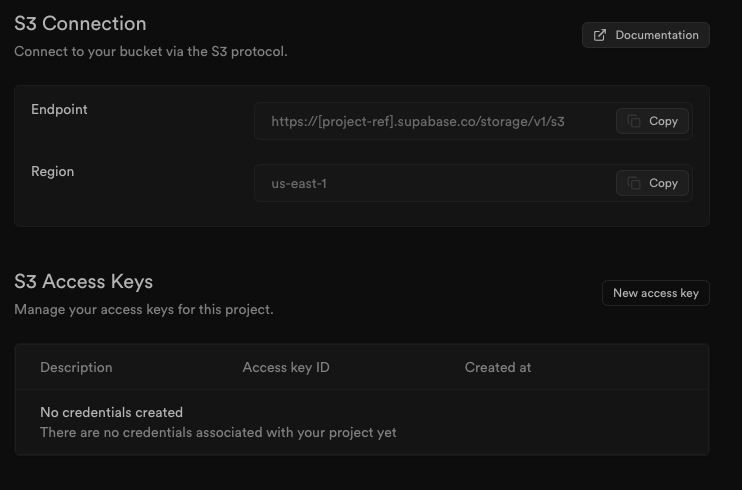

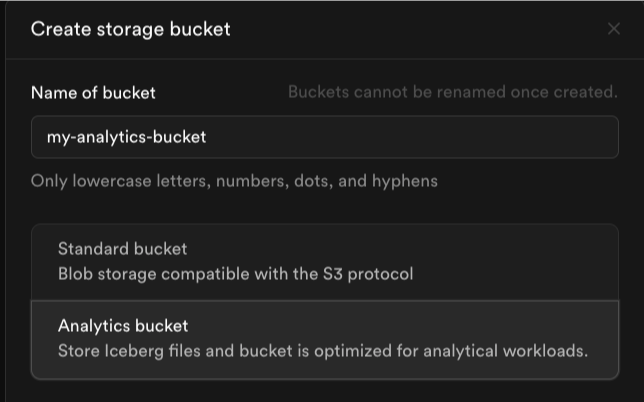

{storageExamples.map((x) => (

{x.description}

))}

{[

{

name: 'Supabase Storage API',

description: 'View the source code.',

href: 'https://github.com/supabase/storage-api',

},

{

name: 'OpenAPI Spec',

description: 'See the Swagger Documentation for Supabase Storage.',

href: 'https://supabase.github.io/storage/',

},

].map((x) => (

{x.description}

))}

HTTP Version

Gzip

Headers |

| Datadog | HTTP | API Key

Region |

| Loki | HTTP | URL

Headers |

| Sentry | HTTP | DSN |

| Amazon S3 | AWS SDK | S3 Bucket

Region

Access Key ID

Secret Access Key

Batch Timeout |

HTTP requests are batched with a max of 250 logs or 1 second intervals, whichever happens first. Logs are compressed via Gzip if the destination supports it.

## Generic HTTP endpoint

Logs are sent as a POST request with a JSON body. Both HTTP/1 and HTTP/2 protocols are supported.

Custom headers can optionally be configured for all requests.

Note that requests are **unsigned**.

Unsigned requests to HTTP endpoints are temporary and all requests will signed in the near future.

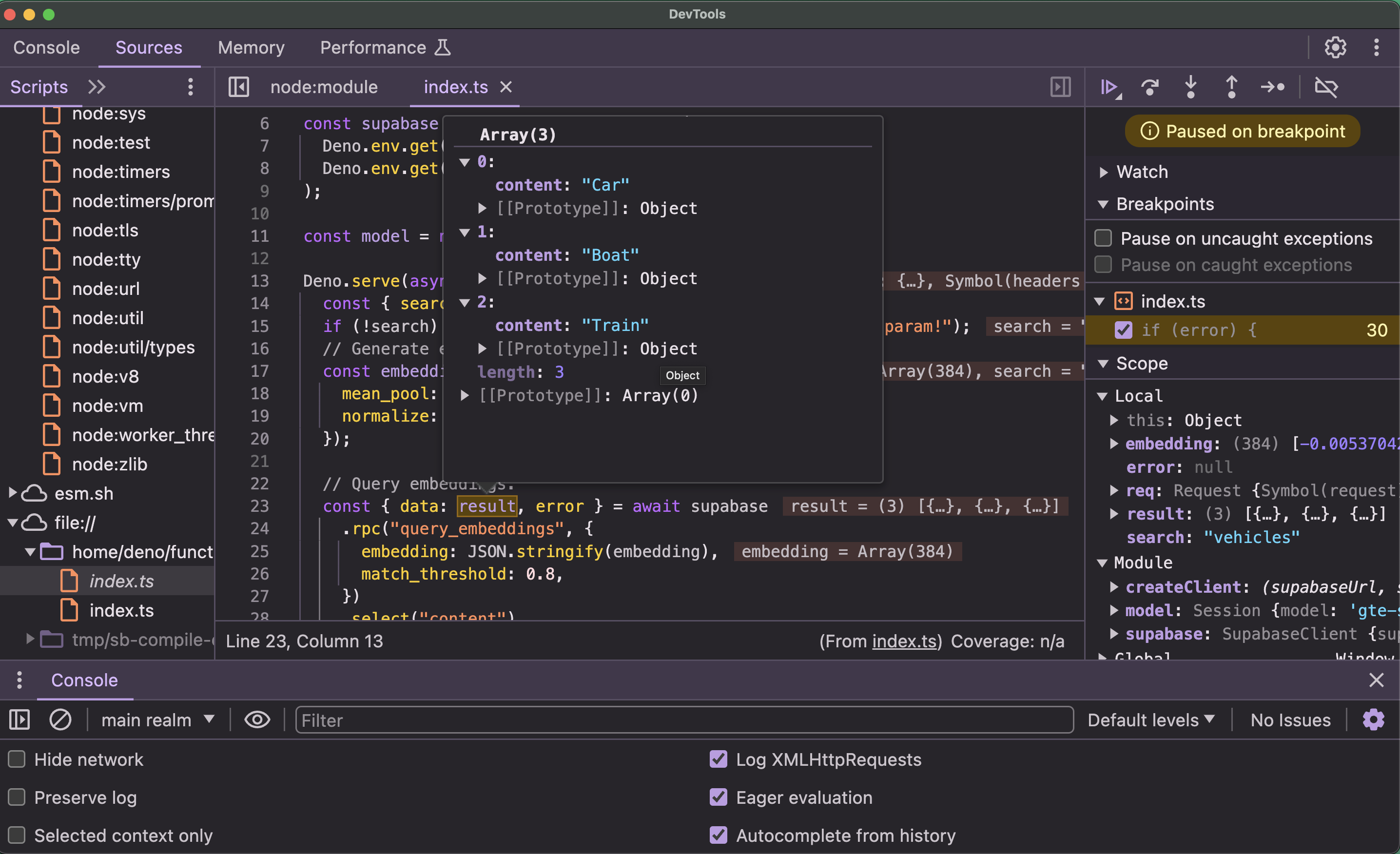

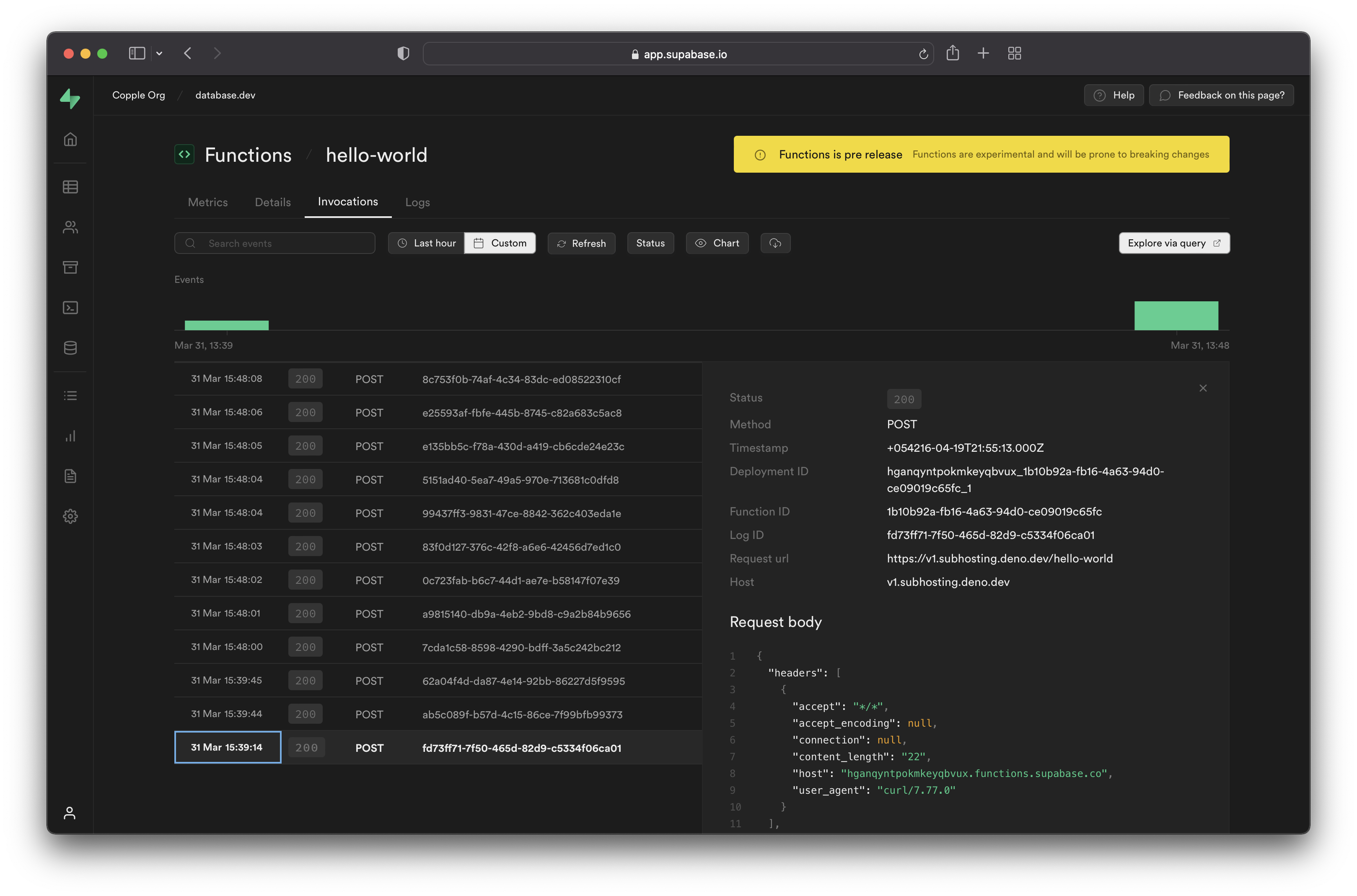

1. Create and deploy the edge function

Generate a new edge function template and update it to log out the received JSON payload. For simplicity, we will accept any request with an Anon Key.

```bash

supabase functions new hello-world

```

You can use this example snippet as an illustration of how the received request will be like.

```ts

import 'npm:@supabase/functions-js/edge-runtime.d.ts'

Deno.serve(async (req) => {

const data = await req.json()

console.log(`Received ${data.length} logs, first log:\n ${JSON.stringify(data[0])}`)

return new Response(JSON.stringify({ message: 'ok' }), {

headers: { 'Content-Type': 'application/json' },

})

})

```

And then deploy it with:

```bash

supabase functions deploy hello-world --project-ref [PROJECT REF]

```

This will create an infinite loop, as we are generating an additional log event that will eventually trigger a new request to this edge function. However, due to the batching nature of how Log Drain events are dispatched, the rate of edge function triggers will not increase greatly and will have an upper bound.

2. Configure the HTTP Drain

Create a HTTP drain under the [Project Settings > Log Drains](/dashboard/project/_/settings/log-drains).

* Disable the Gzip, as we want to receive the payload without compression.

* Under URL, set it to your edge function URL `https://[PROJECT REF].supabase.co/functions/v1/hello-world`

* Under Headers, set the `Authorization: Bearer [ANON KEY]`

Gzip payloads can be decompressed using native in-built APIs. Refer to the Edge Function [compression guide](/docs/guides/functions/compression)

```ts

import { gunzipSync } from 'node:zlib'

Deno.serve(async (req) => {

try {

// Check if the request body is gzip compressed

const contentEncoding = req.headers.get('content-encoding')

if (contentEncoding !== 'gzip') {

return new Response('Request body is not gzip compressed', {

status: 400,

})

}

// Read the compressed body

const compressedBody = await req.arrayBuffer()

// Decompress the body

const decompressedBody = gunzipSync(new Uint8Array(compressedBody))

// Convert the decompressed body to a string

const decompressedString = new TextDecoder().decode(decompressedBody)

const data = JSON.parse(decompressedString)

// Process the decompressed body as needed

console.log(`Received: ${data.length} logs.`)

return new Response('ok', {

headers: { 'Content-Type': 'text/plain' },

})

} catch (error) {

console.error('Error:', error)

return new Response('Error processing request', { status: 500 })

}

})

```

## Datadog logs

Logs sent to Datadog have the name of the log source set on the `service` field of the event and the source set to `Supabase`. Logs are gzipped before they are sent to Datadog.

The payload message is a JSON string of the raw log event, prefixed with the event timestamp.

To setup Datadog log drain, generate a Datadog API key [here](https://app.datadoghq.com/organization-settings/api-keys) and the location of your Datadog site.

1. Generate API Key in [Datadog dashboard](https://app.datadoghq.com/organization-settings/api-keys)

2. Create log drain in [Supabase dashboard](/dashboard/project/_/settings/log-drains)

3. Watch for events in the [Datadog Logs page](https://app.datadoghq.com/logs)

[Grok parser](https://docs.datadoghq.com/service_management/events/pipelines_and_processors/grok_parser?tab=matchers) matcher for extracting the timestamp to a `date` field

```

%{date("yyyy-MM-dd'T'HH:mm:ss.SSSSSSZZ"):date}

```

[Grok parser](https://docs.datadoghq.com/service_management/events/pipelines_and_processors/grok_parser?tab=matchers) matcher for converting stringified JSON to structured JSON on the `json` field.

```

%{data::json}

```

[Remapper](https://docs.datadoghq.com/service_management/events/pipelines_and_processors/remapper) for setting the log level.

```

metadata.parsed.error_severity, metadata.level

```

If you are interested in other log drains, upvote them [here](https://github.com/orgs/supabase/discussions/28324)

## Loki

Logs sent to the Loki HTTP API are specifically formatted according to the HTTP API requirements. See the official Loki HTTP API documentation for [more details](https://grafana.com/docs/loki/latest/reference/loki-http-api/#ingest-logs).

Events are batched with a maximum of 250 events per request.

The log source and product name will be used as stream labels.

The `event_message` and `timestamp` fields will be dropped from the events to avoid duplicate data.

Loki must be configured to accept **structured metadata**, and it is advised to increase the default maximum number of structured metadata fields to at least 500 to accommodate large log event payloads of different products.

## Sentry

Logs are sent to Sentry as part of [Sentry's Logging Product](https://docs.sentry.io/product/explore/logs/). Ingesting Supabase logs as Sentry errors is currently not supported.

To setup the Sentry log drain, you need to do the following:

1. Grab your DSN from your [Sentry project settings](https://docs.sentry.io/concepts/key-terms/dsn-explainer/). It should be of the format `{PROTOCOL}://{PUBLIC_KEY}:{SECRET_KEY}@{HOST}{PATH}/{PROJECT_ID}`.

2. Create log drain in [Supabase dashboard](/dashboard/project/_/settings/log-drains)

3. Watch for events in the [Sentry Logs page](https://sentry.io/explore/logs/)

All fields from the log event are attached as attributes to the Sentry log, which can be used for filtering and grouping in the Sentry UI. There are no limits to cardinality or the number of attributes that can be attached to a log.

If you are self-hosting Sentry, Sentry Logs are only supported in self-hosted version [25.9.0](https://github.com/getsentry/self-hosted/releases/tag/25.9.0) and later.

## Axiom

Logs sent to a specified Axiom's dataset as JSON of a raw log event,

with timestamp modified to be parsed by ingestion endpoint.

To set up the Axiom log drain, you have to:

1. Create a dataset for ingestion in Axiom dashboard -> Datasets

2. Generate an Axiom API Token with permission to ingest into the created dataset (see [Axiom docs](https://axiom.co/docs/reference/tokens#create-basic-api-token))

3. Create log drain in [Supabase dashboard](/dashboard/project/_/settings/log-drains), providing:

* Name of the dataset

* API token

4. Watch for events in the Stream panel of Axiom dashboard

## Amazon S3

Logs are written to an existing S3 bucket that you own.

Required configuration when creating an S3 Log Drain:

* S3 Bucket: the name of an existing S3 bucket.

* Region: the AWS region where the bucket is located.

* Access Key ID: used for authentication.

* Secret Access Key: used for authentication.

* Batch Timeout (ms): maximum time to wait before flushing a batch. Recommended 2000–5000ms.

Ensure the AWS account tied to the Access Key ID has permissions to write to the specified S3 bucket.

## Pricing

For a detailed breakdown of how charges are calculated, refer to [Manage Log Drain usage](/docs/guides/platform/manage-your-usage/log-drains).

# Logging

The Supabase Platform includes a Logs Explorer that allows log tracing and debugging. Log retention is based on your [project's pricing plan](/pricing).

## Product logs

Supabase provides a logging interface specific to each product. You can use simple regular expressions for keywords and patterns to search log event messages. You can also export and download the log events matching your query as a spreadsheet.

{/* */}

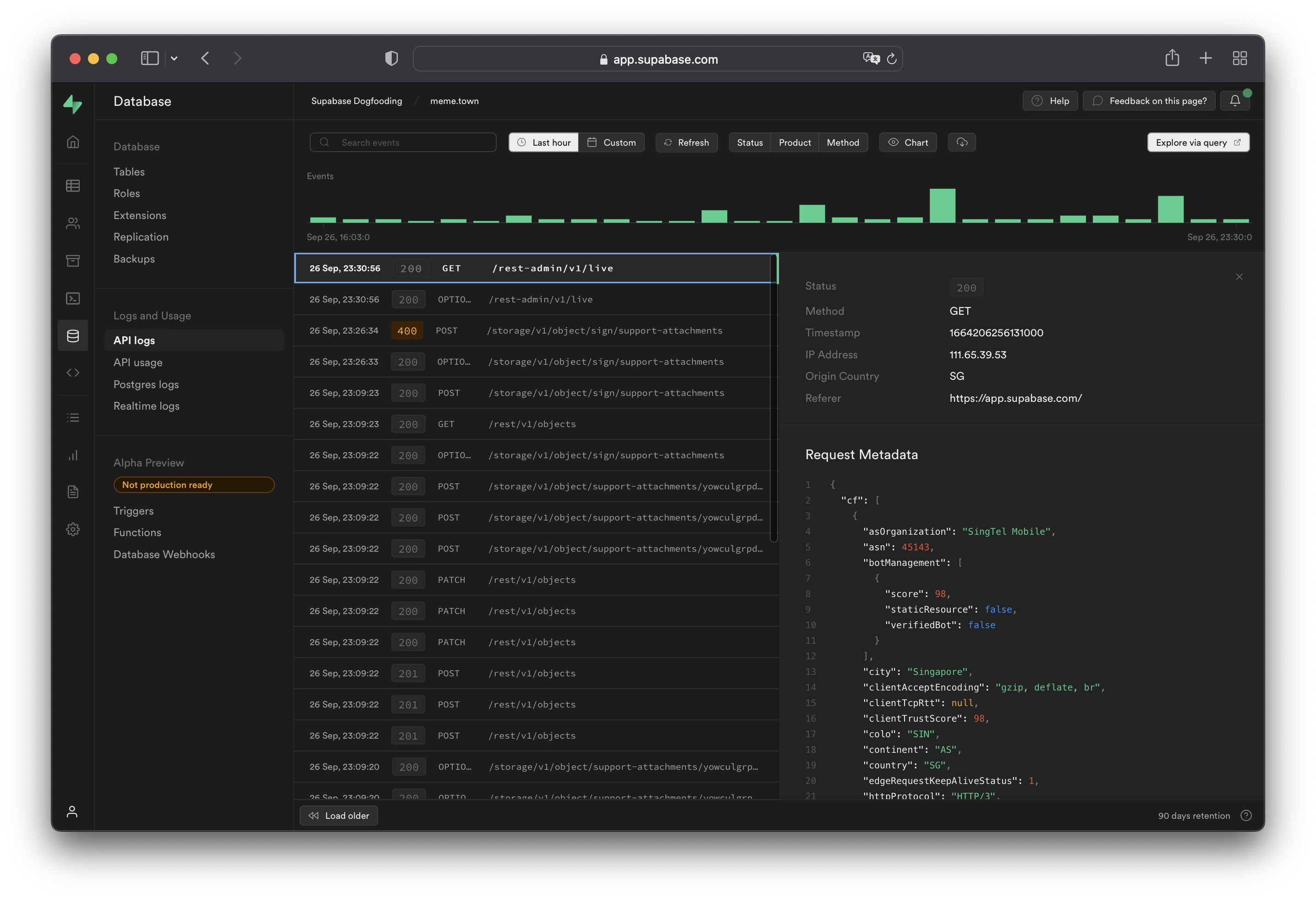

[API logs](/dashboard/project/_/logs/edge-logs) show all network requests and response for the REST and GraphQL [APIs](../../guides/database/api). If [Read Replicas](/docs/guides/platform/read-replicas) are enabled, logs are automatically filtered between databases as well as the [API Load Balancer](/docs/guides/platform/read-replicas#api-load-balancer) endpoint. Logs for a specific endpoint can be toggled with the `Source` button on the upper-right section of the dashboard.

When viewing logs originating from the API Load Balancer endpoint, the upstream database or the one that eventually handles the request can be found under the `Redirect Identifier` field. This is equivalent to `metadata.load_balancer_redirect_identifier` when querying the underlying logs.

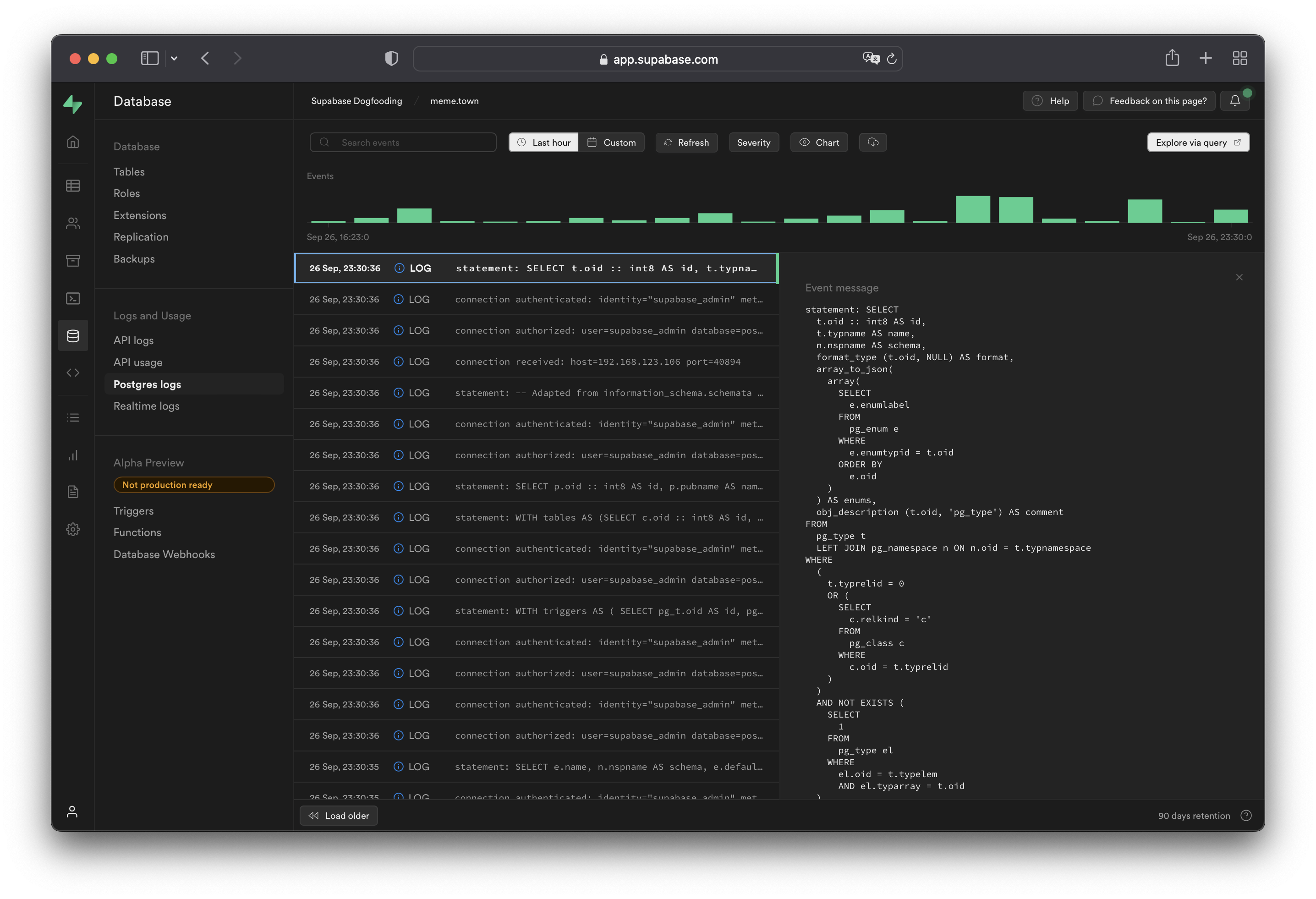

[Postgres logs](/dashboard/project/_/logs/postgres-logs) show queries and activity for your [database](../../guides/database). If [Read Replicas](/docs/guides/platform/read-replicas) are enabled, logs are automatically filtered between databases. Logs for a specific database can be toggled with the `Source` button on the upper-right section of the dashboard.

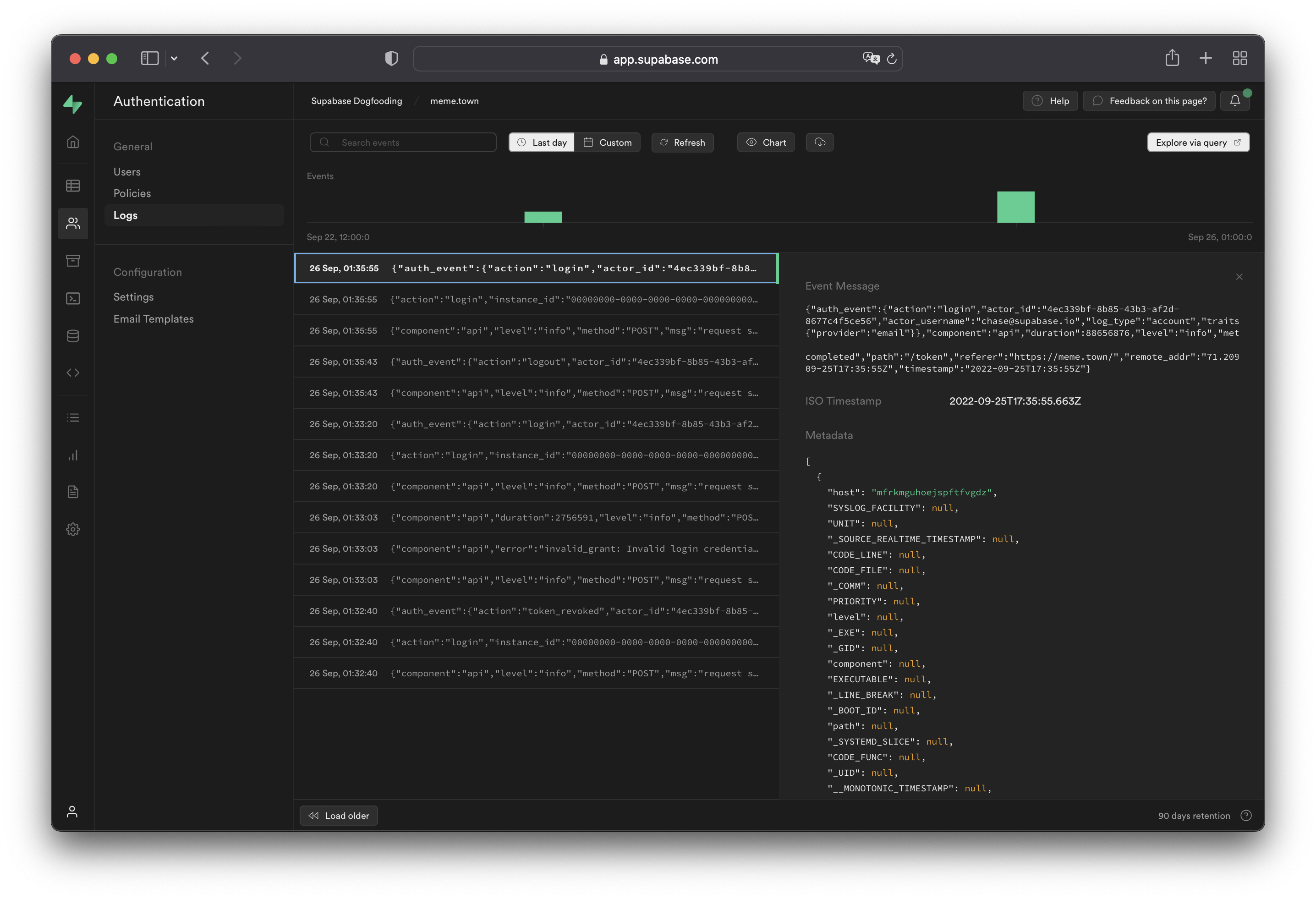

[Auth logs](/dashboard/project/_/logs/auth-logs) show all server logs for your [Auth usage](../../guides/auth).

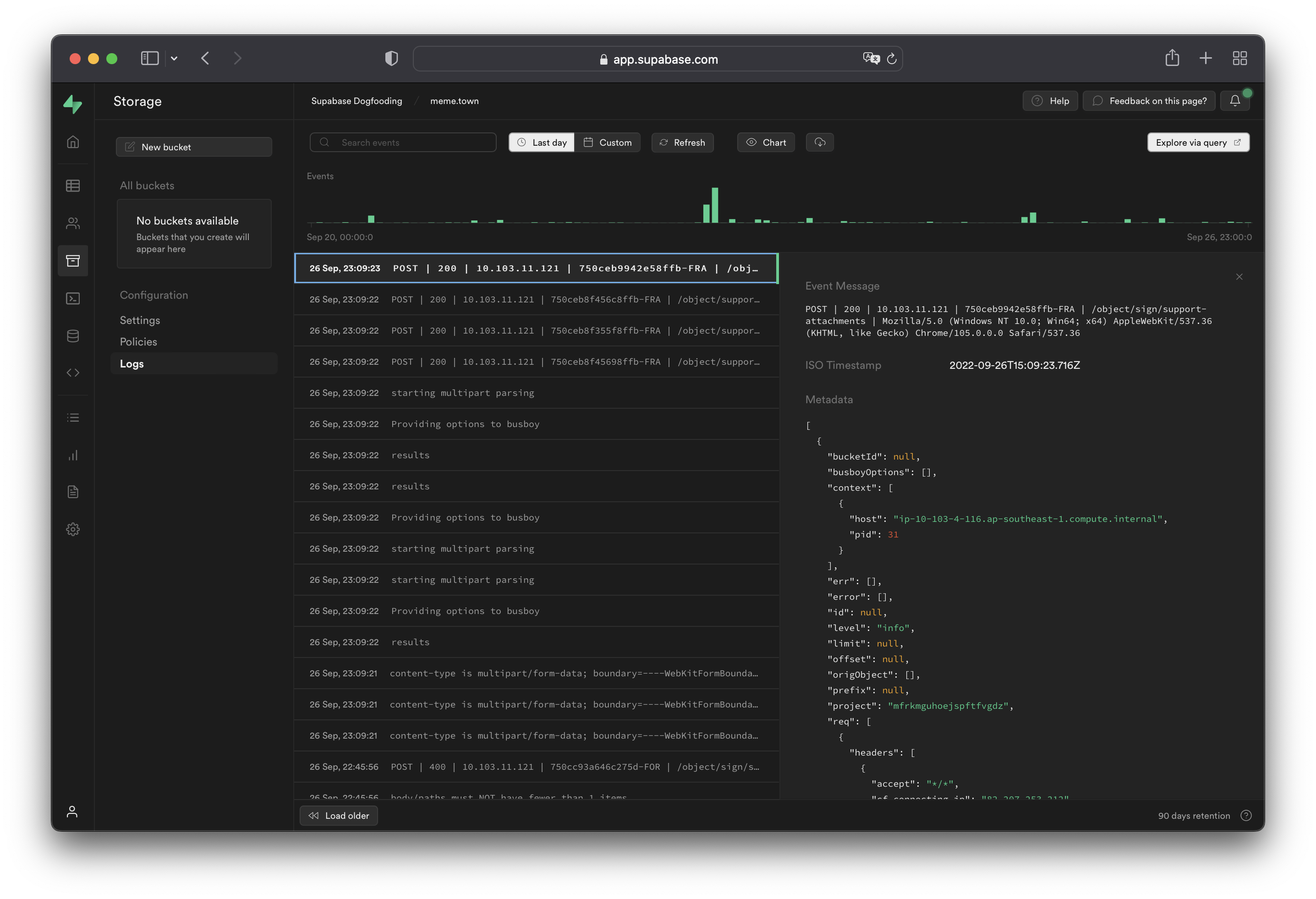

[Storage logs](/dashboard/project/_/logs/storage-logs) shows all server logs for your [Storage API](../../guides/storage).

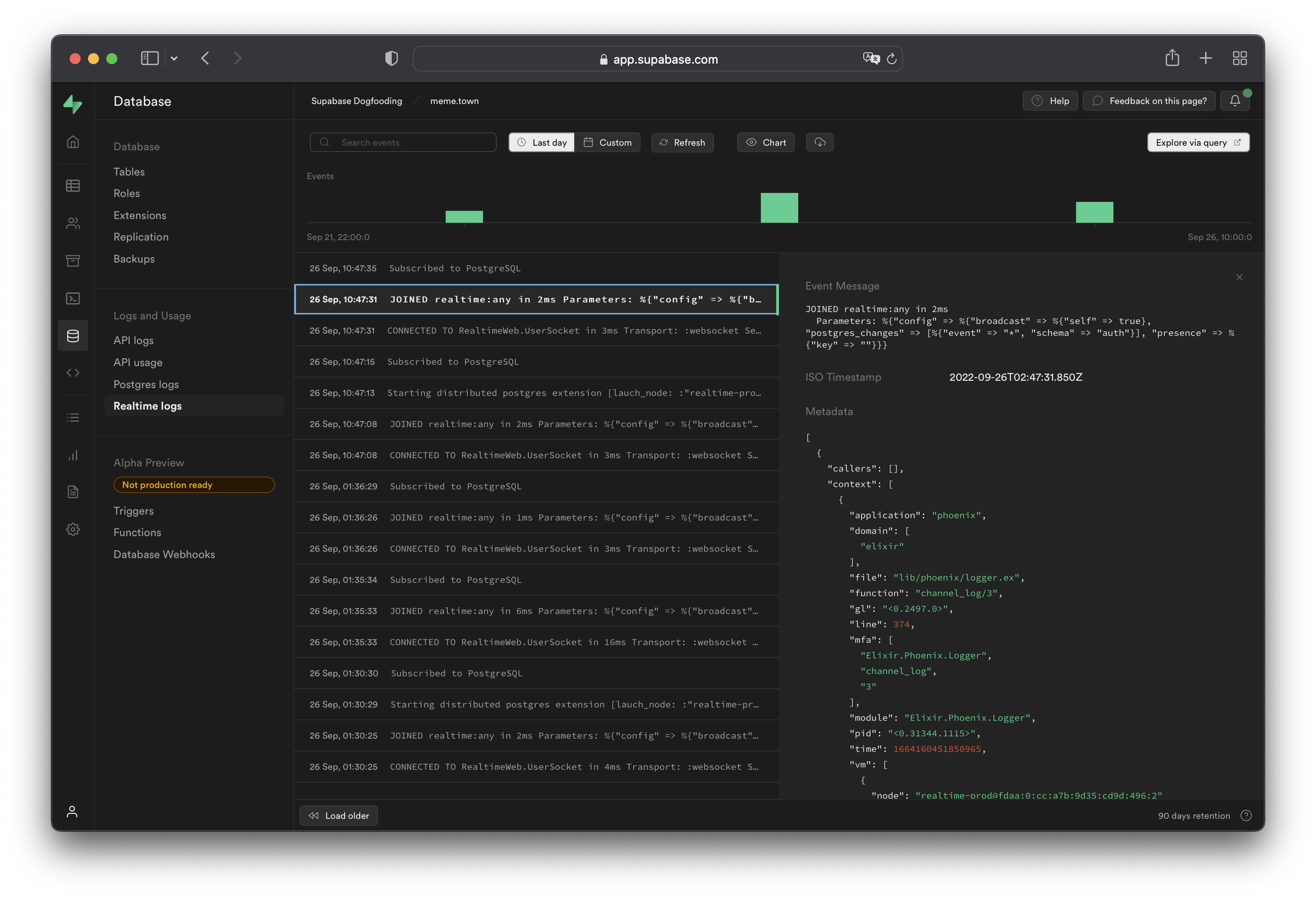

[Realtime logs](/dashboard/project/_/logs/realtime-logs) show all server logs for your [Realtime API usage](../../guides/realtime).

Realtime connections are not logged by default. Turn on [Realtime connection logs per client](#logging-realtime-connections) with the `log_level` parameter.

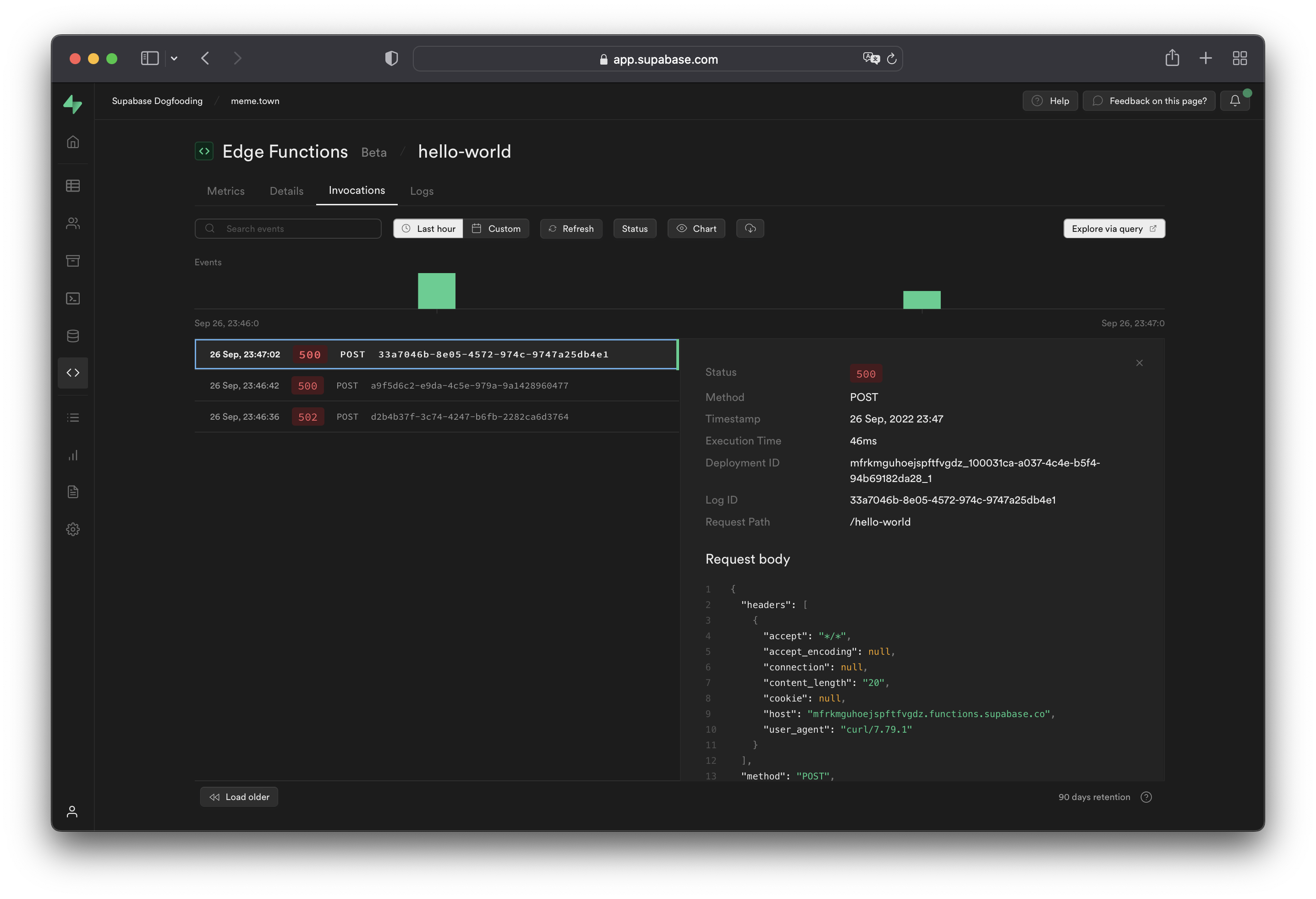

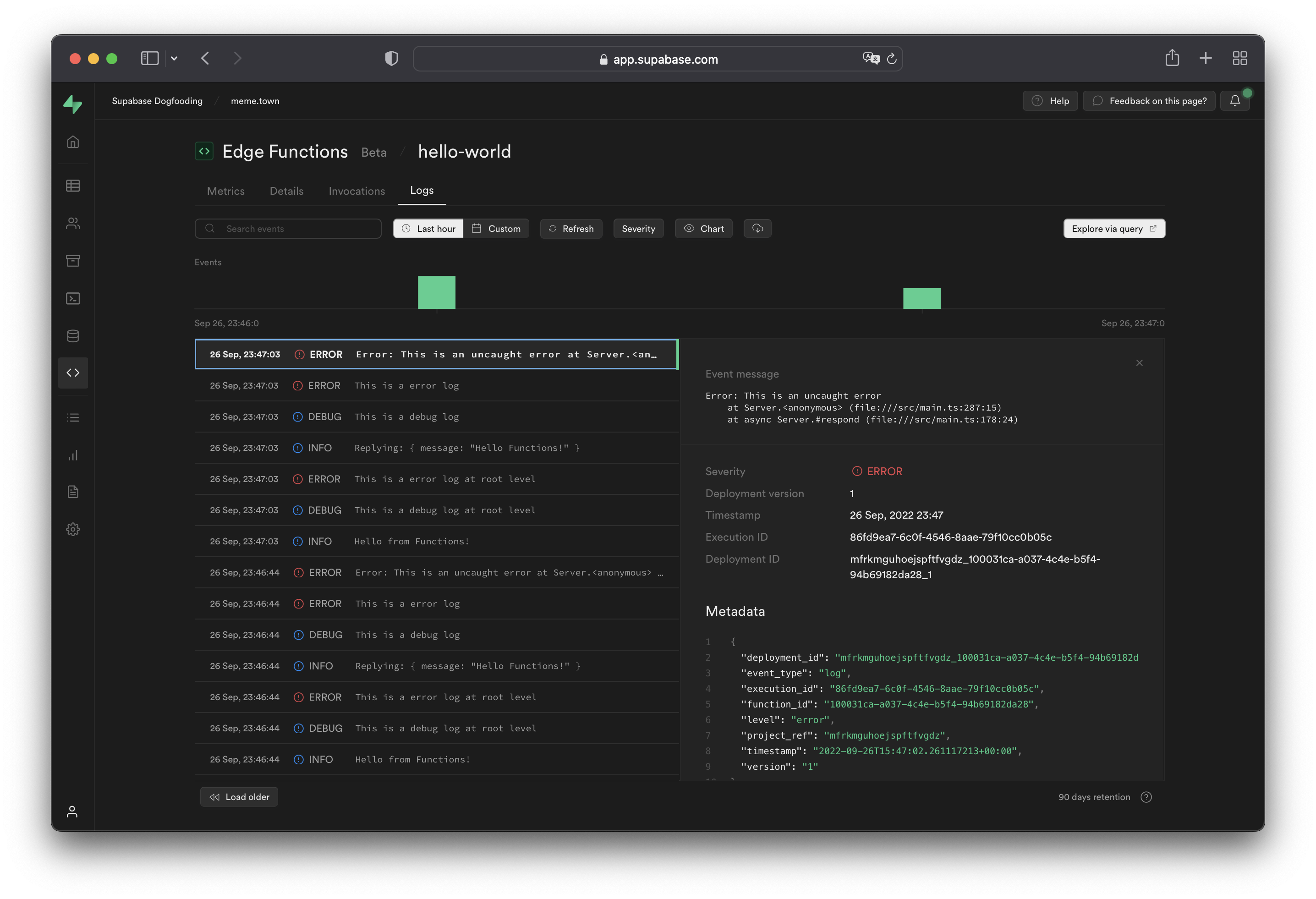

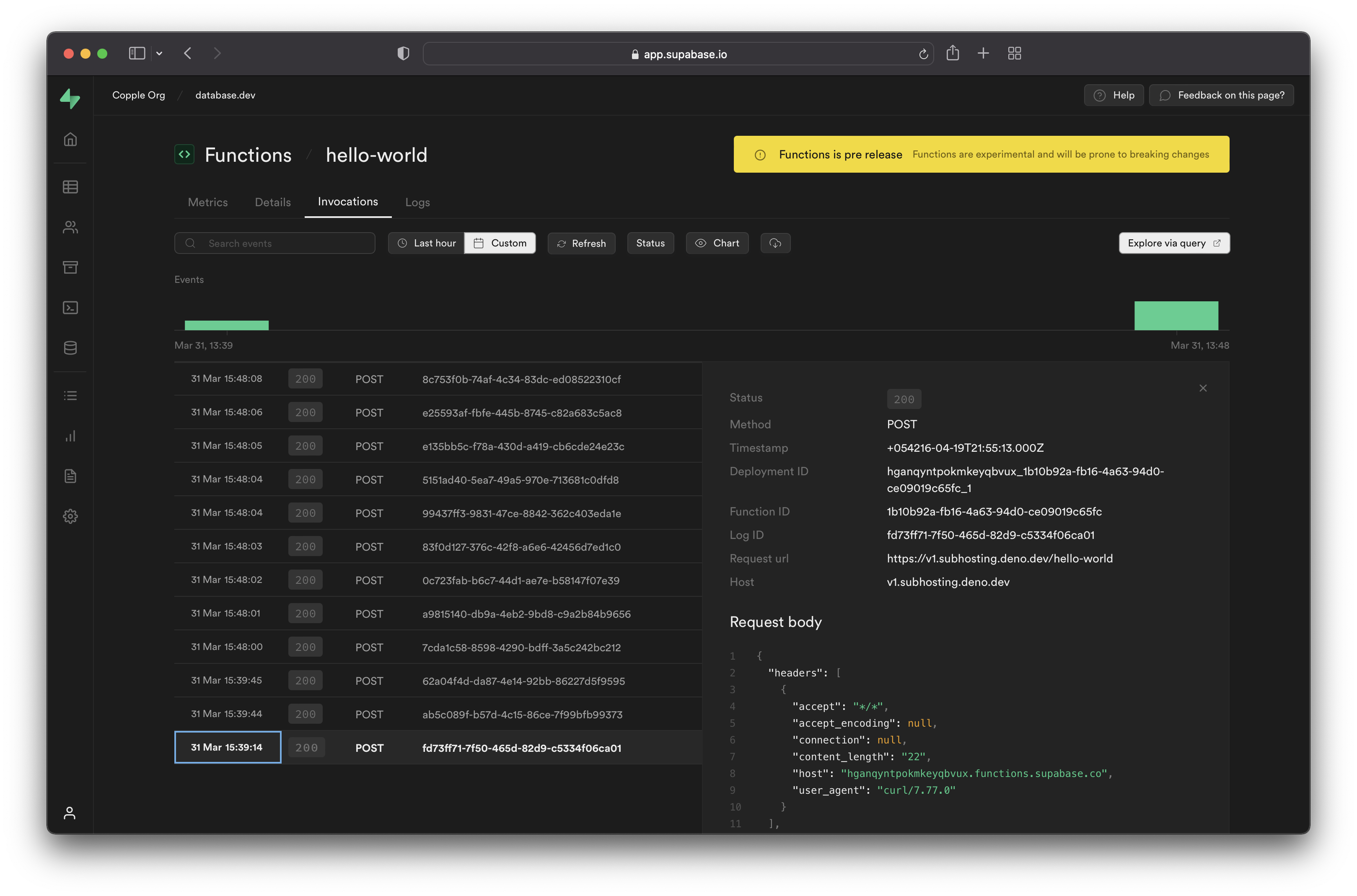

For each [Edge Function](/dashboard/project/_/functions), logs are available under the following tabs:

**Invocations**

The Invocations tab displays the edge logs of function calls.

**Logs**

The Logs tab displays logs emitted during function execution.

**Log Message Length**

Edge Function log messages have a max length of 10,000 characters. If you try to log a message longer than that it will be truncated.

***

## Working with API logs

[API logs](/dashboard/project/_/logs/edge-logs) run through the Cloudflare edge servers and will have attached Cloudflare metadata under the `metadata.request.cf.*` fields.

### Allowed headers

A strict list of request and response headers are permitted in the API logs. Request and response headers will still be received by the server(s) and client(s), but will not be attached to the API logs generated.

Request headers:

* `accept`

* `cf-connecting-ip`

* `cf-ipcountry`

* `host`

* `user-agent`

* `x-forwarded-proto`

* `referer`

* `content-length`

* `x-real-ip`

* `x-client-info`

* `x-forwarded-user-agent`

* `range`

* `prefer`

Response headers:

* `cf-cache-status`

* `cf-ray`

* `content-location`

* `content-range`

* `content-type`

* `content-length`

* `date`

* `transfer-encoding`

* `x-kong-proxy-latency`

* `x-kong-upstream-latency`

* `sb-gateway-mode`

* `sb-gateway-version`

### Additional request metadata

To attach additional metadata to a request, it is recommended to use the `User-Agent` header for purposes such as device or version identification.

For example:

```

node MyApp/1.2.3 (device-id:abc123)

Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:47.0) Gecko/20100101 Firefox/47.0 MyApp/1.2.3 (Foo v1.3.2; Bar v2.2.2)

```

Do not log Personal Identifiable Information (PII) within the `User-Agent` header, to avoid infringing data protection privacy laws. Overly fine-grained and detailed user agents may allow fingerprinting and identification of the end user through PII.

## Logging Postgres queries

To enable query logs for other categories of statements:

1. [Enable the pgAudit extension](/dashboard/project/_/database/extensions).

2. Configure `pgaudit.log` (see below). Perform a fast reboot if needed.

3. View your query logs under [Logs > Postgres Logs](/dashboard/project/_/logs/postgres-logs).

### Configuring `pgaudit.log`

The stored value under `pgaudit.log` determines the classes of statements that are logged by [pgAudit extension](https://www.pgaudit.org/). Refer to the pgAudit documentation for the [full list of values](https://github.com/pgaudit/pgaudit/blob/master/README.md#pgauditlog).

To enable logging for function calls/do blocks, writes, and DDL statements for a single session, execute the following within the session:

```sql

-- temporary single-session config update

set pgaudit.log = 'function, write, ddl';

```

To *permanently* set a logging configuration (beyond a single session), execute the following, then perform a fast reboot:

```sql

-- equivalent permanent config update.

alter role postgres set pgaudit.log to 'function, write, ddl';

```

To help with debugging, we recommend adjusting the log scope to only relevant statements as having too wide of a scope would result in a lot of noise in your Postgres logs.

Note that in the above example, the role is set to `postgres`. To log user-traffic flowing through the [HTTP APIs](../../guides/database/api#rest-api-overview) powered by PostgREST, set your configuration values for the `authenticator`.

```sql

-- for API-related logs

alter role authenticator set pgaudit.log to 'write';

```

By default, the log level will be set to `log`. To view other levels, run the following:

```sql

-- adjust log level

alter role postgres set pgaudit.log_level to 'info';

alter role postgres set pgaudit.log_level to 'debug5';

```

Note that as per the pgAudit [log\_level documentation](https://github.com/pgaudit/pgaudit/blob/master/README.md#pgauditlog_level), `error`, `fatal`, and `panic` are not allowed.

To reset system-wide settings, execute the following, then perform a fast reboot:

```sql

-- resets stored config.

alter role postgres reset pgaudit.log

```

If any permission errors are encountered when executing `alter role postgres ...`, it is likely that your project has yet to receive the patch to the latest version of [supautils](https://github.com/supabase/supautils), which is currently being rolled out.

### `RAISE`d log messages in Postgres

Messages that are manually logged via `RAISE INFO`, `RAISE NOTICE`, `RAISE WARNING`, and `RAISE LOG` are shown in Postgres Logs. Note that only messages at or above your logging level are shown. Syncing of messages to Postgres Logs may take a few minutes.

If your logs aren't showing, check your logging level by running:

```sql

show log_min_messages;

```

Note that `LOG` is a higher level than `WARNING` and `ERROR`, so if your level is set to `LOG`, you will not see `WARNING` and `ERROR` messages.

## Logging realtime connections

Realtime doesn't log new WebSocket connections or Channel joins by default. Enable connection logging per client by including an `info` `log_level` parameter when instantiating the Supabase client.

```javascript

import { createClient } from '@supabase/supabase-js'

const options = {

realtime: {

params: {

log_level: 'info',

},

},

}

const supabase = createClient('https://xyzcompany.supabase.co', 'publishable-or-anon-key', options)

```

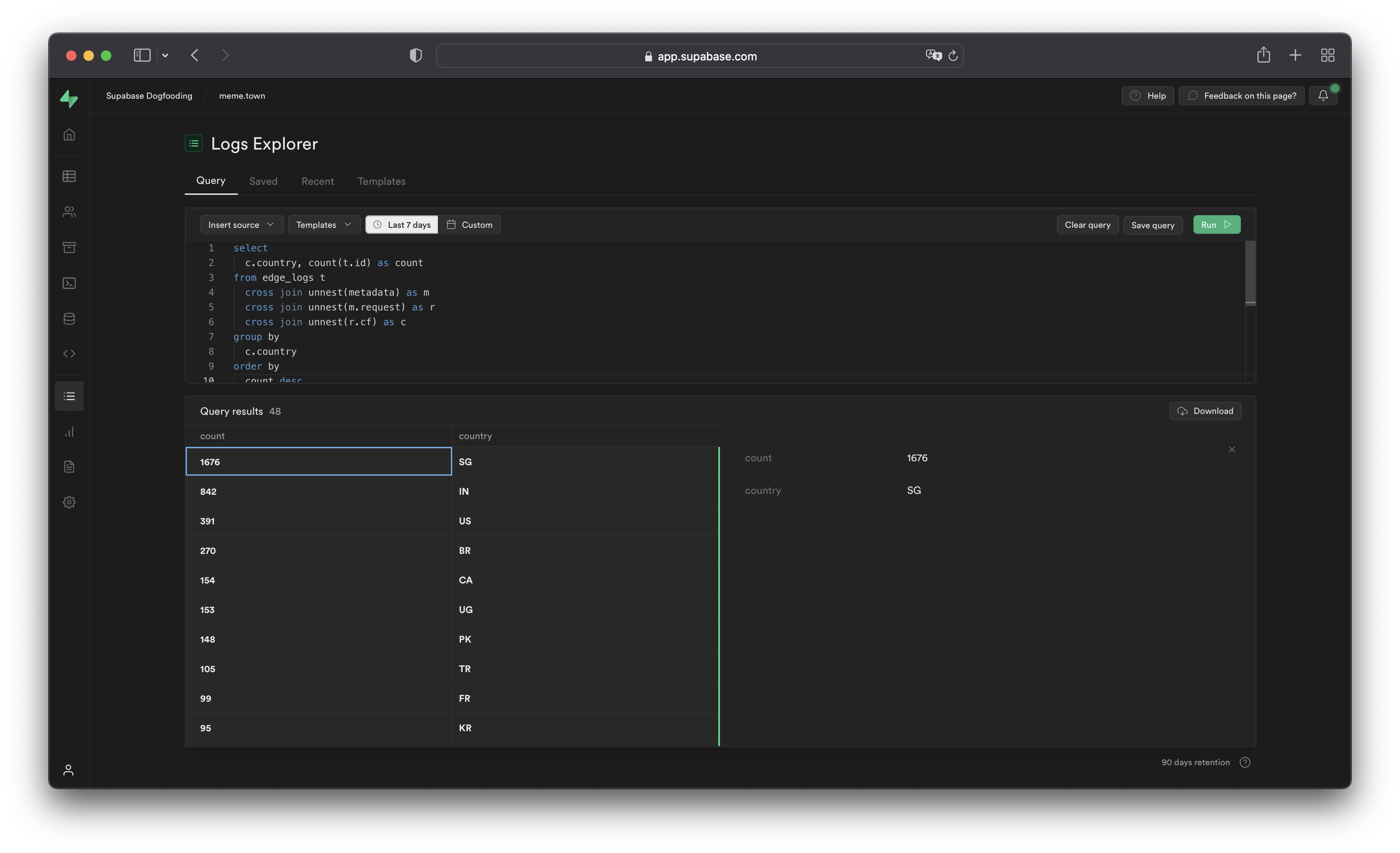

## Logs Explorer

The [Logs Explorer](/dashboard/project/_/logs-explorer) exposes logs from each part of the Supabase stack as a separate table that can be queried and joined using SQL.

You can access the following logs from the **Sources** drop-down:

* `auth_logs`: GoTrue server logs, containing authentication/authorization activity.

* `edge_logs`: Edge network logs, containing request and response metadata retrieved from Cloudflare.

* `function_edge_logs`: Edge network logs for only edge functions, containing network requests and response metadata for each execution.

* `function_logs`: Function internal logs, containing any `console` logging from within the edge function.

* `postgres_logs`: Postgres database logs, containing statements executed by connected applications.

* `realtime_logs`: Realtime server logs, containing client connection information.

* `storage_logs`: Storage server logs, containing object upload and retrieval information.

## Querying with the Logs Explorer

The Logs Explorer uses BigQuery and supports all [available SQL functions and operators](https://cloud.google.com/bigquery/docs/reference/standard-sql/functions-and-operators).

### Timestamp display and behavior

Each log entry is stored with a `timestamp` as a `TIMESTAMP` data type. Use the appropriate [timestamp function](https://cloud.google.com/bigquery/docs/reference/standard-sql/timestamp_functions#timestamp) to utilize the `timestamp` field in a query.

Raw top-level timestamp values are rendered as unix microsecond. To render the timestamps in a human-readable format, use the `DATETIME()` function to convert the unix timestamp display into an ISO-8601 timestamp.

```sql

-- timestamp column without datetime()

select timestamp from ....

-- 1664270180000

-- timestamp column with datetime()

select datetime(timestamp) from ....

-- 2022-09-27T09:17:10.439Z

```

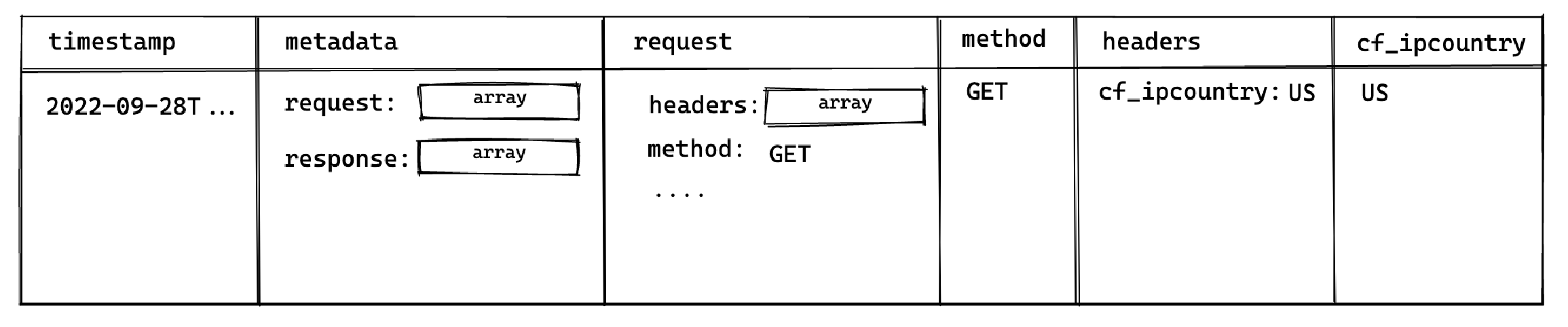

### Unnesting arrays

Each log event stores metadata an array of objects with multiple levels, and can be seen by selecting single log events in the Logs Explorer. To query arrays, use `unnest()` on each array field and add it to the query as a join. This allows you to reference the nested objects with an alias and select their individual fields.

For example, to query the edge logs without any joins:

```sql

select timestamp, metadata from edge_logs as t;

```

The resulting `metadata` key is rendered as an array of objects in the Logs Explorer. In the following diagram, each box represents a nested array of objects:

{/* */}

Perform a `cross join unnest()` to work with the keys nested in the `metadata` key.

To query for a nested value, add a join for each array level:

```sql

select timestamp, request.method, header.cf_ipcountry

from

edge_logs as t

cross join unnest(t.metadata) as metadata

cross join unnest(metadata.request) as request

cross join unnest(request.headers) as header;

```

This surfaces the following columns available for selection:

This allows you to select the `method` and `cf_ipcountry` columns. In JS dot notation, the full paths for each selected column are:

* `metadata[].request[].method`

* `metadata[].request[].headers[].cf_ipcountry`

### LIMIT and result row limitations

The Logs Explorer has a maximum of 1000 rows per run. Use `LIMIT` to optimize your queries by reducing the number of rows returned further.

### Best practices

1. Include a filter over **timestamp**

Querying your entire log history might seem appealing. For **Enterprise** customers that have a large retention range, you run the risk of timeouts due additional time required to scan the larger dataset.

2. Avoid selecting large nested objects. Select individual values instead.

When querying large objects, the columnar storage engine selects each column associated with each nested key, resulting in a large number of columns being selected. This inadvertently impacts the query speed and may result in timeouts or memory errors, especially for projects with a lot of logs.

Instead, select only the values required.

```sql

-- ❌ Avoid doing this

select

datetime(timestamp),

m as metadata -- <- metadata contains many nested keys

from

edge_logs as t

cross join unnest(t.metadata) as m;

-- ✅ Do this

select

datetime(timestamp),

r.method -- <- select only the required values

from

edge_logs as t

cross join unnest(t.metadata) as m

cross join unnest(m.request) as r;

```

### Examples and templates

The Logs Explorer includes **Templates** (available in the Templates tab or the dropdown in the Query tab) to help you get started.

For example, you can enter the following query in the SQL Editor to retrieve each user's IP address:

```sql

select datetime(timestamp), h.x_real_ip

from

edge_logs

cross join unnest(metadata) as m

cross join unnest(m.request) as r

cross join unnest(r.headers) as h

where h.x_real_ip is not null and r.method = "GET";

```

### Logs field reference

Refer to the full field reference for each available source below. Do note that in order to access each nested key, you would need to perform the [necessary unnesting joins](#unnesting-arrays)

{(logConstants) => (

{logConstants.schemas.map((schema) => (

| Path |

Type |

{schema.fields

.sort((a, b) => a.path - b.path)

.map((field) => (

| {field.path} |

{field.type} |

))}

))}

)}

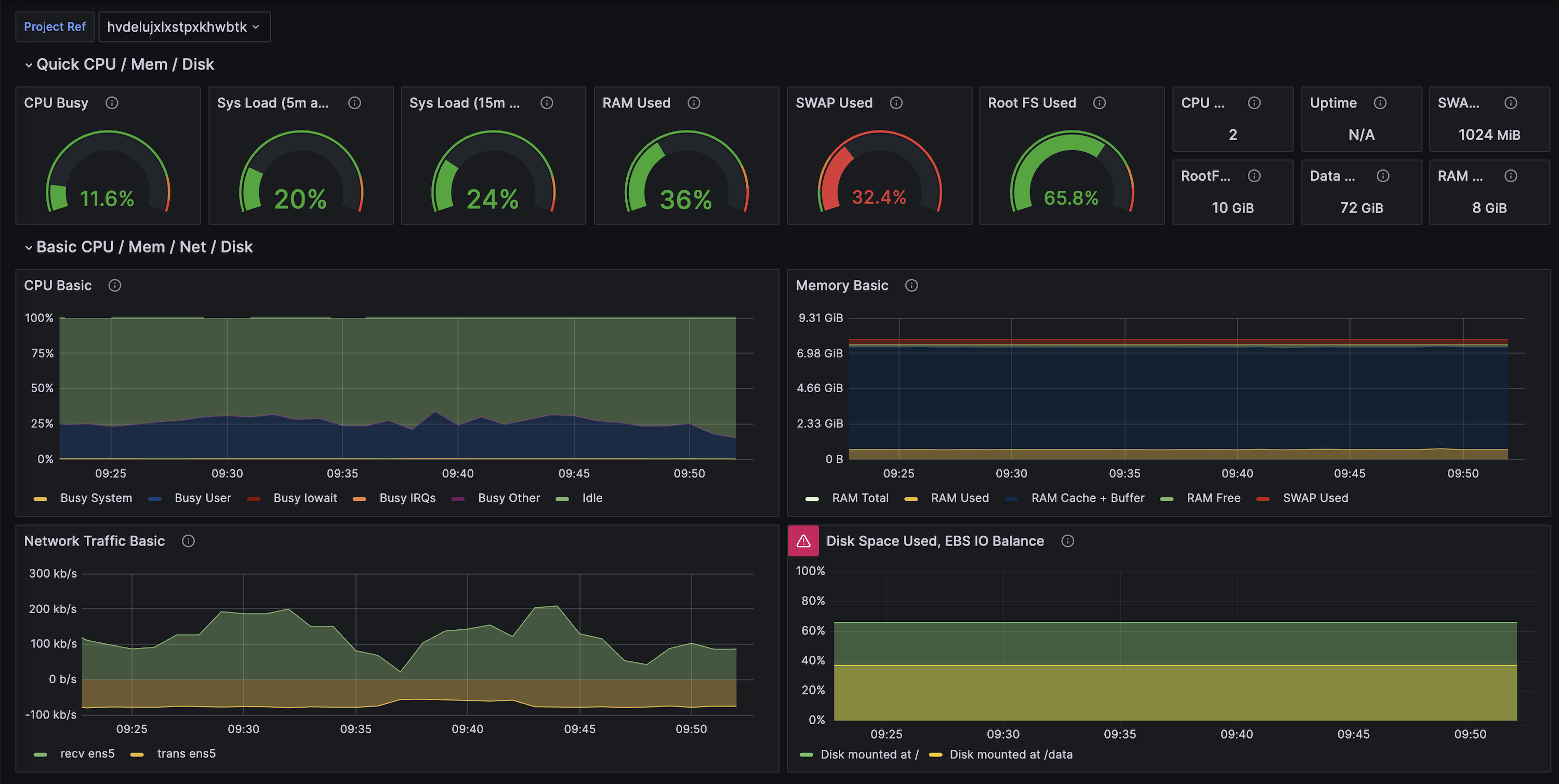

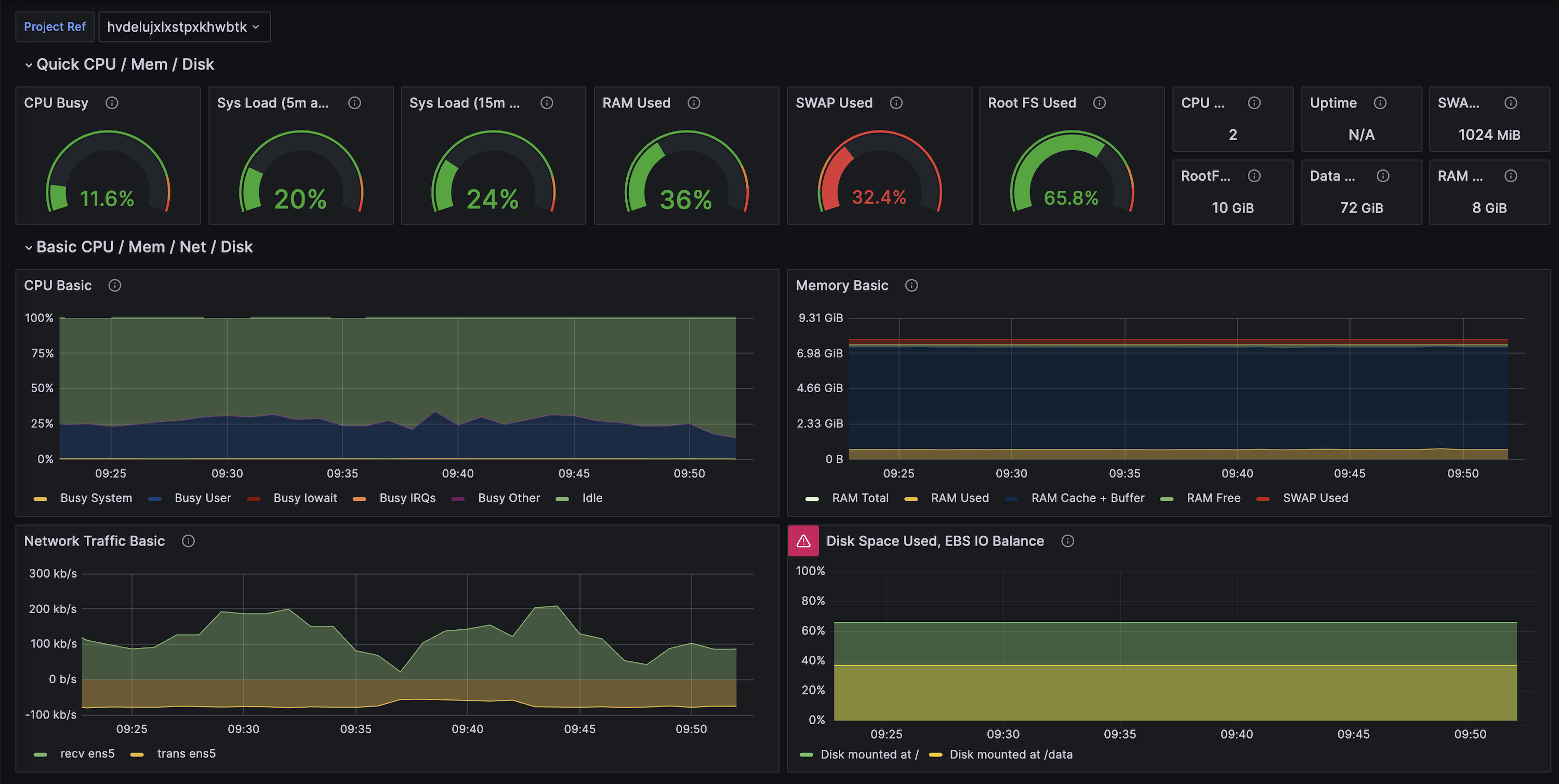

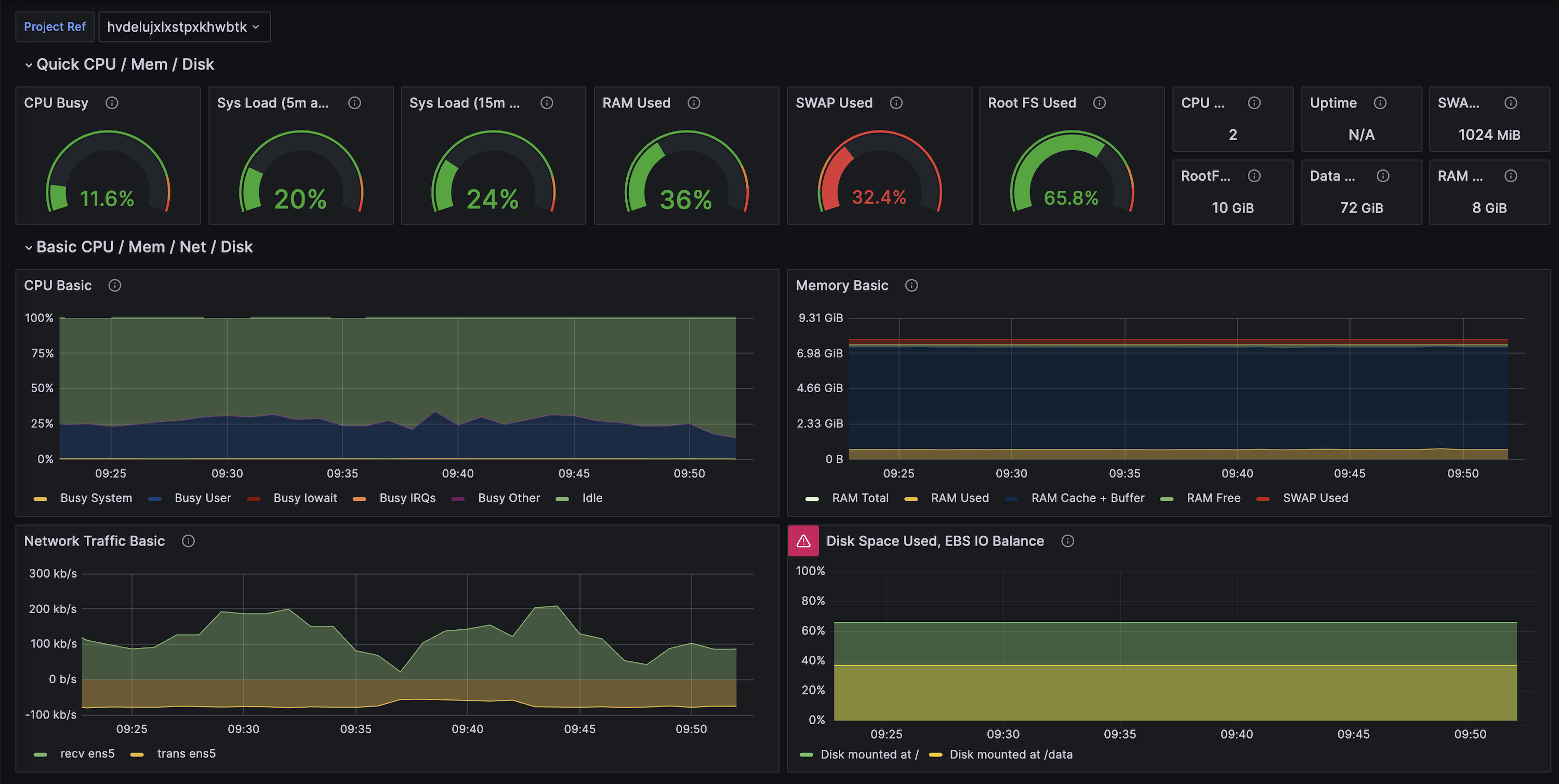

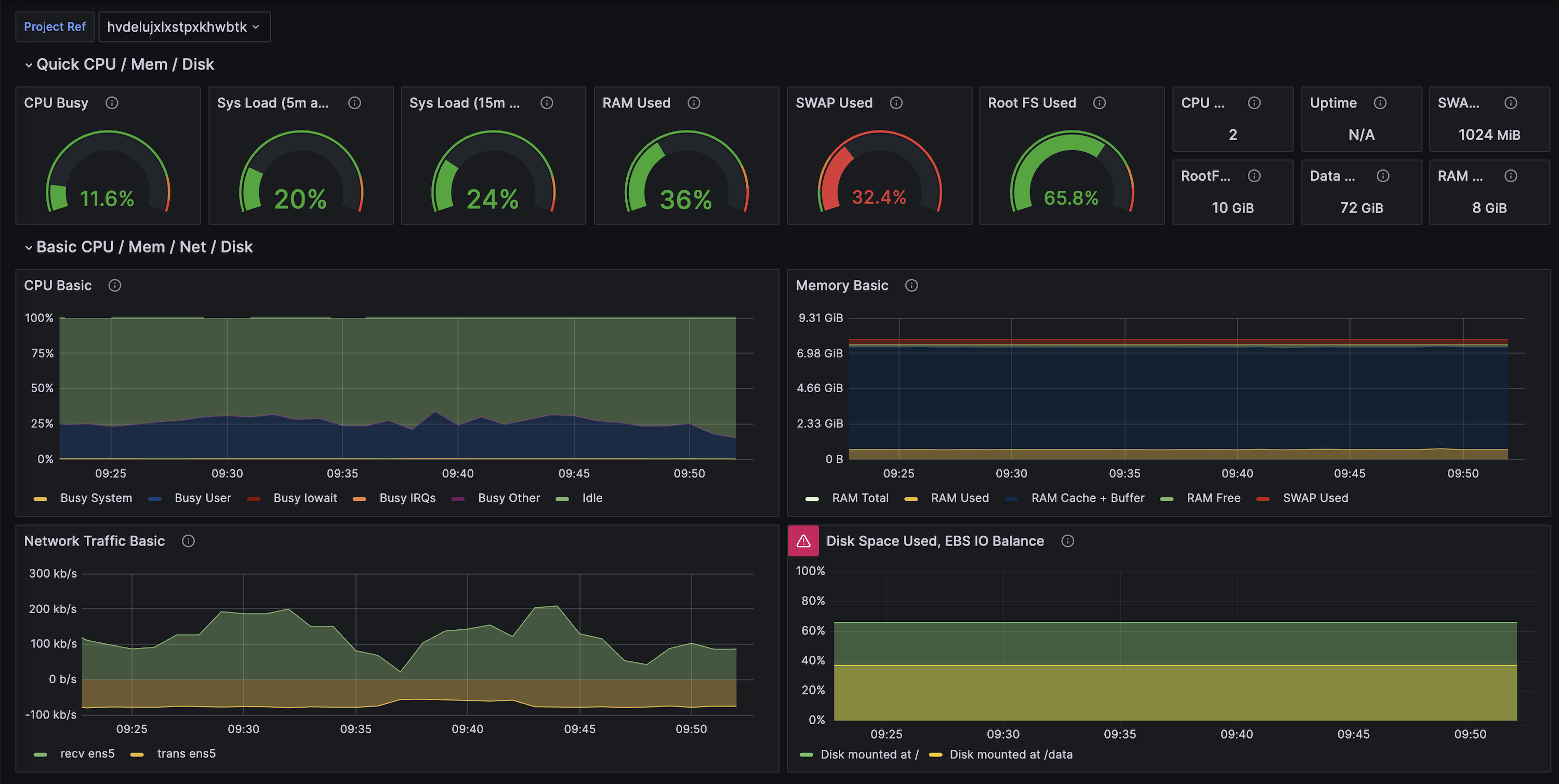

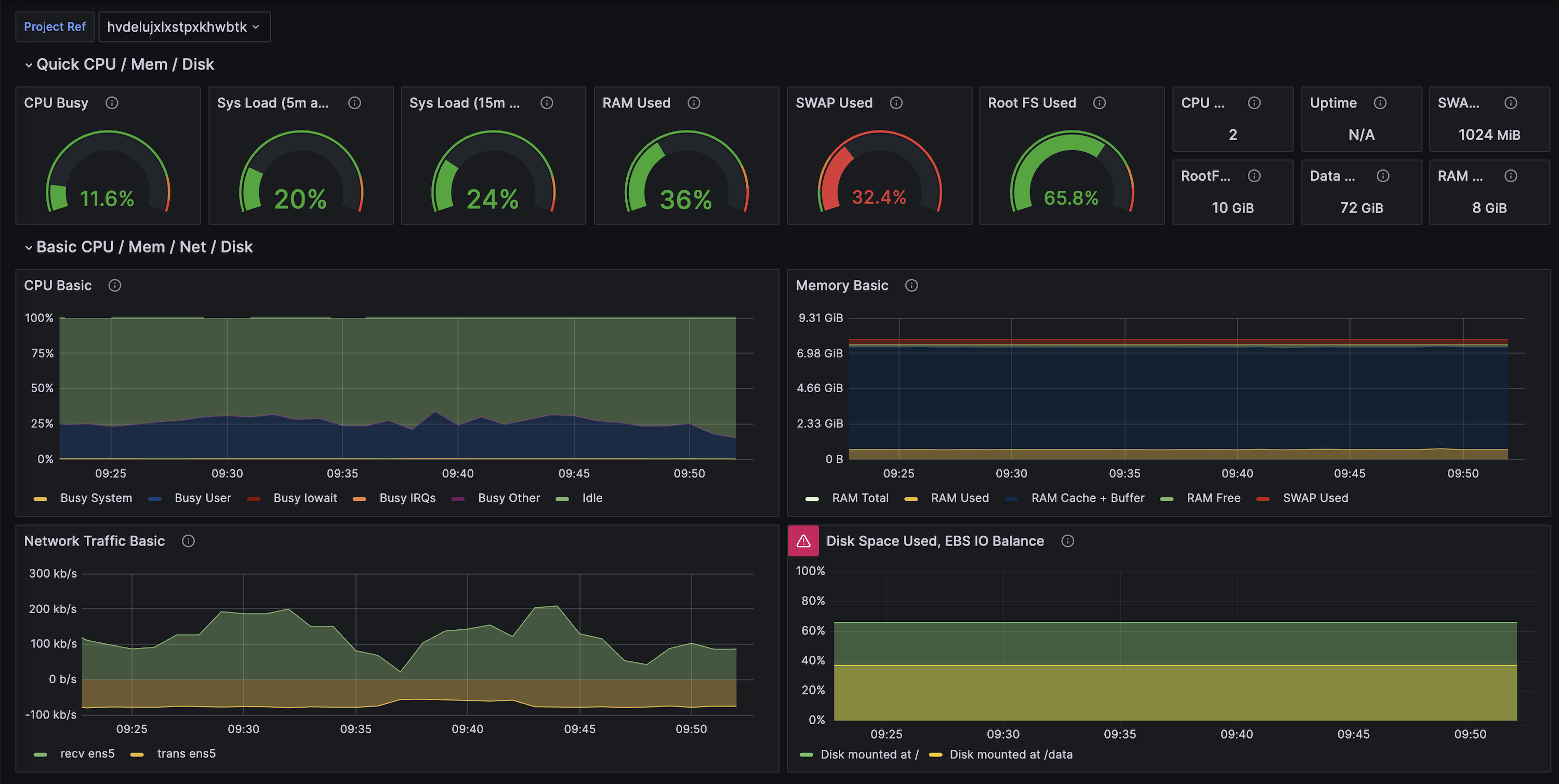

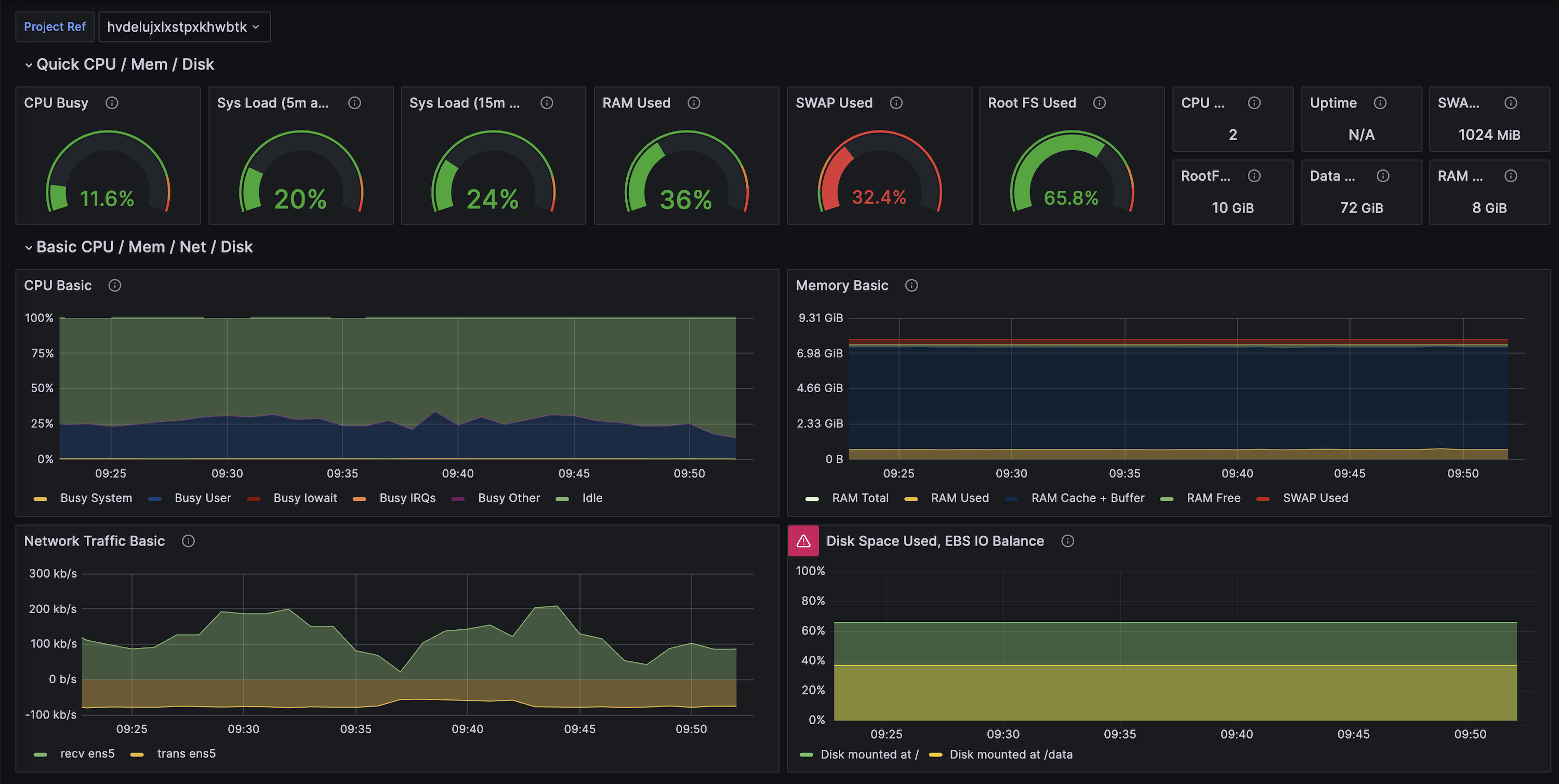

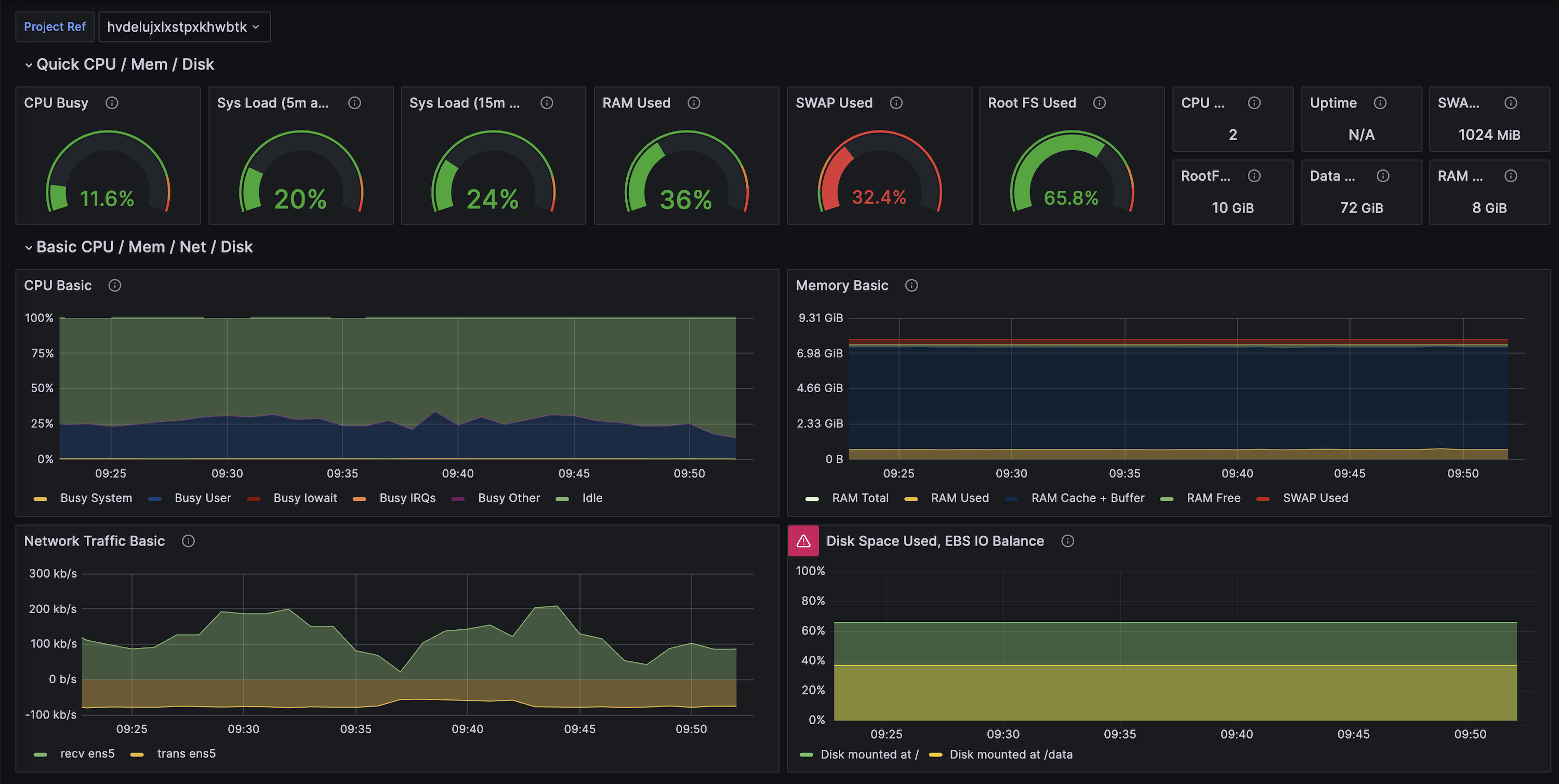

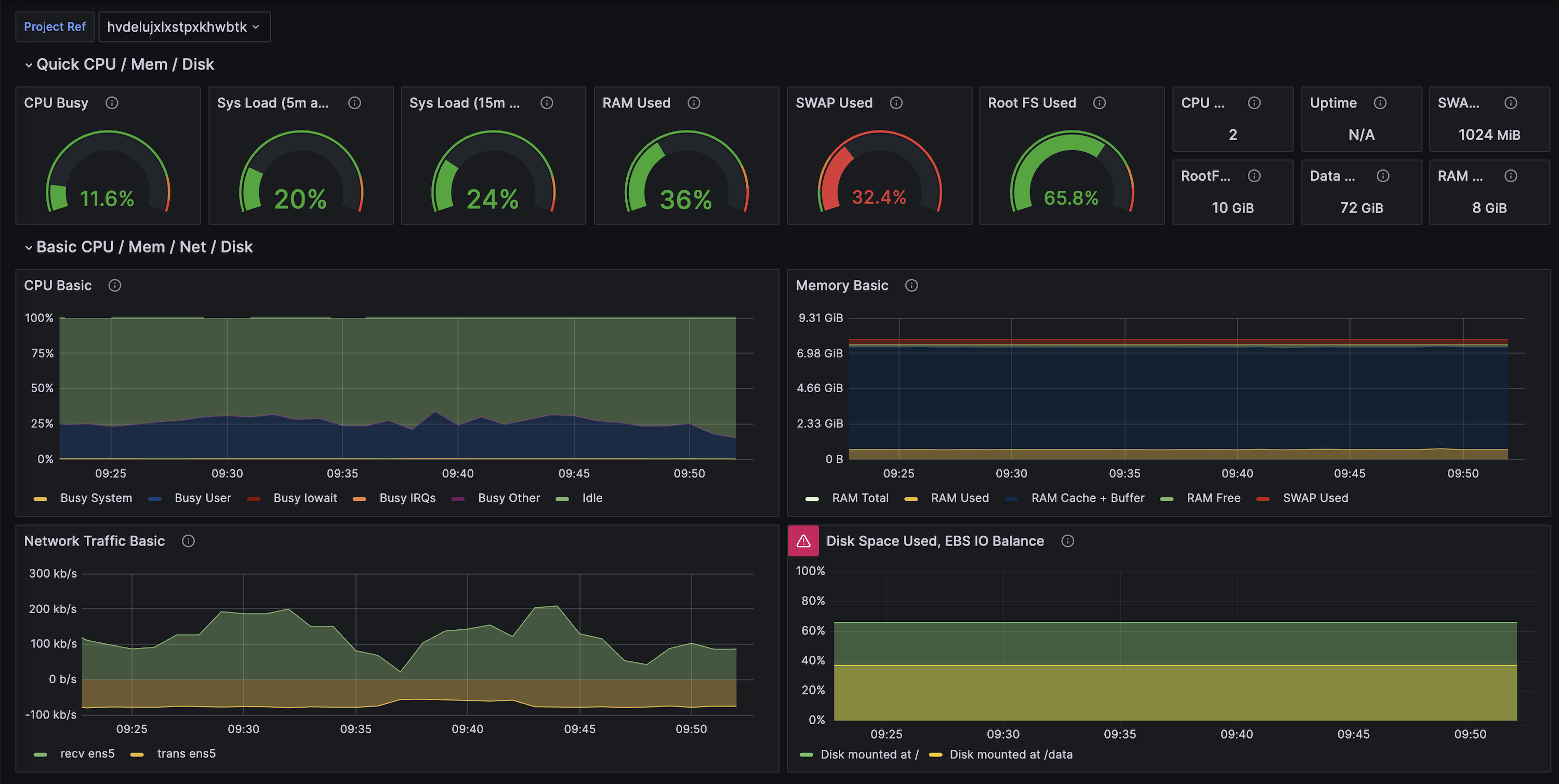

# Metrics API

Every Supabase project exposes a [Prometheus](https://prometheus.io/)-compatible **Metrics API** endpoint that surfaces ~200 Postgres performance and health series. You can scrape it into any observability stack to power custom dashboards, alerting rules, or long-term retention that goes beyond what Supabase Studio provides out of the box.

The Metrics API is currently in beta. Metric names and labels might evolve as we expand the dataset, and the feature is not available in self-hosted Supabase instances.

## What you can do with the Metrics API

* Stream database CPU, IO, WAL, connection, and query stats into Prometheus-compatible systems.

* Combine Supabase metrics with application signals in Grafana, Datadog, or any other observability vendor.

* Reuse our [supabase-grafana dashboard JSON](https://github.com/supabase/supabase-grafana) to bootstrap over 200 ready-made charts.

* Build your own alerting policies (right-sizing, saturation detection, index regression, and more).

What you can do with the Metrics API} id="how-do-i-check-when-a-user-went-through-mfa" className="border-0 px-2 py-4">

Every Supabase project exposes a metrics feed at `https://.supabase.co/customer/v1/privileged/metrics`. Replace `` with the identifier from your project URL or from the dashboard sidebar.

1. Copy your project reference and confirm the base URL using the helper below.

2. Configure your collector to scrape once per minute. The endpoint already emits the full set of metrics on each request.

3. Authenticate with HTTP Basic Auth:

* **Username**: `service_role`

* **Password**: a **Secret API key** (`sb_secret_...`). You can create/copy it in [**Project Settings → API Keys**](/dashboard/project/_/settings/api-keys). For more context, see [Understanding API keys](/docs/guides/api/api-keys).

Testing locally is as simple as running `curl` with your Secret API key:

```bash

curl /customer/v1/privileged/metrics \

--user 'service_role:sb_secret_...'

```

You can provision long-lived automation tokens in two ways:

* Create an account access token once at [**Account Settings > Access Tokens**](/dashboard/account/tokens) and reuse it wherever you configure observability tooling.

* **Optional**: programmatically exchange an access token for project API keys via the [Management API](/docs/reference/api/management-projects-api-keys-retrieve).

```bash

# (Optional) Exchange an account access token for project API keys

export SUPABASE_ACCESS_TOKEN="your-access-token"

export PROJECT_REF="your-project-ref"

curl -H "Authorization: Bearer $SUPABASE_ACCESS_TOKEN" \

"https://api.supabase.com/v1/projects/$PROJECT_REF/api-keys?reveal=true"

```

## Choose your monitoring stack

Pick the workflow that best matches your tooling. Cards link to Supabase-authored guides or vendor integration docs, and some include a “Community” pill when there’s an accompanying vendor reference.

## Additional resources

* [Supabase Grafana repository](https://github.com/supabase/supabase-grafana) for dashboard JSON and alert examples.

* [Grafana Cloud’s Supabase integration doc](https://grafana.com/docs/grafana-cloud/monitor-infrastructure/integrations/integration-reference/integration-supabase/) (community-maintained, built on this Metrics API).

* [Datadog’s Supabase integration doc](https://docs.datadoghq.com/integrations/supabase/) (community-maintained, built on this Metrics API).

* [Log Drains ](/docs/guides/telemetry/log-drains) for exporting event-based telemetry alongside metrics.

* [Query Performance report](/dashboard/project/_/observability/query-performance) for built-in visualizations based on the same underlying metrics.

# Reports

Supabase Reports provide comprehensive observability for your project through dedicated monitoring dashboards that visualize key metrics across your database, auth, storage, realtime, and API systems. Each report offers self-debugging tools to gain actionable insights for optimizing performance and troubleshooting issues.

Reports are only available for projects hosted on the Supabase Cloud platform and are not available for self-hosted instances.

## Using reports

Reports can be filtered by time range to focus your analysis on specific periods. Available time ranges are gated by your organization's plan, with higher-tier plans providing access to longer historical periods.

| Time Range | Free | Pro | Team | Enterprise |

| --------------- | ---- | --- | ---- | ---------- |

| Last 10 minutes | ✅ | ✅ | ✅ | ✅ |

| Last 30 minutes | ✅ | ✅ | ✅ | ✅ |

| Last 60 minutes | ✅ | ✅ | ✅ | ✅ |

| Last 3 hours | ✅ | ✅ | ✅ | ✅ |

| Last 24 hours | ✅ | ✅ | ✅ | ✅ |

| Last 7 days | ❌ | ✅ | ✅ | ✅ |

| Last 14 days | ❌ | ❌ | ✅ | ✅ |

| Last 28 days | ❌ | ❌ | ✅ | ✅ |

***

## Database

The Database report provides the most comprehensive view into your Postgres instance's health and performance characteristics. These charts help you identify performance bottlenecks, resource constraints, and optimization opportunities at a glance.

The following charts are available for Free and Pro plans:

| Chart | Available Plans | Description | Key Insights |

| ---------------------------- | --------------- | -------------------------------------------- | --------------------------------------------- |

| Memory usage | Free, Pro | RAM usage percentage by the database | Memory pressure and resource utilization |

| CPU usage | Free, Pro | Average CPU usage percentage | CPU-intensive query identification |

| Disk IOPS | Free, Pro | Read/write operations per second with limits | IO bottleneck detection and workload analysis |

| Database connections | Free, Pro | Number of pooler connections to the database | Connection pool monitoring |

| Shared Pooler connections | All | Client connections to the shared pooler | Shared pooler usage patterns |

| Dedicated Pooler connections | All | Client connections to PgBouncer | Dedicated pooler connection monitoring |

{/* supa-mdx-lint-disable-next-line Rule001HeadingCase */}

### Advanced Telemetry

The following charts provide a more advanced and detailed view of your database performance and are available only for Team, Enterprise, and Platform plans.

### Memory usage

| Component | Description |

| ------------------- | ------------------------------------------------------ |

| **Used** | RAM actively used by Postgres and the operating system |

| **Cache + buffers** | Memory used for page cache and Postgres buffers |

| **Free** | Available unallocated memory |

How it helps debug issues:

| Issue | Description |

| ------------------------------ | ------------------------------------------------ |

| Memory pressure detection | Identify when free memory is consistently low |

| Cache effectiveness monitoring | Monitor cache performance for query optimization |

| Memory leak detection | Detect inefficient memory usage patterns |

Actions you can take:

| Action | Description |

| --------------------------------------------------------------------------- | ---------------------------------------------- |

| [Upgrade compute size](/docs/guides/platform/compute-and-disk#compute-size) | Increase available memory resources |

| Optimize queries | Reduce memory consumption of expensive queries |

| Tune Postgres configuration | Improve memory management settings |

| Implement application caching | Add query result caching to reduce memory load |

### CPU usage

| Category | Description |

| ---------- | ------------------------------------------------ |

| **System** | CPU time for kernel operations |

| **User** | CPU time for database queries and user processes |

| **IOWait** | CPU time waiting for disk/network IO |

| **IRQs** | CPU time handling interrupts |

| **Other** | CPU time for miscellaneous tasks |

How it helps debug issues:

| Issue | Description |

| ---------------------------------- | -------------------------------------------------- |

| CPU-intensive query identification | Identify expensive queries when User CPU is high |

| IO bottleneck detection | Detect disk/network issues when IOWait is elevated |

| System overhead monitoring | Monitor resource contention and kernel overhead |

Actions you can take:

| Action | Description |

| -------------------------------------------------------------- | --------------------------------------------------------------------------- |

| Optimize CPU-intensive queries | Target queries causing high User CPU usage |

| Address IO bottlenecks | Resolve disk/network issues when IOWait is high |

| [Upgrade compute size](/docs/guides/platform/compute-and-disk) | Increase available CPU capacity |

| Implement proper indexing | Use [query optimization](/docs/guides/database/postgres/indexes) techniques |

### Disk input/output operations per second (IOPS)

This chart displays read and write IOPS with a reference line showing your compute size's maximum IOPS capacity.

How it helps debug issues:

| Issue | Description |

| --------------------------------- | ---------------------------------------------------------------- |

| Disk IO bottleneck identification | Identify when disk IO becomes a performance constraint |

| Workload pattern analysis | Distinguish between read-heavy vs write-heavy operations |

| Performance correlation | Spot disk activity spikes that correlate with performance issues |

Actions you can take:

| Action | Description |

| -------------------------------------------------------------- | --------------------------------------------------------- |

| Optimize indexing | Reduce high read IOPS through better query indexing |

| Consider read replicas | Distribute read-heavy workloads across multiple instances |

| Batch write operations | Reduce write IOPS by grouping database writes |

| [Upgrade compute size](/docs/guides/platform/compute-and-disk) | Increase IOPS limits with larger compute instances |

### Disk throughput

Available on Team and Enterprise plans.

This chart displays read and write throughput (bytes per second) with a reference line showing your compute size's maximum disk throughput.

How it helps debug issues:

| Issue | Description |

| ------------------------------------ | ------------------------------------------------------- |

| Throughput bottleneck identification | Spot when disk bandwidth is saturated |

| Workload pattern analysis | Differentiate read-heavy vs write-heavy bandwidth usage |

| Performance correlation | Correlate spikes with query performance changes |

Actions you can take:

| Action | Description |

| -------------------------------------------------------------- | ------------------------------------------------------------- |

| Optimize query patterns | Reduce large sequential reads/writes |

| Tune caching and batching | Minimize repeated disk access and improve throughput headroom |

| [Upgrade compute size](/docs/guides/platform/compute-and-disk) | Increase throughput limits for sustained workloads |

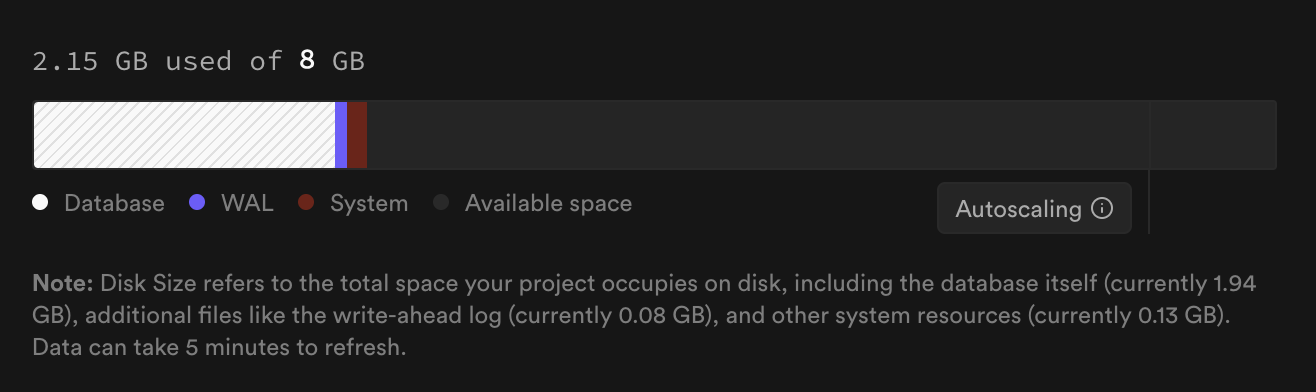

### Disk size

| Component | Description |

| ------------ | --------------------------------------------------------- |

| **Database** | Space used by your actual database data (tables, indexes) |

| **WAL** | Space used by Write-Ahead Logging |

| **System** | Reserved space for system operations |

How it helps debug issues:

| Issue | Description |

| ----------------------------- | ------------------------------------------- |

| Space consumption monitoring | Track disk usage trends over time |

| Growth pattern identification | Identify rapid growth requiring attention |

| Capacity planning | Plan upgrades before hitting storage limits |

Actions you can take:

| Action | Description |

| -------------------------------------------------------------------------------- | -------------------------------------------------------------------- |

| Run [VACUUM](https://www.postgresql.org/docs/current/sql-vacuum.html) operations | Reclaim dead tuple space and optimize storage |

| Analyze large tables | Use CLI commands like `table-sizes` to identify optimization targets |

| Implement data archival | Archive historical data to reduce active storage needs |

| [Upgrade disk size](/docs/guides/platform/database-size) | Increase storage capacity when approaching limits |

### Database connections

| Connection Type | Description |

| --------------- | ------------------------------------------------ |

| **Postgres** | Direct connections from your application |

| **PostgREST** | Connections from the PostgREST API layer |

| **Reserved** | Administrative connections for Supabase services |

| **Auth** | Connections from Supabase Auth service |

| **Storage** | Connections from Supabase Storage service |

| **Other roles** | Miscellaneous database connections |

How it helps debug issues:

| Issue | Description |

| ------------------------------- | ----------------------------------------------------------- |

| Connection pool exhaustion | Identify when approaching maximum connection limits |

| Connection leak detection | Spot applications not properly closing connections |

| Service distribution monitoring | Monitor connection usage across different Supabase services |

Actions you can take:

| Action | Description |

| ------------------------------------------------------------------------------------------ | --------------------------------------------------------------- |

| [Upgrade compute size](/docs/guides/platform/compute-and-disk#compute-size) | Increase maximum connection limits |

| Implement [connection pooling](/docs/guides/database/connecting-to-postgres#shared-pooler) | Optimize connection management for high direct connection usage |

| Review application code | Ensure proper connection handling and cleanup |

## Auth

The Auth report focuses on user authentication patterns and behaviors within your Supabase project.

| Chart | Description | Key Insights |

| ------------------------ | --------------------------------------------- | ----------------------------------------------- |

| Active Users | Count of unique users performing auth actions | User engagement and retention patterns |

| Sign In Attempts by Type | Breakdown of authentication methods used | Password vs OAuth vs magic link preferences |

| Sign Ups | Total new user registrations | Growth trends and onboarding funnel performance |

| Auth Errors | Error rates grouped by status code | Authentication friction and security issues |

| Password Reset Requests | Volume of password recovery attempts | User experience pain points |

## Storage

The Storage report provides visibility into how your Supabase Storage is being utilized, including request patterns, performance characteristics, and caching effectiveness.

| Chart | Description | Key Insights |

| --------------- | ------------------------------------------ | ------------------------------------------------------ |

| Total Requests | Overall request volume to Storage | Traffic patterns and usage trends |

| Response Speed | Average response time for storage requests | Performance bottlenecks and optimization opportunities |

| Network Traffic | Ingress and egress usage | Data transfer costs and CDN effectiveness |

| Request Caching | Cache hit rates and miss patterns | CDN performance and cost optimization |

| Top Routes | Most frequently accessed storage paths | Popular content and usage patterns |

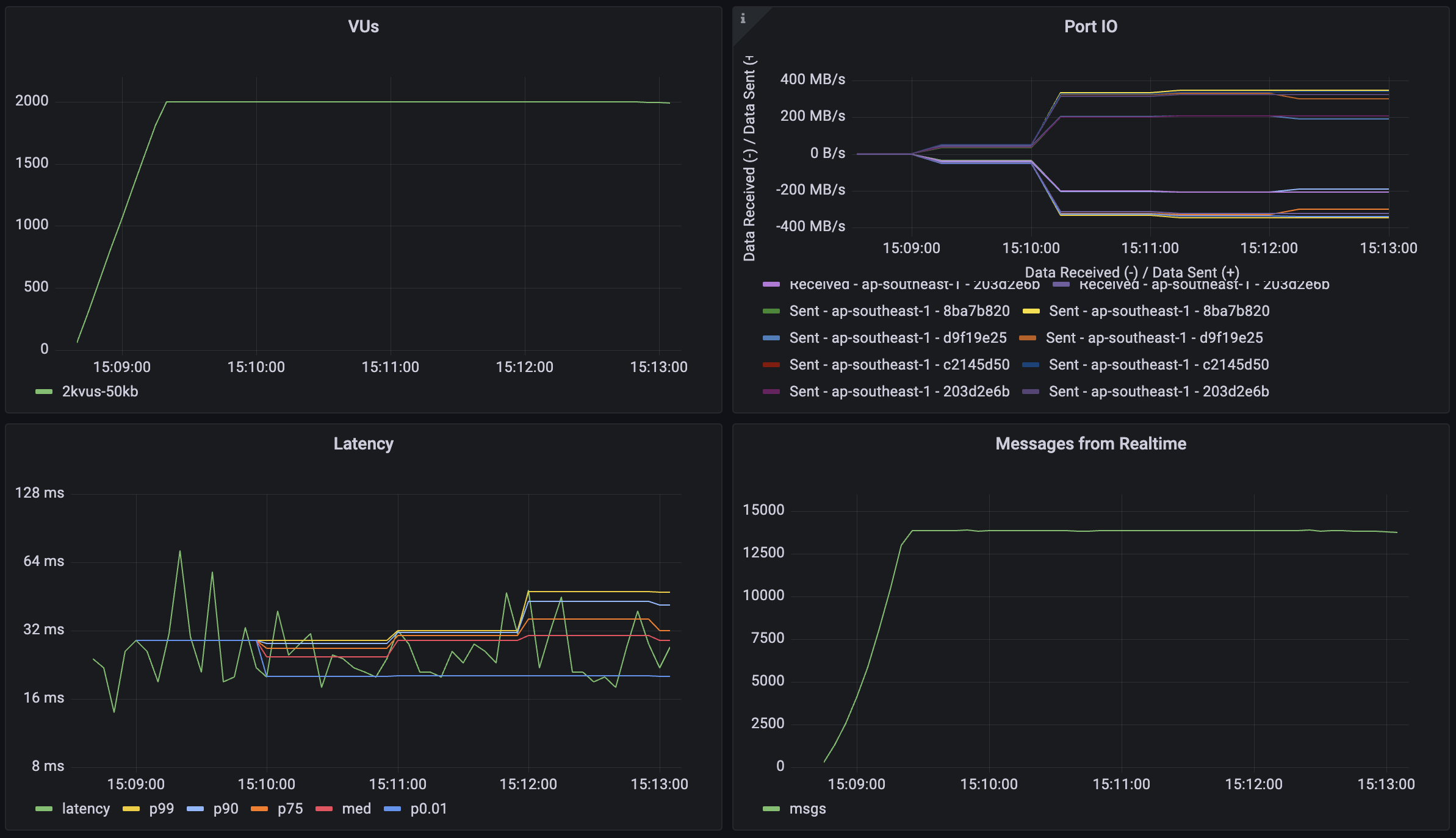

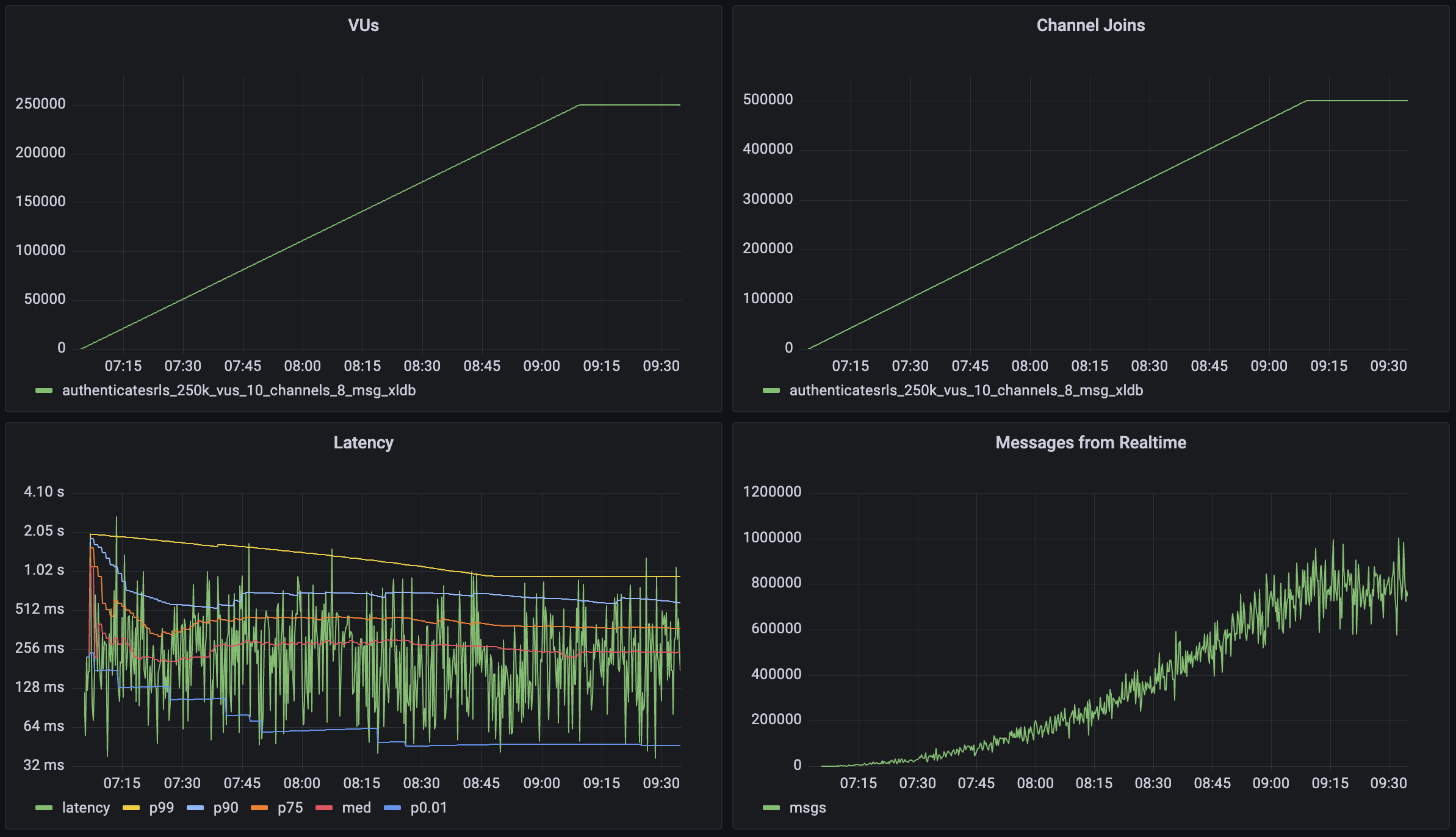

## Realtime

The Realtime report tracks WebSocket connections, channel activity, and real-time event patterns in your Supabase project.

| Chart | Description | Key Insights |

| ---------------------------------------------------------- | --------------------------------------------------------------------------------------- | ------------------------------------------------- |

| Realtime Connections | Active WebSocket connections over time | Concurrent user activity and connection stability |